diff --git a/.github/workflows/python-pytest.yml b/.github/workflows/python-pytest.yml

index 09678571..a0de909d 100644

--- a/.github/workflows/python-pytest.yml

+++ b/.github/workflows/python-pytest.yml

@@ -8,6 +8,9 @@ on:

branches: [ master ]

pull_request:

branches: [ master ]

+ schedule:

+ # since * is a special character in YAML you have to quote this string

+ - cron: '0 22 1/7 * *'

jobs:

test_default:

@@ -16,10 +19,12 @@ jobs:

fail-fast: false

matrix:

os: [windows-latest, ubuntu-latest]

- python-version: ["3.7", "3.8", "3.9", "3.10"]

+ python-version: ["3.9", "3.10", "3.11", "3.12"]

include:

- - os: macos-latest

+ - os: ubuntu-22.04

python-version: 3.8

+# - os: macos-12

+# python-version: 3.11

steps:

- uses: actions/checkout@v2

- name: Set up Python ${{ matrix.python-version }}

@@ -29,7 +34,7 @@ jobs:

- name: Install dependencies

run: |

python -m pip install --upgrade pip

- pip install -q "XlsxWriter<3.0.5" -r requirements.txt -r requirements-zhcn.txt -r requirements-dask.txt -r requirements-extra.txt

+ pip install -q "numpy<2.0.0" "pandas<2.0.0" "XlsxWriter<3.0.5" -r requirements.txt -r requirements-zhcn.txt -r requirements-dask.txt -r requirements-extra.txt

pip install -q pytest-cov==2.4.0 python-coveralls codacy-coverage

pip list

- name: Test with pytest

@@ -37,38 +42,38 @@ jobs:

pytest --cov=hypernets --durations=30

- test_with_dask_ft:

+ test_dask_ft:

runs-on: ${{ matrix.os }}

strategy:

fail-fast: false

matrix:

# os: [ubuntu-latest, windows-latest]

- os: [windows-latest, ubuntu-latest]

- python-version: [3.7, 3.8]

+ os: [ubuntu-22.04, ]

+ python-version: [3.8, ]

ft-version: [0.27]

woodwork-version: [0.13.0]

dask-version: [2021.1.1, 2021.7.2]

- include:

- - os: ubuntu-20.04

- python-version: 3.6

- ft-version: 0.23

- woodwork-version: 0.1.0

- dask-version: 2021.1.1

- - os: ubuntu-latest

- python-version: 3.7

- ft-version: 1.2

- woodwork-version: 0.13.0

- dask-version: 2021.10.0

- - os: ubuntu-latest

- python-version: 3.8

- ft-version: 1.2

- woodwork-version: 0.13.0

- dask-version: 2021.10.0

- - os: windows-latest

- python-version: 3.8

- ft-version: 1.2

- woodwork-version: 0.13.0

- dask-version: 2021.10.0

+# include:

+# - os: ubuntu-22.04

+# python-version: 3.8

+# ft-version: 1.2

+# woodwork-version: 0.13.0

+# dask-version: 2022.12.1

+# - os: windows-latest

+# python-version: 3.8

+# ft-version: 1.2

+# woodwork-version: 0.13.0

+# dask-version: 2022.12.1

+# - os: ubuntu-20.04

+# python-version: 3.6

+# ft-version: 0.23

+# woodwork-version: 0.1.0

+# dask-version: 2021.1.1

+# - os: ubuntu-20.04

+# python-version: 3.7

+# ft-version: 1.2

+# woodwork-version: 0.13.0

+# dask-version: 2021.10.0

steps:

- uses: actions/checkout@v2

- name: Set up Python ${{ matrix.python-version }}

@@ -79,20 +84,20 @@ jobs:

run: |

python -m pip install --upgrade pip

pip install -q dask==${{ matrix.dask-version }} distributed==${{ matrix.dask-version }} dask-ml "featuretools==${{ matrix.ft-version }}" woodwork==${{ matrix.woodwork-version }} "pandas<1.5.0"

- pip install -q -r requirements.txt -r requirements-zhcn.txt -r requirements-extra.txt "scikit-learn<1.1.0" "XlsxWriter<3.0.5" "pyarrow<=4.0.0"

+ pip install -q -r requirements.txt -r requirements-zhcn.txt -r requirements-extra.txt "pandas<2.0" "scikit-learn<1.1.0" "XlsxWriter<3.0.5" "pyarrow<=4.0.0"

pip install -q pytest-cov==2.4.0 python-coveralls codacy-coverage

pip list

- name: Test with pytest

run: |

pytest --cov=hypernets --durations=30

- test_without_daskml:

+ test_without_dask_ft:

runs-on: ${{ matrix.os }}

strategy:

fail-fast: false

matrix:

- os: [windows-latest, ubuntu-latest]

- python-version: [3.7, 3.8]

+ os: [ubuntu-22.04, ]

+ python-version: [3.8, ]

steps:

- uses: actions/checkout@v2

- name: Set up Python ${{ matrix.python-version }}

@@ -102,35 +107,36 @@ jobs:

- name: Install dependencies

run: |

python -m pip install --upgrade pip

- pip install -q -r requirements.txt "scikit-learn<1.1.0" "XlsxWriter<3.0.5"

+ pip install -q -r requirements.txt "pandas<2.0" "scikit-learn<1.1.0" "XlsxWriter<3.0.5"

pip install -q pytest-cov==2.4.0 python-coveralls codacy-coverage

pip list

- name: Test with pytest

run: |

pytest --cov=hypernets --durations=30

-

- test_without_geohash:

- runs-on: ${{ matrix.os }}

- strategy:

- fail-fast: false

- matrix:

- os: [ubuntu-latest, ]

- python-version: [3.7, 3.8]

- dask-version: [2021.7.2,]

- steps:

- - uses: actions/checkout@v2

- - name: Set up Python ${{ matrix.python-version }}

- uses: actions/setup-python@v2

- with:

- python-version: ${{ matrix.python-version }}

- - name: Install dependencies

- run: |

- python -m pip install --upgrade pip

- pip install -q dask==${{ matrix.dask-version }} distributed==${{ matrix.dask-version }} dask-ml "pandas<1.5.0"

- pip install -q -r requirements.txt -r requirements-zhcn.txt "scikit-learn<1.1.0" "XlsxWriter<3.0.5"

- pip install -q pytest-cov==2.4.0 python-coveralls codacy-coverage

- pip list

- - name: Test with pytest

- run: |

- pytest --cov=hypernets --durations=30

+#

+# test_without_geohash:

+# runs-on: ${{ matrix.os }}

+# strategy:

+# fail-fast: false

+# matrix:

+# os: [ubuntu-latest, ]

+# python-version: [3.7, 3.8]

+# # dask-version: [2021.7.2,]

+# steps:

+# - uses: actions/checkout@v2

+# - name: Set up Python ${{ matrix.python-version }}

+# uses: actions/setup-python@v2

+# with:

+# python-version: ${{ matrix.python-version }}

+# # # pip install -q dask==${{ matrix.dask-version }} distributed==${{ matrix.dask-version }} dask-ml "pandas<1.5.0"

+# - name: Install dependencies

+# run: |

+# python -m pip install --upgrade pip

+# pip install -q "dask<=2023.2.0" "distributed<=2023.2.0" dask-ml "pandas<1.5.0"

+# pip install -q -r requirements.txt -r requirements-zhcn.txt "pandas<2.0" "scikit-learn<1.1.0" "XlsxWriter<3.0.5"

+# pip install -q pytest-cov==2.4.0 python-coveralls codacy-coverage

+# pip list

+# - name: Test with pytest

+# run: |

+# pytest --cov=hypernets --durations=30

diff --git a/CODE_OF_CONDUCT.md b/CODE_OF_CONDUCT.md

new file mode 100644

index 00000000..177aa746

--- /dev/null

+++ b/CODE_OF_CONDUCT.md

@@ -0,0 +1,75 @@

+

+# Contributor Covenant Code of Conduct

+

+## Our Pledge

+

+We as members, contributors, and leaders pledge to make participation in our

+community a harassment-free experience for everyone, regardless of age, body

+size, visible or invisible disability, ethnicity, sex characteristics, gender

+identity and expression, level of experience, education, socio-economic status,

+nationality, personal appearance, race, caste, color, religion, or sexual

+identity and orientation.

+

+We pledge to act and interact in ways that contribute to an open, welcoming,

+diverse, inclusive, and healthy community.

+

+## Our Standards

+

+Examples of behavior that contributes to a positive environment for our

+community include:

+

+* Demonstrating empathy and kindness toward other people

+* Being respectful of differing opinions, viewpoints, and experiences

+* Giving and gracefully accepting constructive feedback

+* Accepting responsibility and apologizing to those affected by our mistakes,

+ and learning from the experience

+* Focusing on what is best not just for us as individuals, but for the overall

+ community

+

+Examples of unacceptable behavior include:

+

+* The use of sexualized language or imagery, and sexual attention or advances of

+ any kind

+* Trolling, insulting or derogatory comments, and personal or political attacks

+* Public or private harassment

+* Publishing others' private information, such as a physical or email address,

+ without their explicit permission

+* Other conduct which could reasonably be considered inappropriate in a

+ professional setting

+

+## Enforcement Responsibilities

+

+Community leaders are responsible for clarifying and enforcing our standards of

+acceptable behavior and will take appropriate and fair corrective action in

+response to any behavior that they deem inappropriate, threatening, offensive,

+or harmful.

+

+Community leaders have the right and responsibility to remove, edit, or reject

+comments, commits, code, wiki edits, issues, and other contributions that are

+not aligned to this Code of Conduct, and will communicate reasons for moderation

+decisions when appropriate.

+

+## Scope

+

+This Code of Conduct applies within all community spaces, and also applies when

+an individual is officially representing the community in public spaces.

+Examples of representing our community include using an official e-mail address,

+posting via an official social media account, or acting as an appointed

+representative at an online or offline event.

+

+## Enforcement

+

+Instances of abusive, harassing, or otherwise unacceptable behavior may be

+reported to the community leaders responsible for enforcement at

+dlab-dat@zetyun.com.

+All complaints will be reviewed and investigated promptly and fairly.

+

+All community leaders are obligated to respect the privacy and security of the

+reporter of any incident.

+

+

+## Attribution

+

+This Code of Conduct is adapted from the Contributor Covenant,

+version 2.1, available at

+[https://www.contributor-covenant.org/version/2/1/code_of_conduct.html][v2.1].

\ No newline at end of file

diff --git a/README.md b/README.md

index 81b702ce..72063931 100644

--- a/README.md

+++ b/README.md

@@ -1,5 +1,5 @@

- +

+ [](https://pypi.org/project/hypernets)

@@ -11,6 +11,7 @@ Dear folks, we are offering challenging opportunities located in Beijing for bot

## Hypernets: A General Automated Machine Learning Framework

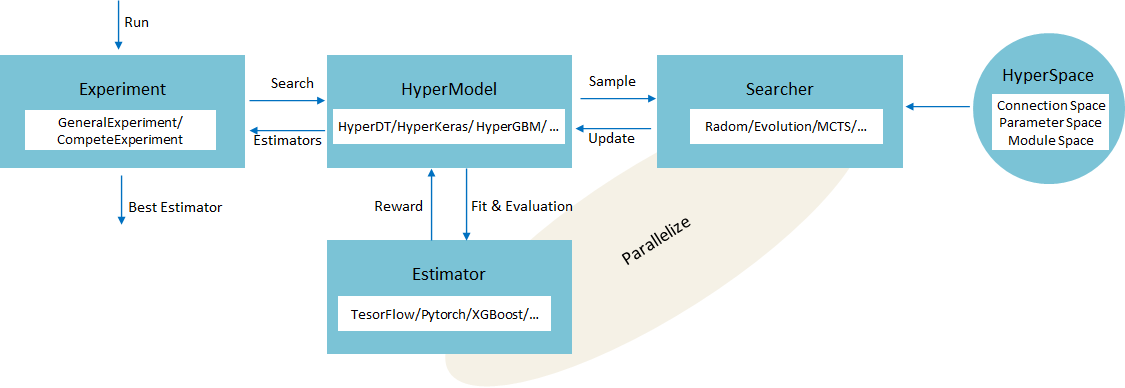

Hypernets is a general AutoML framework, based on which it can implement automatic optimization tools for various machine learning frameworks and libraries, including deep learning frameworks such as tensorflow, keras, pytorch, and machine learning libraries like sklearn, lightgbm, xgboost, etc.

+It also adopted various state-of-the-art optimization algorithms, including but not limited to evolution algorithm, monte carlo tree search for single objective optimization and multi-objective optimization algorithms such as MOEA/D,NSGA-II,R-NSGA-II.

We introduced an abstract search space representation, taking into account the requirements of hyperparameter optimization and neural architecture search(NAS), making Hypernets a general framework that can adapt to various automated machine learning needs. As an abstraction computing layer, tabular toolbox, has successfully implemented in various tabular data types: pandas, dask, cudf, etc.

@@ -18,14 +19,20 @@ We introduced an abstract search space representation, taking into account the r

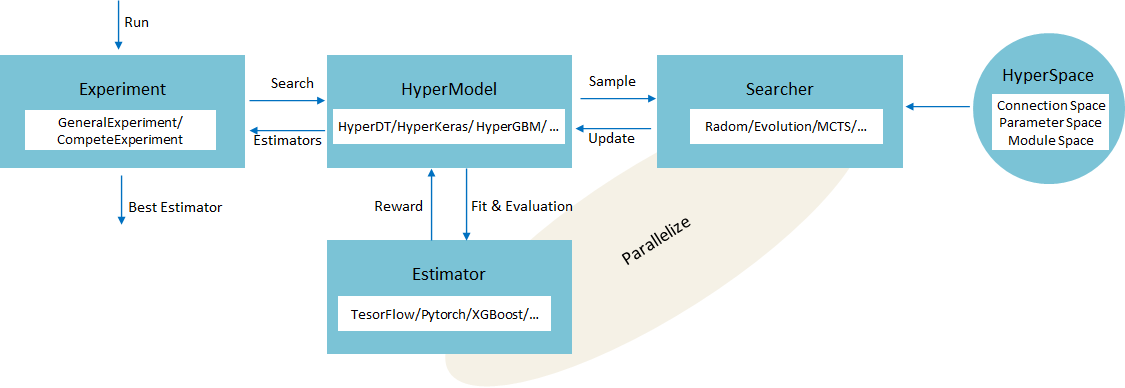

## Overview

### Conceptual Model

[](https://pypi.org/project/hypernets)

@@ -11,6 +11,7 @@ Dear folks, we are offering challenging opportunities located in Beijing for bot

## Hypernets: A General Automated Machine Learning Framework

Hypernets is a general AutoML framework, based on which it can implement automatic optimization tools for various machine learning frameworks and libraries, including deep learning frameworks such as tensorflow, keras, pytorch, and machine learning libraries like sklearn, lightgbm, xgboost, etc.

+It also adopted various state-of-the-art optimization algorithms, including but not limited to evolution algorithm, monte carlo tree search for single objective optimization and multi-objective optimization algorithms such as MOEA/D,NSGA-II,R-NSGA-II.

We introduced an abstract search space representation, taking into account the requirements of hyperparameter optimization and neural architecture search(NAS), making Hypernets a general framework that can adapt to various automated machine learning needs. As an abstraction computing layer, tabular toolbox, has successfully implemented in various tabular data types: pandas, dask, cudf, etc.

@@ -18,14 +19,20 @@ We introduced an abstract search space representation, taking into account the r

## Overview

### Conceptual Model

- +

+

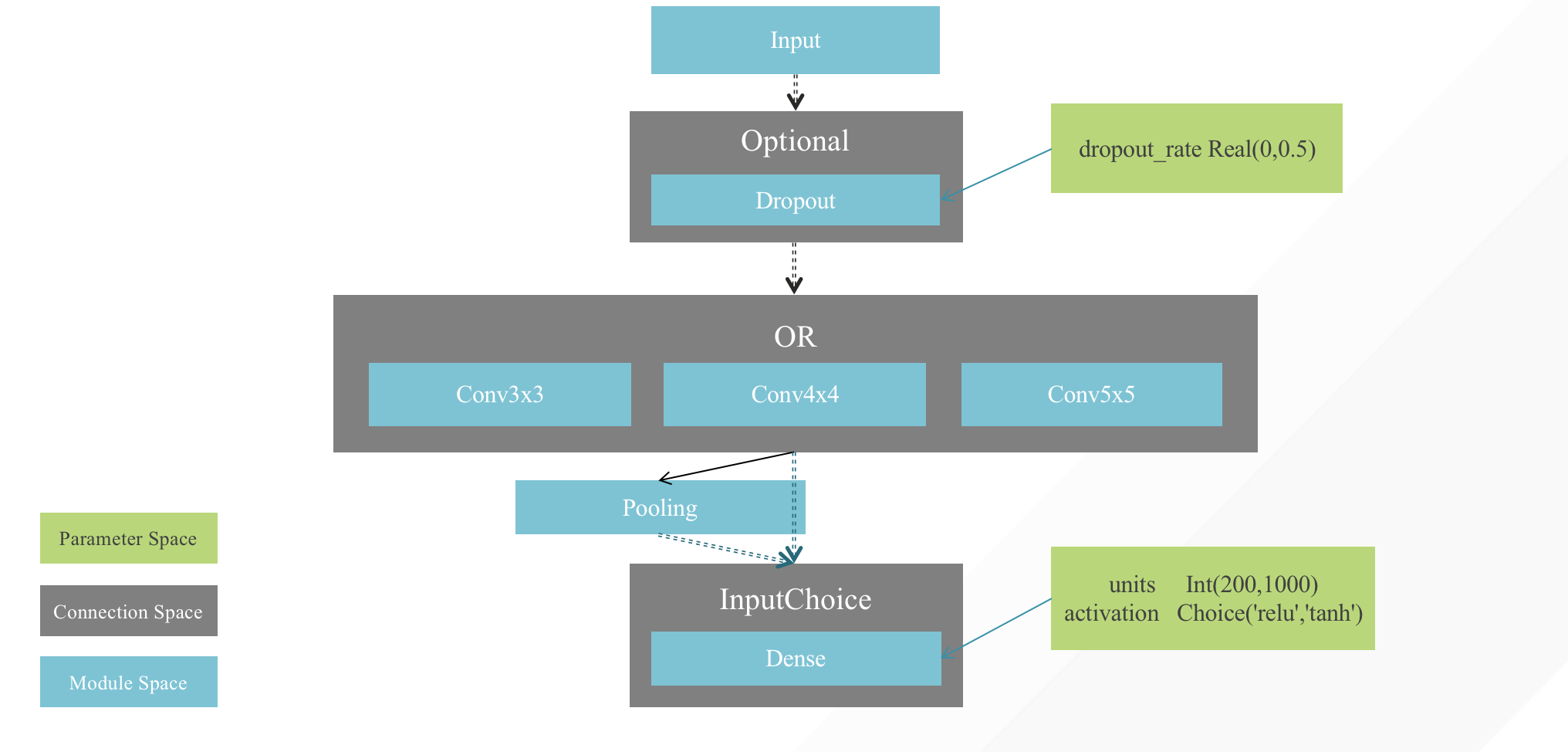

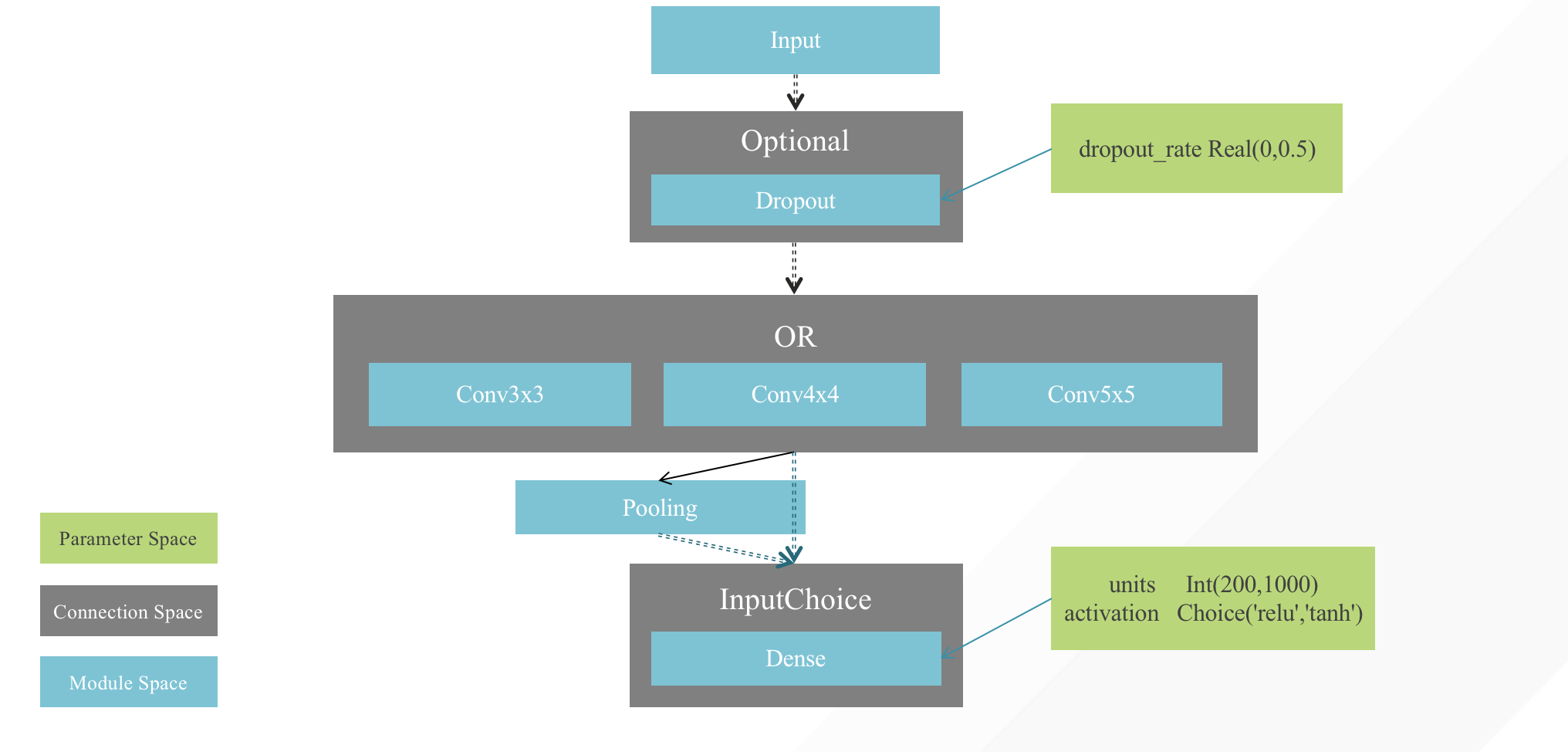

### Illustration of the Search Space

- +

+

+## What's NEW !

+

+- **New feature:** [Multi-objectives optimization support](https://hypernets.readthedocs.io/en/latest/searchers.html#multi-objective-optimization)

+- **New feature:** [Performance and model complexity measurement metrics](https://github.com/DataCanvasIO/HyperGBM/blob/main/hypergbm/examples/66.Objectives_example.ipynb)

+- **New feature:** [Distributed computing](https://hypergbm.readthedocs.io/en/latest/example_dask.html) and [GPU acceleration](https://hypergbm.readthedocs.io/en/latest/example_cuml.html) base on computational abstraction layer

+

## Installation

@@ -68,7 +75,7 @@ pip install hypernets[all]

```

-***Verify installation***:

+To ***Verify*** your installation:

```bash

python -m hypernets.examples.smoke_testing

```

@@ -77,18 +84,6 @@ python -m hypernets.examples.smoke_testing

* [A Brief Tutorial for Developing AutoML Tools with Hypernets](https://github.com/BochenLv/knn_toy_model/blob/main/Introduction.md)

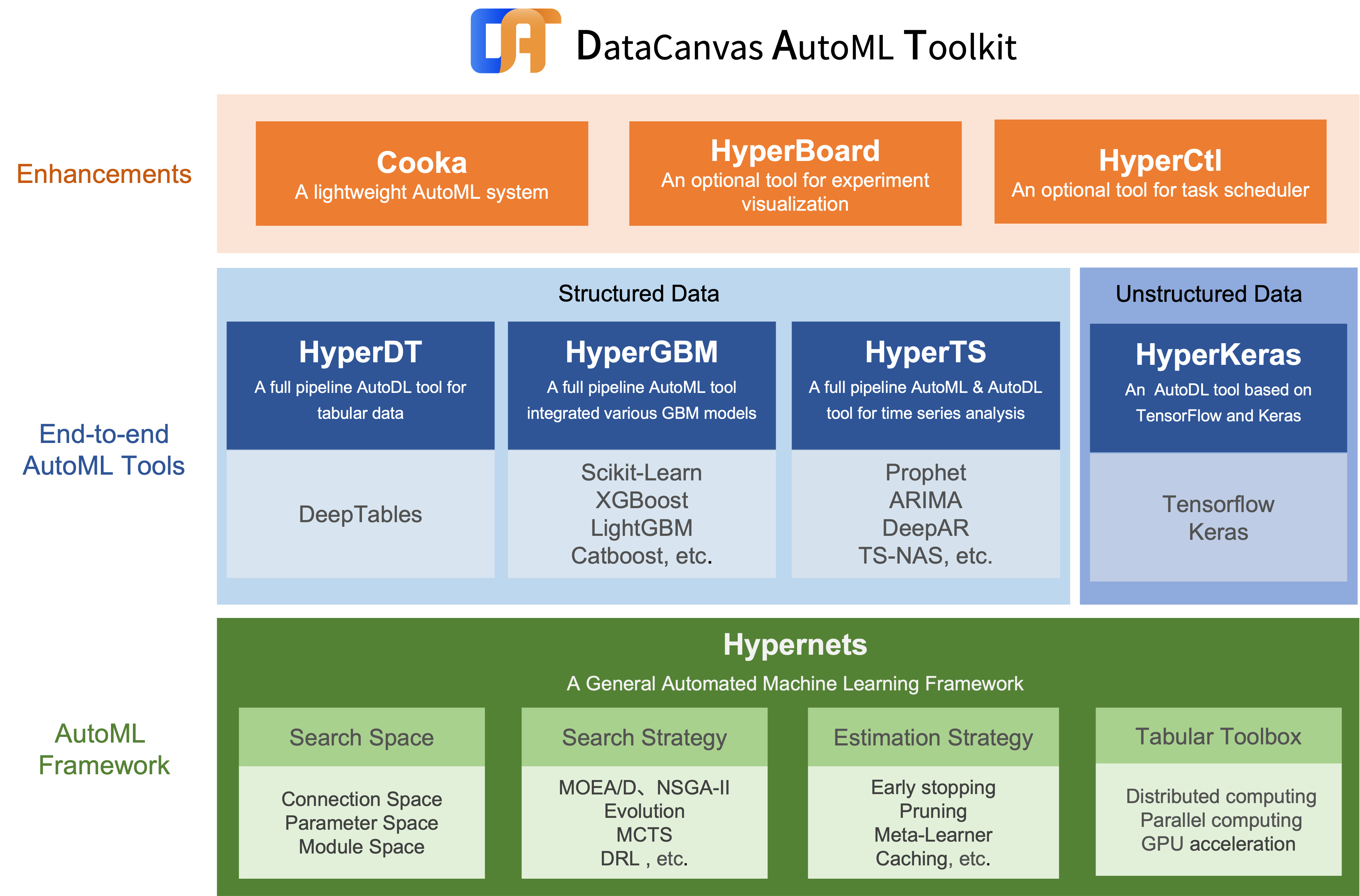

-## Hypernets related projects

-* [Hypernets](https://github.com/DataCanvasIO/Hypernets): A general automated machine learning (AutoML) framework.

-* [HyperGBM](https://github.com/DataCanvasIO/HyperGBM): A full pipeline AutoML tool integrated various GBM models.

-* [HyperDT/DeepTables](https://github.com/DataCanvasIO/DeepTables): An AutoDL tool for tabular data.

-* [HyperTS](https://github.com/DataCanvasIO/HyperTS): A full pipeline AutoML&AutoDL tool for time series datasets.

-* [HyperKeras](https://github.com/DataCanvasIO/HyperKeras): An AutoDL tool for Neural Architecture Search and Hyperparameter Optimization on Tensorflow and Keras.

-* [HyperBoard](https://github.com/DataCanvasIO/HyperBoard): A visualization tool for Hypernets.

-* [Cooka](https://github.com/DataCanvasIO/Cooka): Lightweight interactive AutoML system.

-

-

-

-

## Documents

* [Overview](https://hypernets.readthedocs.io/en/latest/overview.html)

* [QuickStart](https://hypernets.readthedocs.io/en/latest/quick_start.html)

@@ -102,5 +97,36 @@ python -m hypernets.examples.smoke_testing

* [Define An ENAS Micro Search Space](https://hypernets.readthedocs.io/en/latest/nas.html#define-an-enas-micro-search-space)

+## Hypernets related projects

+* [Hypernets](https://github.com/DataCanvasIO/Hypernets): A general automated machine learning (AutoML) framework.

+* [HyperGBM](https://github.com/DataCanvasIO/HyperGBM): A full pipeline AutoML tool integrated various GBM models.

+* [HyperDT/DeepTables](https://github.com/DataCanvasIO/DeepTables): An AutoDL tool for tabular data.

+* [HyperTS](https://github.com/DataCanvasIO/HyperTS): A full pipeline AutoML&AutoDL tool for time series datasets.

+* [HyperKeras](https://github.com/DataCanvasIO/HyperKeras): An AutoDL tool for Neural Architecture Search and Hyperparameter Optimization on Tensorflow and Keras.

+* [HyperBoard](https://github.com/DataCanvasIO/HyperBoard): A visualization tool for Hypernets.

+* [Cooka](https://github.com/DataCanvasIO/Cooka): Lightweight interactive AutoML system.

+

+

+

+

+## Citation

+

+If you use Hypernets in your research, please cite us as follows:

+

+ Jian Yang, Xuefeng Li, Haifeng Wu.

+ **Hypernets: A General Automated Machine Learning Framework.** https://github.com/DataCanvasIO/Hypernets. 2020. Version 0.2.x.

+

+BibTex:

+

+```

+@misc{hypernets,

+ author={Jian Yang, Xuefeng Li, Haifeng Wu},

+ title={{Hypernets}: { A General Automated Machine Learning Framework}},

+ howpublished={https://github.com/DataCanvasIO/Hypernets},

+ note={Version 0.2.x},

+ year={2020}

+}

+```

+

## DataCanvas

Hypernets is an open source project created by [DataCanvas](https://www.datacanvas.com/).

diff --git a/docs/source/hypernets.conf.rst b/docs/source/hypernets.conf.rst

new file mode 100644

index 00000000..020c7e9a

--- /dev/null

+++ b/docs/source/hypernets.conf.rst

@@ -0,0 +1,10 @@

+hypernets.conf package

+======================

+

+Module contents

+---------------

+

+.. automodule:: hypernets.conf

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.core.rst b/docs/source/hypernets.core.rst

new file mode 100644

index 00000000..db8eb2fe

--- /dev/null

+++ b/docs/source/hypernets.core.rst

@@ -0,0 +1,125 @@

+hypernets.core package

+======================

+

+Submodules

+----------

+

+hypernets.core.callbacks module

+-------------------------------

+

+.. automodule:: hypernets.core.callbacks

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.core.config module

+----------------------------

+

+.. automodule:: hypernets.core.config

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.core.context module

+-----------------------------

+

+.. automodule:: hypernets.core.context

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.core.dispatcher module

+--------------------------------

+

+.. automodule:: hypernets.core.dispatcher

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.core.meta\_learner module

+-----------------------------------

+

+.. automodule:: hypernets.core.meta_learner

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.core.mutables module

+------------------------------

+

+.. automodule:: hypernets.core.mutables

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.core.objective module

+-------------------------------

+

+.. automodule:: hypernets.core.objective

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.core.ops module

+-------------------------

+

+.. automodule:: hypernets.core.ops

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.core.pareto module

+----------------------------

+

+.. automodule:: hypernets.core.pareto

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.core.random\_state module

+-----------------------------------

+

+.. automodule:: hypernets.core.random_state

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.core.search\_space module

+-----------------------------------

+

+.. automodule:: hypernets.core.search_space

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.core.searcher module

+------------------------------

+

+.. automodule:: hypernets.core.searcher

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.core.stateful module

+------------------------------

+

+.. automodule:: hypernets.core.stateful

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.core.trial module

+---------------------------

+

+.. automodule:: hypernets.core.trial

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.core

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.discriminators.rst b/docs/source/hypernets.discriminators.rst

new file mode 100644

index 00000000..18b9402f

--- /dev/null

+++ b/docs/source/hypernets.discriminators.rst

@@ -0,0 +1,21 @@

+hypernets.discriminators package

+================================

+

+Submodules

+----------

+

+hypernets.discriminators.percentile module

+------------------------------------------

+

+.. automodule:: hypernets.discriminators.percentile

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.discriminators

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.dispatchers.cluster.grpc.proto.rst b/docs/source/hypernets.dispatchers.cluster.grpc.proto.rst

new file mode 100644

index 00000000..97c729e4

--- /dev/null

+++ b/docs/source/hypernets.dispatchers.cluster.grpc.proto.rst

@@ -0,0 +1,29 @@

+hypernets.dispatchers.cluster.grpc.proto package

+================================================

+

+Submodules

+----------

+

+hypernets.dispatchers.cluster.grpc.proto.spec\_pb2 module

+---------------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.cluster.grpc.proto.spec_pb2

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.dispatchers.cluster.grpc.proto.spec\_pb2\_grpc module

+---------------------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.cluster.grpc.proto.spec_pb2_grpc

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.dispatchers.cluster.grpc.proto

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.dispatchers.cluster.grpc.rst b/docs/source/hypernets.dispatchers.cluster.grpc.rst

new file mode 100644

index 00000000..b3aa6652

--- /dev/null

+++ b/docs/source/hypernets.dispatchers.cluster.grpc.rst

@@ -0,0 +1,37 @@

+hypernets.dispatchers.cluster.grpc package

+==========================================

+

+Subpackages

+-----------

+

+.. toctree::

+ :maxdepth: 4

+

+ hypernets.dispatchers.cluster.grpc.proto

+

+Submodules

+----------

+

+hypernets.dispatchers.cluster.grpc.search\_driver\_client module

+----------------------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.cluster.grpc.search_driver_client

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.dispatchers.cluster.grpc.search\_driver\_service module

+-----------------------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.cluster.grpc.search_driver_service

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.dispatchers.cluster.grpc

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.dispatchers.cluster.rst b/docs/source/hypernets.dispatchers.cluster.rst

new file mode 100644

index 00000000..67369754

--- /dev/null

+++ b/docs/source/hypernets.dispatchers.cluster.rst

@@ -0,0 +1,45 @@

+hypernets.dispatchers.cluster package

+=====================================

+

+Subpackages

+-----------

+

+.. toctree::

+ :maxdepth: 4

+

+ hypernets.dispatchers.cluster.grpc

+

+Submodules

+----------

+

+hypernets.dispatchers.cluster.cluster module

+--------------------------------------------

+

+.. automodule:: hypernets.dispatchers.cluster.cluster

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.dispatchers.cluster.driver\_dispatcher module

+-------------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.cluster.driver_dispatcher

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.dispatchers.cluster.executor\_dispatcher module

+---------------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.cluster.executor_dispatcher

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.dispatchers.cluster

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.dispatchers.dask.rst b/docs/source/hypernets.dispatchers.dask.rst

new file mode 100644

index 00000000..df2c83ba

--- /dev/null

+++ b/docs/source/hypernets.dispatchers.dask.rst

@@ -0,0 +1,21 @@

+hypernets.dispatchers.dask package

+==================================

+

+Submodules

+----------

+

+hypernets.dispatchers.dask.dask\_dispatcher module

+--------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.dask.dask_dispatcher

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.dispatchers.dask

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.dispatchers.predict.grpc.proto.rst b/docs/source/hypernets.dispatchers.predict.grpc.proto.rst

new file mode 100644

index 00000000..18435466

--- /dev/null

+++ b/docs/source/hypernets.dispatchers.predict.grpc.proto.rst

@@ -0,0 +1,29 @@

+hypernets.dispatchers.predict.grpc.proto package

+================================================

+

+Submodules

+----------

+

+hypernets.dispatchers.predict.grpc.proto.predict\_pb2 module

+------------------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.predict.grpc.proto.predict_pb2

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.dispatchers.predict.grpc.proto.predict\_pb2\_grpc module

+------------------------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.predict.grpc.proto.predict_pb2_grpc

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.dispatchers.predict.grpc.proto

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.dispatchers.predict.grpc.rst b/docs/source/hypernets.dispatchers.predict.grpc.rst

new file mode 100644

index 00000000..8da2e06f

--- /dev/null

+++ b/docs/source/hypernets.dispatchers.predict.grpc.rst

@@ -0,0 +1,37 @@

+hypernets.dispatchers.predict.grpc package

+==========================================

+

+Subpackages

+-----------

+

+.. toctree::

+ :maxdepth: 4

+

+ hypernets.dispatchers.predict.grpc.proto

+

+Submodules

+----------

+

+hypernets.dispatchers.predict.grpc.predict\_client module

+---------------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.predict.grpc.predict_client

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.dispatchers.predict.grpc.predict\_service module

+----------------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.predict.grpc.predict_service

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.dispatchers.predict.grpc

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.dispatchers.predict.rst b/docs/source/hypernets.dispatchers.predict.rst

new file mode 100644

index 00000000..9b7283c7

--- /dev/null

+++ b/docs/source/hypernets.dispatchers.predict.rst

@@ -0,0 +1,29 @@

+hypernets.dispatchers.predict package

+=====================================

+

+Subpackages

+-----------

+

+.. toctree::

+ :maxdepth: 4

+

+ hypernets.dispatchers.predict.grpc

+

+Submodules

+----------

+

+hypernets.dispatchers.predict.predict\_helper module

+----------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.predict.predict_helper

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.dispatchers.predict

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.dispatchers.process.grpc.proto.rst b/docs/source/hypernets.dispatchers.process.grpc.proto.rst

new file mode 100644

index 00000000..afa9c441

--- /dev/null

+++ b/docs/source/hypernets.dispatchers.process.grpc.proto.rst

@@ -0,0 +1,29 @@

+hypernets.dispatchers.process.grpc.proto package

+================================================

+

+Submodules

+----------

+

+hypernets.dispatchers.process.grpc.proto.proc\_pb2 module

+---------------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.process.grpc.proto.proc_pb2

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.dispatchers.process.grpc.proto.proc\_pb2\_grpc module

+---------------------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.process.grpc.proto.proc_pb2_grpc

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.dispatchers.process.grpc.proto

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.dispatchers.process.grpc.rst b/docs/source/hypernets.dispatchers.process.grpc.rst

new file mode 100644

index 00000000..b862d65b

--- /dev/null

+++ b/docs/source/hypernets.dispatchers.process.grpc.rst

@@ -0,0 +1,37 @@

+hypernets.dispatchers.process.grpc package

+==========================================

+

+Subpackages

+-----------

+

+.. toctree::

+ :maxdepth: 4

+

+ hypernets.dispatchers.process.grpc.proto

+

+Submodules

+----------

+

+hypernets.dispatchers.process.grpc.process\_broker\_client module

+-----------------------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.process.grpc.process_broker_client

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.dispatchers.process.grpc.process\_broker\_service module

+------------------------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.process.grpc.process_broker_service

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.dispatchers.process.grpc

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.dispatchers.process.rst b/docs/source/hypernets.dispatchers.process.rst

new file mode 100644

index 00000000..fa935c08

--- /dev/null

+++ b/docs/source/hypernets.dispatchers.process.rst

@@ -0,0 +1,45 @@

+hypernets.dispatchers.process package

+=====================================

+

+Subpackages

+-----------

+

+.. toctree::

+ :maxdepth: 4

+

+ hypernets.dispatchers.process.grpc

+

+Submodules

+----------

+

+hypernets.dispatchers.process.grpc\_process module

+--------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.process.grpc_process

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.dispatchers.process.local\_process module

+---------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.process.local_process

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.dispatchers.process.ssh\_process module

+-------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.process.ssh_process

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.dispatchers.process

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.dispatchers.rst b/docs/source/hypernets.dispatchers.rst

new file mode 100644

index 00000000..96d7d805

--- /dev/null

+++ b/docs/source/hypernets.dispatchers.rst

@@ -0,0 +1,72 @@

+hypernets.dispatchers package

+=============================

+

+Subpackages

+-----------

+

+.. toctree::

+ :maxdepth: 4

+

+ hypernets.dispatchers.cluster

+ hypernets.dispatchers.dask

+ hypernets.dispatchers.predict

+ hypernets.dispatchers.process

+

+Submodules

+----------

+

+hypernets.dispatchers.cfg module

+--------------------------------

+

+.. automodule:: hypernets.dispatchers.cfg

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.dispatchers.in\_process\_dispatcher module

+----------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.in_process_dispatcher

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.dispatchers.run module

+--------------------------------

+

+.. automodule:: hypernets.dispatchers.run

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.dispatchers.run\_broker module

+----------------------------------------

+

+.. automodule:: hypernets.dispatchers.run_broker

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.dispatchers.run\_predict module

+-----------------------------------------

+

+.. automodule:: hypernets.dispatchers.run_predict

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.dispatchers.run\_predict\_server module

+-------------------------------------------------

+

+.. automodule:: hypernets.dispatchers.run_predict_server

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.dispatchers

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.examples.rst b/docs/source/hypernets.examples.rst

new file mode 100644

index 00000000..d46034cc

--- /dev/null

+++ b/docs/source/hypernets.examples.rst

@@ -0,0 +1,29 @@

+hypernets.examples package

+==========================

+

+Submodules

+----------

+

+hypernets.examples.plain\_model module

+--------------------------------------

+

+.. automodule:: hypernets.examples.plain_model

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.examples.smoke\_testing module

+----------------------------------------

+

+.. automodule:: hypernets.examples.smoke_testing

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.examples

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.experiment.rst b/docs/source/hypernets.experiment.rst

new file mode 100644

index 00000000..8229a4f7

--- /dev/null

+++ b/docs/source/hypernets.experiment.rst

@@ -0,0 +1,53 @@

+hypernets.experiment package

+============================

+

+Submodules

+----------

+

+hypernets.experiment.cfg module

+-------------------------------

+

+.. automodule:: hypernets.experiment.cfg

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.experiment.compete module

+-----------------------------------

+

+.. automodule:: hypernets.experiment.compete

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.experiment.general module

+-----------------------------------

+

+.. automodule:: hypernets.experiment.general

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.experiment.job module

+-------------------------------

+

+.. automodule:: hypernets.experiment.job

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.experiment.report module

+----------------------------------

+

+.. automodule:: hypernets.experiment.report

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.experiment

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.hyperctl.rst b/docs/source/hypernets.hyperctl.rst

new file mode 100644

index 00000000..72cedc99

--- /dev/null

+++ b/docs/source/hypernets.hyperctl.rst

@@ -0,0 +1,93 @@

+hypernets.hyperctl package

+==========================

+

+Submodules

+----------

+

+hypernets.hyperctl.api module

+-----------------------------

+

+.. automodule:: hypernets.hyperctl.api

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.hyperctl.appliation module

+------------------------------------

+

+.. automodule:: hypernets.hyperctl.appliation

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.hyperctl.batch module

+-------------------------------

+

+.. automodule:: hypernets.hyperctl.batch

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.hyperctl.callbacks module

+-----------------------------------

+

+.. automodule:: hypernets.hyperctl.callbacks

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.hyperctl.cli module

+-----------------------------

+

+.. automodule:: hypernets.hyperctl.cli

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.hyperctl.consts module

+--------------------------------

+

+.. automodule:: hypernets.hyperctl.consts

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.hyperctl.executor module

+----------------------------------

+

+.. automodule:: hypernets.hyperctl.executor

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.hyperctl.scheduler module

+-----------------------------------

+

+.. automodule:: hypernets.hyperctl.scheduler

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.hyperctl.server module

+--------------------------------

+

+.. automodule:: hypernets.hyperctl.server

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.hyperctl.utils module

+-------------------------------

+

+.. automodule:: hypernets.hyperctl.utils

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.hyperctl

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.model.rst b/docs/source/hypernets.model.rst

new file mode 100644

index 00000000..f6fae565

--- /dev/null

+++ b/docs/source/hypernets.model.rst

@@ -0,0 +1,37 @@

+hypernets.model package

+=======================

+

+Submodules

+----------

+

+hypernets.model.estimator module

+--------------------------------

+

+.. automodule:: hypernets.model.estimator

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.model.hyper\_model module

+-----------------------------------

+

+.. automodule:: hypernets.model.hyper_model

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.model.objectives module

+---------------------------------

+

+.. automodule:: hypernets.model.objectives

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.model

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.pipeline.rst b/docs/source/hypernets.pipeline.rst

new file mode 100644

index 00000000..aee7092f

--- /dev/null

+++ b/docs/source/hypernets.pipeline.rst

@@ -0,0 +1,29 @@

+hypernets.pipeline package

+==========================

+

+Submodules

+----------

+

+hypernets.pipeline.base module

+------------------------------

+

+.. automodule:: hypernets.pipeline.base

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.pipeline.transformers module

+--------------------------------------

+

+.. automodule:: hypernets.pipeline.transformers

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.pipeline

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.rst b/docs/source/hypernets.rst

new file mode 100644

index 00000000..8e6ccde4

--- /dev/null

+++ b/docs/source/hypernets.rst

@@ -0,0 +1,20 @@

+hypernets package

+=================

+

+Subpackages

+-----------

+

+.. toctree::

+ :maxdepth: 4

+

+ hypernets.experiment

+ hypernets.searchers

+

+

+Module contents

+---------------

+

+.. automodule:: hypernets

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.searchers.rst b/docs/source/hypernets.searchers.rst

new file mode 100644

index 00000000..3647d328

--- /dev/null

+++ b/docs/source/hypernets.searchers.rst

@@ -0,0 +1,93 @@

+hypernets.searchers package

+===========================

+

+Submodules

+----------

+

+hypernets.searchers.evolution\_searcher module

+----------------------------------------------

+

+.. automodule:: hypernets.searchers.evolution_searcher

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.searchers.genetic module

+----------------------------------

+

+.. automodule:: hypernets.searchers.genetic

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.searchers.grid\_searcher module

+-----------------------------------------

+

+.. automodule:: hypernets.searchers.grid_searcher

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.searchers.mcts\_core module

+-------------------------------------

+

+.. automodule:: hypernets.searchers.mcts_core

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.searchers.mcts\_searcher module

+-----------------------------------------

+

+.. automodule:: hypernets.searchers.mcts_searcher

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.searchers.moead\_searcher module

+------------------------------------------

+

+.. automodule:: hypernets.searchers.moead_searcher

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.searchers.moo module

+------------------------------

+

+.. automodule:: hypernets.searchers.moo

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.searchers.nsga\_searcher module

+-----------------------------------------

+

+.. automodule:: hypernets.searchers.nsga_searcher

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.searchers.playback\_searcher module

+---------------------------------------------

+

+.. automodule:: hypernets.searchers.playback_searcher

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.searchers.random\_searcher module

+-------------------------------------------

+

+.. automodule:: hypernets.searchers.random_searcher

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.searchers

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.server.rst b/docs/source/hypernets.server.rst

new file mode 100644

index 00000000..96125860

--- /dev/null

+++ b/docs/source/hypernets.server.rst

@@ -0,0 +1,10 @@

+hypernets.server package

+========================

+

+Module contents

+---------------

+

+.. automodule:: hypernets.server

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.tabular.cuml_ex.rst b/docs/source/hypernets.tabular.cuml_ex.rst

new file mode 100644

index 00000000..0a71ce3d

--- /dev/null

+++ b/docs/source/hypernets.tabular.cuml_ex.rst

@@ -0,0 +1,10 @@

+hypernets.tabular.cuml\_ex package

+==================================

+

+Module contents

+---------------

+

+.. automodule:: hypernets.tabular.cuml_ex

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.tabular.dask_ex.rst b/docs/source/hypernets.tabular.dask_ex.rst

new file mode 100644

index 00000000..59c8994d

--- /dev/null

+++ b/docs/source/hypernets.tabular.dask_ex.rst

@@ -0,0 +1,10 @@

+hypernets.tabular.dask\_ex package

+==================================

+

+Module contents

+---------------

+

+.. automodule:: hypernets.tabular.dask_ex

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.tabular.datasets.rst b/docs/source/hypernets.tabular.datasets.rst

new file mode 100644

index 00000000..2f145dbd

--- /dev/null

+++ b/docs/source/hypernets.tabular.datasets.rst

@@ -0,0 +1,21 @@

+hypernets.tabular.datasets package

+==================================

+

+Submodules

+----------

+

+hypernets.tabular.datasets.dsutils module

+-----------------------------------------

+

+.. automodule:: hypernets.tabular.datasets.dsutils

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.tabular.datasets

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.tabular.ensemble.rst b/docs/source/hypernets.tabular.ensemble.rst

new file mode 100644

index 00000000..25373ff0

--- /dev/null

+++ b/docs/source/hypernets.tabular.ensemble.rst

@@ -0,0 +1,37 @@

+hypernets.tabular.ensemble package

+==================================

+

+Submodules

+----------

+

+hypernets.tabular.ensemble.base\_ensemble module

+------------------------------------------------

+

+.. automodule:: hypernets.tabular.ensemble.base_ensemble

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.tabular.ensemble.stacking module

+------------------------------------------

+

+.. automodule:: hypernets.tabular.ensemble.stacking

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.tabular.ensemble.voting module

+----------------------------------------

+

+.. automodule:: hypernets.tabular.ensemble.voting

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.tabular.ensemble

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.tabular.evaluator.rst b/docs/source/hypernets.tabular.evaluator.rst

new file mode 100644

index 00000000..4279e5b9

--- /dev/null

+++ b/docs/source/hypernets.tabular.evaluator.rst

@@ -0,0 +1,61 @@

+hypernets.tabular.evaluator package

+===================================

+

+Submodules

+----------

+

+hypernets.tabular.evaluator.auto\_sklearn module

+------------------------------------------------

+

+.. automodule:: hypernets.tabular.evaluator.auto_sklearn

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.tabular.evaluator.h2o module

+--------------------------------------

+

+.. automodule:: hypernets.tabular.evaluator.h2o

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.tabular.evaluator.hyperdt module

+------------------------------------------

+

+.. automodule:: hypernets.tabular.evaluator.hyperdt

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.tabular.evaluator.hypergbm module

+-------------------------------------------

+

+.. automodule:: hypernets.tabular.evaluator.hypergbm

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.tabular.evaluator.tests module

+----------------------------------------

+

+.. automodule:: hypernets.tabular.evaluator.tests

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.tabular.evaluator.tpot module

+---------------------------------------

+

+.. automodule:: hypernets.tabular.evaluator.tpot

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.tabular.evaluator

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.tabular.feature_generators.rst b/docs/source/hypernets.tabular.feature_generators.rst

new file mode 100644

index 00000000..92bc88bf

--- /dev/null

+++ b/docs/source/hypernets.tabular.feature_generators.rst

@@ -0,0 +1,10 @@

+hypernets.tabular.feature\_generators package

+=============================================

+

+Module contents

+---------------

+

+.. automodule:: hypernets.tabular.feature_generators

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.tabular.lifelong_learning.rst b/docs/source/hypernets.tabular.lifelong_learning.rst

new file mode 100644

index 00000000..f41ae683

--- /dev/null

+++ b/docs/source/hypernets.tabular.lifelong_learning.rst

@@ -0,0 +1,10 @@

+hypernets.tabular.lifelong\_learning package

+============================================

+

+Module contents

+---------------

+

+.. automodule:: hypernets.tabular.lifelong_learning

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.tabular.rst b/docs/source/hypernets.tabular.rst

new file mode 100644

index 00000000..23454375

--- /dev/null

+++ b/docs/source/hypernets.tabular.rst

@@ -0,0 +1,139 @@

+hypernets.tabular package

+=========================

+

+Subpackages

+-----------

+

+.. toctree::

+ :maxdepth: 4

+

+ hypernets.tabular.cuml_ex

+ hypernets.tabular.dask_ex

+ hypernets.tabular.datasets

+ hypernets.tabular.ensemble

+ hypernets.tabular.evaluator

+ hypernets.tabular.feature_generators

+ hypernets.tabular.lifelong_learning

+

+Submodules

+----------

+

+hypernets.tabular.cache module

+------------------------------

+

+.. automodule:: hypernets.tabular.cache

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.tabular.cfg module

+----------------------------

+

+.. automodule:: hypernets.tabular.cfg

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.tabular.collinearity module

+-------------------------------------

+

+.. automodule:: hypernets.tabular.collinearity

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.tabular.column\_selector module

+-----------------------------------------

+

+.. automodule:: hypernets.tabular.column_selector

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.tabular.data\_cleaner module

+--------------------------------------

+

+.. automodule:: hypernets.tabular.data_cleaner

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.tabular.data\_hasher module

+-------------------------------------

+

+.. automodule:: hypernets.tabular.data_hasher

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.tabular.dataframe\_mapper module

+------------------------------------------

+

+.. automodule:: hypernets.tabular.dataframe_mapper

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.tabular.drift\_detection module

+-----------------------------------------

+

+.. automodule:: hypernets.tabular.drift_detection

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.tabular.estimator\_detector module

+--------------------------------------------

+

+.. automodule:: hypernets.tabular.estimator_detector

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.tabular.metrics module

+--------------------------------

+

+.. automodule:: hypernets.tabular.metrics

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.tabular.persistence module

+------------------------------------

+

+.. automodule:: hypernets.tabular.persistence

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.tabular.pseudo\_labeling module

+-----------------------------------------

+

+.. automodule:: hypernets.tabular.pseudo_labeling

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.tabular.sklearn\_ex module

+------------------------------------

+

+.. automodule:: hypernets.tabular.sklearn_ex

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.tabular.toolbox module

+--------------------------------

+

+.. automodule:: hypernets.tabular.toolbox

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.tabular

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/hypernets.utils.rst b/docs/source/hypernets.utils.rst

new file mode 100644

index 00000000..0b8c93ed

--- /dev/null

+++ b/docs/source/hypernets.utils.rst

@@ -0,0 +1,61 @@

+hypernets.utils package

+=======================

+

+Submodules

+----------

+

+hypernets.utils.common module

+-----------------------------

+

+.. automodule:: hypernets.utils.common

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.utils.const module

+----------------------------

+

+.. automodule:: hypernets.utils.const

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.utils.df\_utils module

+--------------------------------

+

+.. automodule:: hypernets.utils.df_utils

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.utils.logging module

+------------------------------

+

+.. automodule:: hypernets.utils.logging

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.utils.param\_tuning module

+------------------------------------

+

+.. automodule:: hypernets.utils.param_tuning

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+hypernets.utils.ssh\_utils module

+---------------------------------

+

+.. automodule:: hypernets.utils.ssh_utils

+ :members:

+ :undoc-members:

+ :show-inheritance:

+

+Module contents

+---------------

+

+.. automodule:: hypernets.utils

+ :members:

+ :undoc-members:

+ :show-inheritance:

diff --git a/docs/source/images/datacanvas_automl_toolkit.png b/docs/source/images/DAT2.1.png

similarity index 100%

rename from docs/source/images/datacanvas_automl_toolkit.png

rename to docs/source/images/DAT2.1.png

diff --git a/docs/source/images/DAT_latest.png b/docs/source/images/DAT_latest.png

new file mode 100644

index 00000000..8a0835aa

Binary files /dev/null and b/docs/source/images/DAT_latest.png differ

diff --git a/docs/source/images/DAT_logo.png b/docs/source/images/DAT_logo.png

deleted file mode 100644

index fe1ddee9..00000000

Binary files a/docs/source/images/DAT_logo.png and /dev/null differ

diff --git a/docs/source/images/Hypernets.png b/docs/source/images/Hypernets.png

index 66a88ad6..5857508c 100644

Binary files a/docs/source/images/Hypernets.png and b/docs/source/images/Hypernets.png differ

diff --git a/docs/source/images/crowding_distance.png b/docs/source/images/crowding_distance.png

new file mode 100644

index 00000000..24db73ca

Binary files /dev/null and b/docs/source/images/crowding_distance.png differ

diff --git a/docs/source/images/moead_pbi.png b/docs/source/images/moead_pbi.png

new file mode 100644

index 00000000..139dffd6

Binary files /dev/null and b/docs/source/images/moead_pbi.png differ

diff --git a/docs/source/images/nsga2_procedure.png b/docs/source/images/nsga2_procedure.png

new file mode 100644

index 00000000..6d467b75

Binary files /dev/null and b/docs/source/images/nsga2_procedure.png differ

diff --git a/docs/source/images/r_dominance_sorting.png b/docs/source/images/r_dominance_sorting.png

new file mode 100644

index 00000000..b1d70e4f

Binary files /dev/null and b/docs/source/images/r_dominance_sorting.png differ

diff --git a/docs/source/index.rst b/docs/source/index.rst

index d4b522c3..acd0deb8 100644

--- a/docs/source/index.rst

+++ b/docs/source/index.rst

@@ -18,7 +18,8 @@ Hypernets is a general AutoML framework that can meet various needs such as feat

Neural Architecture Search

Experiment

Hyperctl

- Release Notes

+ API

+ Release Notes

FAQ

Indices and tables

diff --git a/docs/source/modules.rst b/docs/source/modules.rst

new file mode 100644

index 00000000..2a4d260f

--- /dev/null

+++ b/docs/source/modules.rst

@@ -0,0 +1,7 @@

+hypernets

+=========

+

+.. toctree::

+ :maxdepth: 4

+

+ hypernets

diff --git a/docs/source/release_note_025.md b/docs/source/release_note_025.rst

similarity index 97%

rename from docs/source/release_note_025.md

rename to docs/source/release_note_025.rst

index 998b4ddb..d9a980ea 100644

--- a/docs/source/release_note_025.md

+++ b/docs/source/release_note_025.rst

@@ -1,5 +1,5 @@

Version 0.2.5

-================

+-------------

We add a few new features to this version:

diff --git a/docs/source/release_note_030.rst b/docs/source/release_note_030.rst

new file mode 100644

index 00000000..f1c6f033

--- /dev/null

+++ b/docs/source/release_note_030.rst

@@ -0,0 +1,19 @@

+Version 0.3.0

+-------------

+

+We add a few new features to this version:

+

+* Multi-objectives optimization

+

+ * optimization algorithm

+ - add MOEA/D(Multiobjective Evolutionary Algorithm Based on Decomposition)

+ - add Tchebycheff, Weighted Sum, Penalty-based boundary intersection approach(PBI) decompose approachs

+ - add shuffle crossover, uniform crossover, single point crossover strategies for GA based algorithms

+ - automatically normalize objectives of different dimensions

+ - automatically convert maximization problem to minimization problem

+ - add NSGA-II(Non-dominated Sorting Genetic Algorithm)

+ - add R-NSGA-II(A new dominance relation for multicriteria decision making)

+

+ * builtin objectives

+ - number of features

+ - prediction performance

diff --git a/docs/source/release_notes.rst b/docs/source/release_notes.rst

new file mode 100644

index 00000000..30a08786

--- /dev/null

+++ b/docs/source/release_notes.rst

@@ -0,0 +1,10 @@

+Release Notes

+=============

+

+Releasing history:

+

+.. toctree::

+ :maxdepth: 1

+

+ v0.2.5

+ v0.3.0

diff --git a/docs/source/searchers.md b/docs/source/searchers.md

deleted file mode 100644

index 227c17b4..00000000

--- a/docs/source/searchers.md

+++ /dev/null

@@ -1,76 +0,0 @@

-# Searchers

-

-## MCTSSearcher

-

-Monte-Carlo Tree Search (MCTS) extends the celebrated Multi-armed Bandit algorithm to tree-structured search spaces. The MCTS algorithm iterates over four phases: selection, expansion, playout and backpropagation.

-

-* Selection: In each node of the tree, the child node is selected after a Multi-armed Bandit strategy, e.g. the UCT (Upper Confidence bound applied to Trees) algorithm.

-

-* Expansion: The algorithm adds one or more nodes to the tree. This node corresponds to the first encountered position that was not added in the tree.

-

-* Playout: When reaching the limits of the visited tree, a roll-out strategy is used to select the options until reaching a terminal node and computing the associated

-reward.

-

-* Backpropagation: The reward value is propagated back, i.e. it is used to update the value associated to all nodes along the visited path up to the root node.

-

-**Code example**

-```

-from hypernets.searchers import MCTSSearcher

-

-searcher = MCTSSearcher(search_space_fn, use_meta_learner=False, max_node_space=10, candidates_size=10, optimize_direction='max')

-```

-

-**Required Parameters**

-* *space_fn*: callable, A search space function which when called returns a `HyperSpace` instance.

-

-**Optional Parameters**

-- *policy*: hypernets.searchers.mcts_core.BasePolicy, (default=None), The policy for *Selection* and *Backpropagation* phases, `UCT` by default.

-- *max_node_space*: int, (default=10), Maximum space for node expansion

-- *use_meta_learner*: bool, (default=True), Meta-learner aims to evaluate the performance of unseen samples based on previously evaluated samples. It provides a practical solution to accurately estimate a search branch with many simulations without involving the actual training.

-- *candidates_size*: int, (default=10), The number of samples for the meta-learner to evaluate candidate paths when roll out

-- *optimize_direction*: 'min' or 'max', (default='min'), Whether the search process is approaching the maximum or minimum reward value.

-- *space_sample_validation_fn*: callable or None, (default=None), Used to verify the validity of samples from the search space, and can be used to add specific constraint rules to the search space to reduce the size of the space.

-

-

-## EvolutionSearcher

-

-Evolutionary algorithm (EA) is a subset of evolutionary computation, a generic population-based metaheuristic optimization algorithm. An EA uses mechanisms inspired by biological evolution, such as reproduction, mutation, recombination, and selection. Candidate solutions to the optimization problem play the role of individuals in a population, and the fitness function determines the quality of the solutions (see also loss function). Evolution of the population then takes place after the repeated application of the above operators.

-

-**Code example**

-```

-from hypernets.searchers import EvolutionSearcher

-

-searcher = EvolutionSearcher(search_space_fn, population_size=20, sample_size=5, optimize_direction='min')

-```

-

-**Required Parameters**

-- *space_fn*: callable, A search space function which when called returns a `HyperSpace` instance

-- *population_size*: int, Size of population

-- *sample_size*: int, The number of parent candidates selected in each cycle of evolution

-

-**Optional Parameters**

-- *regularized*: bool, (default=False), Whether to enable regularized

-- *use_meta_learner*: bool, (default=True), Meta-learner aims to evaluate the performance of unseen samples based on previously evaluated samples. It provides a practical solution to accurately estimate a search branch with many simulations without involving the actual training.

-- *candidates_size*: int, (default=10), The number of samples for the meta-learner to evaluate candidate paths when roll out

-- *optimize_direction*: 'min' or 'max', (default='min'), Whether the search process is approaching the maximum or minimum reward value.

-- *space_sample_validation_fn*: callable or None, (default=None), Used to verify the validity of samples from the search space, and can be used to add specific constraint rules to the search space to reduce the size of the space.

-

-

-## RandomSearcher

-

-As its name suggests, Random Search uses random combinations of hyperparameters.

-

-**Code example**

-```

-from hypernets.searchers import RandomSearcher

-

-searcher = RandomSearcher(search_space_fn, optimize_direction='min')

-```

-

-**Required Parameters**

-- *space_fn*: callable, A search space function which when called returns a `HyperSpace` instance

-

-**Optional Parameters**

-- *optimize_direction*: 'min' or 'max', (default='min'), Whether the search process is approaching the maximum or minimum reward value.

-- *space_sample_validation_fn*: callable or None, (default=None), Used to verify the validity of samples from the search space, and can be used to add specific constraint rules to the search space to reduce the size of the space.

-

diff --git a/docs/source/searchers.rst b/docs/source/searchers.rst

new file mode 100644

index 00000000..70ec31af

--- /dev/null

+++ b/docs/source/searchers.rst

@@ -0,0 +1,268 @@

+=============

+Searchers

+=============

+

+

+Single-objective Optimization

+==============================

+

+MCTSSearcher

+------------

+

+Monte-Carlo Tree Search (MCTS) extends the celebrated Multi-armed Bandit algorithm to tree-structured search spaces. The MCTS algorithm iterates over four phases: selection, expansion, playout and backpropagation.

+

+* Selection: In each node of the tree, the child node is selected after a Multi-armed Bandit strategy, e.g. the UCT (Upper Confidence bound applied to Trees) algorithm.

+

+* Expansion: The algorithm adds one or more nodes to the tree. This node corresponds to the first encountered position that was not added in the tree.

+

+* Playout: When reaching the limits of the visited tree, a roll-out strategy is used to select the options until reaching a terminal node and computing the associated reward.

+

+* Backpropagation: The reward value is propagated back, i.e. it is used to update the value associated to all nodes along the visited path up to the root node.

+

+**Code example**

+

+.. code-block:: python

+ :linenos:

+

+ from hypernets.searchers import MCTSSearcher

+

+ searcher = MCTSSearcher(search_space_fn, use_meta_learner=False, max_node_space=10, candidates_size=10, optimize_direction='max')

+

+

+**Required Parameters**

+

+* *space_fn*: callable, A search space function which when called returns a ``HyperSpace`` instance.

+

+**Optional Parameters**

+

+- *policy*: hypernets.searchers.mcts_core.BasePolicy, (default=None), The policy for *Selection* and *Backpropagation* phases, ``UCT`` by default.

+- *max_node_space*: int, (default=10), Maximum space for node expansion

+- *use_meta_learner*: bool, (default=True), Meta-learner aims to evaluate the performance of unseen samples based on previously evaluated samples. It provides a practical solution to accurately estimate a search branch with many simulations without involving the actual training.

+- *candidates_size*: int, (default=10), The number of samples for the meta-learner to evaluate candidate paths when roll out

+- *optimize_direction*: 'min' or 'max', (default='min'), Whether the search process is approaching the maximum or minimum reward value.

+- *space_sample_validation_fn*: callable or None, (default=None), Used to verify the validity of samples from the search space, and can be used to add specific constraint rules to the search space to reduce the size of the space.

+

+

+EvolutionSearcher

+-----------------

+

+Evolutionary algorithm (EA) is a subset of evolutionary computation, a generic population-based metaheuristic optimization algorithm. An EA uses mechanisms inspired by biological evolution, such as reproduction, mutation, recombination, and selection. Candidate solutions to the optimization problem play the role of individuals in a population, and the fitness function determines the quality of the solutions (see also loss function). Evolution of the population then takes place after the repeated application of the above operators.

+

+**Code example**

+

+.. code-block:: python

+ :linenos:

+

+ from hypernets.searchers import EvolutionSearcher

+

+ searcher = EvolutionSearcher(search_space_fn, population_size=20, sample_size=5, optimize_direction='min')

+

+

+**Required Parameters**

+

+- *space_fn*: callable, A search space function which when called returns a ``HyperSpace`` instance

+- *population_size*: int, Size of population

+- *sample_size*: int, The number of parent candidates selected in each cycle of evolution

+

+**Optional Parameters**

+

+- *regularized*: bool, (default=False), Whether to enable regularized

+- *use_meta_learner*: bool, (default=True), Meta-learner aims to evaluate the performance of unseen samples based on previously evaluated samples. It provides a practical solution to accurately estimate a search branch with many simulations without involving the actual training.

+- *candidates_size*: int, (default=10), The number of samples for the meta-learner to evaluate candidate paths when roll out

+- *optimize_direction*: 'min' or 'max', (default='min'), Whether the search process is approaching the maximum or minimum reward value.

+- *space_sample_validation_fn*: callable or None, (default=None), Used to verify the validity of samples from the search space, and can be used to add specific constraint rules to the search space to reduce the size of the space.

+

+

+RandomSearcher

+--------------

+

+As its name suggests, Random Search uses random combinations of hyperparameters.

+

+**Code example**

+

+.. code-block:: python

+ :linenos:

+

+ from hypernets.searchers import RandomSearcher

+ searcher = RandomSearcher(search_space_fn, optimize_direction='min')

+

+

+**Required Parameters**

+

+- *space_fn*: callable, A search space function which when called returns a ``HyperSpace`` instance

+

+**Optional Parameters**

+

+- *optimize_direction*: 'min' or 'max', (default='min'), Whether the search process is approaching the maximum or minimum reward value.

+- *space_sample_validation_fn*: callable or None, (default=None), Used to verify the validity of samples from the search space, and can be used to add specific constraint rules to the search space to reduce the size of the space.

+

+Multi-objective optimization

+============================

+

+NSGA-II: Non-dominated Sorting Genetic Algorithm

+------------------------------------------------

+

+NSGA-II is a dominate-based genetic algorithm used for multi-objective optimization. It rank individuals into levels

+according to the dominance relationship then calculate crowded-distance within a level. The ranking levels and

+crowded-distance are used to sort individuals in population and keep population size to be stable.

+

+.. figure:: ./images/nsga2_procedure.png

+ :align: center

+ :scale: 50%

+

+

+:py:class:`~hypernets.searchers.nsga_searcher.NSGAIISearcher` code example:

+

+ >>> from sklearn.model_selection import train_test_split

+ >>> from sklearn.preprocessing import LabelEncoder

+ >>> from hypernets.core.random_state import set_random_state, get_random_state

+ >>> from hypernets.examples.plain_model import PlainSearchSpace, PlainModel

+ >>> from hypernets.model.objectives import create_objective

+ >>> from hypernets.searchers.genetic import create_recombination

+ >>> from hypernets.searchers.nsga_searcher import NSGAIISearcher

+ >>> from hypernets.tabular.datasets import dsutils

+ >>> from hypernets.tabular.sklearn_ex import MultiLabelEncoder

+ >>> from hypernets.utils import logging as hyn_logging