diff --git a/.github/ISSUE_TEMPLATE.md b/.github/ISSUE_TEMPLATE.md

deleted file mode 100644

index 36e02cda4..000000000

--- a/.github/ISSUE_TEMPLATE.md

+++ /dev/null

@@ -1,65 +0,0 @@

-

-

-## Checklist

-Please confirm the following:

-

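-* I have carefully read the following documents and did not find an answer:

- - [Homepage documentation](https://github.com/hankcs/HanLP)

- - [wiki](https://github.com/hankcs/HanLP/wiki)

- - [FAQ](https://github.com/hankcs/HanLP/wiki/FAQ)

-* I have searched for my problem with [Google](https://www.google.com/#newwindow=1&q=HanLP) and the [issue search](https://github.com/hankcs/HanLP/issues) and did not find an answer either.

-* I understand that the open-source community is a free community that came together out of shared interest and bears no responsibility or obligation. I will speak politely and thank everyone who helps me.

-* [ ] I have typed an x inside these brackets to confirm all of the above.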

-

-## Version

-

-

-The current latest version is:

-The version I am using is:

-

-

-

-## My question

-

-

-

-## Reproducing the problem

-

-

-### Steps

-

-1. First...

-2. Then...

-3. Next...

-

-### Code that triggers the problem

-

-```

- public void testIssue1234() throws Exception

- {

- CustomDictionary.add("用户词语");

- System.out.println(StandardTokenizer.segment("触发问题的句子"));

- }

-```

-### Expected output

-

-

-

-```

-Expected output

-```

-

-### Actual output

-

-

-

-```

-Actual output

-```

-

-## Other information

-

-

-

diff --git a/.github/bug_report.md b/.github/bug_report.md

new file mode 100644

index 000000000..ae3589912

--- /dev/null

+++ b/.github/bug_report.md

@@ -0,0 +1,44 @@

+---

+name: 🐛 Found a bug

+about: Please include the version number, the code that triggers the bug, and the error log

+title: ''

+labels: bug

+assignees: hankcs

+

+---

+

+

+

+**Describe the bug**

+A clear and concise description of what the bug is.

+

+**Code to reproduce the issue**

+Provide a reproducible test case that is the bare minimum necessary to generate the problem.

+

+```python

+```

+

+**Describe the current behavior**

+A clear and concise description of what happened.

+

+**Expected behavior**

+A clear and concise description of what you expected to happen.

+

+**System information**

+- OS Platform and Distribution (e.g., Linux Ubuntu 16.04):

+- Python/Java version:

+- HanLP version:

+

+**Other info / logs**

+Include any logs or source code that would be helpful to diagnose the problem. If including tracebacks, please include the full traceback. Large logs and files should be attached.

+

+* [ ] I've completed this form and searched the web for solutions.

+

+

+

\ No newline at end of file

diff --git a/.github/config.yml b/.github/config.yml

new file mode 100755

index 000000000..0180a0e49

--- /dev/null

+++ b/.github/config.yml

@@ -0,0 +1,5 @@

+blank_issues_enabled: false

+contact_links:

+  - name: ⁉️ For questions and help, please use the forum

+    url: https://bbs.hanlp.com/

+    about: You are welcome to ask for help in the Chinese community

diff --git a/.github/feature_request.md b/.github/feature_request.md

new file mode 100644

index 000000000..af4b92452

--- /dev/null

+++ b/.github/feature_request.md

@@ -0,0 +1,36 @@

+---

+name: 🚀 Feature request

+about: Suggest a new feature

+title: ''

+labels: feature request

+assignees: hankcs

+

+---

+

+

+

+**Describe the feature and the current behavior/state.**

+

+**Will this change the current api? How?**

+

+**Who will benefit from this feature?**

+

+**Are you willing to contribute it (Yes/No):**

+

+**System information**

+- OS Platform and Distribution (e.g., Linux Ubuntu 16.04):

+- Python/Java version:

+- HanLP version:

+

+**Any other info**

+

+* [ ] I've carefully completed this form.

+

+

+

\ No newline at end of file

diff --git a/README.md b/README.md

index ec1130891..b90a91473 100644

--- a/README.md

+++ b/README.md

@@ -2,79 +2,69 @@ HanLP: Han Language Processing

=====

Han language processing toolkit

-[](https://maven-badges.herokuapp.com/maven-central/com.hankcs/hanlp/)

+[](https://mvnrepository.com/artifact/com.hankcs/hanlp)

[](https://github.com/hankcs/hanlp/releases)

[](https://www.apache.org/licenses/LICENSE-2.0.html)

[](https://hub.docker.com/r/samurais/hanlp-api/)

------

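-**HanLP** is a Java toolkit made up of a series of models and algorithms, with the goal of popularizing natural language processing in production environments. **HanLP** is feature-complete, efficient, cleanly architected, trained on up-to-date corpora, and customizable.

+HanLP is an NLP toolkit made up of a series of models and algorithms, with the goal of popularizing natural language processing in production environments. HanLP is feature-complete, efficient, cleanly architected, trained on up-to-date corpora, and customizable. Its internal algorithms have been tested in both industry and academia, and the companion book [《自然语言处理入门》 (*Introduction to Natural Language Processing*)](http://nlp.hankcs.com/book.php) has been published. The deep-learning-based [HanLP 2.x](https://github.com/hankcs/HanLP/tree/doc-zh) has now been officially released. It brings next-generation, state-of-the-art NLP and supports joint tasks in 104 languages, including Simplified and Traditional Chinese, English, Japanese, Russian, French, and German. If you use HanLP in your research, please cite our [EMNLP paper](https://aclanthology.org/2021.emnlp-main.451/).

-**HanLP** provides the following features:

+HanLP provides the following features: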

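* Chinese word segmentation

- * Shortest-path segmentation

- * N-shortest-path segmentation

- * CRF segmentation

- * Index segmentation

- * High-speed dictionary segmentation

- * User-defined dictionaries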

+ * HMM-Bigram (best balance of speed and accuracy; about 100 MB of memory)

+ * [Shortest-path segmentation](https://github.com/hankcs/HanLP/tree/1.x#1-%E7%AC%AC%E4%B8%80%E4%B8%AAdemo), [N-shortest-path segmentation](https://github.com/hankcs/HanLP/tree/1.x#5-n-%E6%9C%80%E7%9F%AD%E8%B7%AF%E5%BE%84%E5%88%86%E8%AF%8D)

+ * Character-based word formation (focused on accuracy; trained on the world's largest corpus; recognizes new words; suited to NLP tasks)

+ * [Perceptron segmentation](https://github.com/hankcs/HanLP/wiki/%E7%BB%93%E6%9E%84%E5%8C%96%E6%84%9F%E7%9F%A5%E6%9C%BA%E6%A0%87%E6%B3%A8%E6%A1%86%E6%9E%B6), [CRF segmentation](https://github.com/hankcs/HanLP/tree/1.x#6-crf%E5%88%86%E8%AF%8D)

+ * Dictionary-based segmentation (focused on speed: tens of millions of characters per second; low memory use)

+ * [High-speed dictionary segmentation](https://github.com/hankcs/HanLP/tree/1.x#7-%E6%9E%81%E9%80%9F%E8%AF%8D%E5%85%B8%E5%88%86%E8%AF%8D)

+ * All segmenters support:

+ * [Index (full segmentation) mode](https://github.com/hankcs/HanLP/tree/1.x#4-%E7%B4%A2%E5%BC%95%E5%88%86%E8%AF%8D)

+ * [User-defined dictionaries](https://github.com/hankcs/HanLP/tree/1.x#8-%E7%94%A8%E6%88%B7%E8%87%AA%E5%AE%9A%E4%B9%89%E8%AF%8D%E5%85%B8)

+ * [Traditional Chinese support](https://github.com/hankcs/HanLP/blob/1.x/src/test/java/com/hankcs/demo/DemoPerceptronLexicalAnalyzer.java#L29)

+ * [Training your own domain models](https://github.com/hankcs/HanLP/wiki)

* Part-of-speech tagging

+ * [HMM part-of-speech tagging](https://github.com/hankcs/HanLP/blob/1.x/src/main/java/com/hankcs/hanlp/seg/Segment.java#L584) (fast)

+ * [Perceptron part-of-speech tagging](https://github.com/hankcs/HanLP/wiki/%E7%BB%93%E6%9E%84%E5%8C%96%E6%84%9F%E7%9F%A5%E6%9C%BA%E6%A0%87%E6%B3%A8%E6%A1%86%E6%9E%B6), [CRF part-of-speech tagging](https://github.com/hankcs/HanLP/wiki/CRF%E8%AF%8D%E6%B3%95%E5%88%86%E6%9E%90) (high accuracy)

* Named entity recognition

- * Chinese person name recognition

- * Transliterated person name recognition

- * Japanese person name recognition

- * Place name recognition

- * Organization name recognition

+ * Named entity recognition based on HMM role tagging (fast)

+ * [Chinese person name recognition](https://github.com/hankcs/HanLP/tree/1.x#9-%E4%B8%AD%E5%9B%BD%E4%BA%BA%E5%90%8D%E8%AF%86%E5%88%AB), [transliterated person name recognition](https://github.com/hankcs/HanLP/tree/1.x#10-%E9%9F%B3%E8%AF%91%E4%BA%BA%E5%90%8D%E8%AF%86%E5%88%AB), [Japanese person name recognition](https://github.com/hankcs/HanLP/tree/1.x#11-%E6%97%A5%E6%9C%AC%E4%BA%BA%E5%90%8D%E8%AF%86%E5%88%AB), [place name recognition](https://github.com/hankcs/HanLP/tree/1.x#12-%E5%9C%B0%E5%90%8D%E8%AF%86%E5%88%AB), [organization name recognition](https://github.com/hankcs/HanLP/tree/1.x#13-%E6%9C%BA%E6%9E%84%E5%90%8D%E8%AF%86%E5%88%AB)

+ * Named entity recognition based on linear models (high accuracy)

+ * [Perceptron named entity recognition](https://github.com/hankcs/HanLP/wiki/%E7%BB%93%E6%9E%84%E5%8C%96%E6%84%9F%E7%9F%A5%E6%9C%BA%E6%A0%87%E6%B3%A8%E6%A1%86%E6%9E%B6), [CRF named entity recognition](https://github.com/hankcs/HanLP/wiki/CRF%E8%AF%8D%E6%B3%95%E5%88%86%E6%9E%90)

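* Keyword extraction

- * TextRank keyword extraction

+ * [TextRank keyword extraction](https://github.com/hankcs/HanLP/tree/1.x#14-%E5%85%B3%E9%94%AE%E8%AF%8D%E6%8F%90%E5%8F%96)

* Automatic summarization

- * TextRank automatic summarization

+ * [TextRank automatic summarization](https://github.com/hankcs/HanLP/tree/1.x#15-%E8%87%AA%E5%8A%A8%E6%91%98%E8%A6%81)

* Phrase extraction

- * Phrase extraction based on mutual information and left/right entropy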

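-* Pinyin conversion

- * Polyphonic characters

- * Initials

- * Finals

- * Tones

-* Simplified/Traditional Chinese conversion

- * Traditional Chinese word segmentation

+ * [Phrase extraction based on mutual information and left/right entropy](https://github.com/hankcs/HanLP/tree/1.x#16-%E7%9F%AD%E8%AF%AD%E6%8F%90%E5%8F%96)

+* [Pinyin conversion](https://github.com/hankcs/HanLP/tree/1.x#17-%E6%8B%BC%E9%9F%B3%E8%BD%AC%E6%8D%A2)

+ * Polyphonic characters, initials, finals, tones

+* [Simplified/Traditional Chinese conversion](https://github.com/hankcs/HanLP/tree/1.x#18-%E7%AE%80%E7%B9%81%E8%BD%AC%E6%8D%A2)

* Words that differ between Simplified and Traditional Chinese (Simplified, Traditional, Taiwan standard, Hong Kong Traditional)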

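-* Text recommendation

- * Semantic recommendation

- * Pinyin recommendation

- * Character and word recommendation

+* [Text recommendation](https://github.com/hankcs/HanLP/tree/1.x#19-%E6%96%87%E6%9C%AC%E6%8E%A8%E8%8D%90)

+ * Semantic, pinyin, and character/word recommendation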

* Dependency parsing

- * High-performance neural network dependency parser

- * MaxEnt dependency parsing

- * CRF dependency parsing

-* Text classification

- * Sentiment analysis

-* word2vec

+ * [High-performance neural network dependency parser](https://github.com/hankcs/HanLP/tree/1.x#21-%E4%BE%9D%E5%AD%98%E5%8F%A5%E6%B3%95%E5%88%86%E6%9E%90)

+ * [Beam-search dependency parser based on the ArcEager transition system](https://github.com/hankcs/HanLP/blob/1.x/src/test/java/com/hankcs/demo/DemoDependencyParser.java#L34)

+* [Text classification](https://github.com/hankcs/HanLP/wiki/%E6%96%87%E6%9C%AC%E5%88%86%E7%B1%BB%E4%B8%8E%E6%83%85%E6%84%9F%E5%88%86%E6%9E%90)

+ * [Sentiment analysis](https://github.com/hankcs/HanLP/wiki/%E6%96%87%E6%9C%AC%E5%88%86%E7%B1%BB%E4%B8%8E%E6%83%85%E6%84%9F%E5%88%86%E6%9E%90#%E6%83%85%E6%84%9F%E5%88%86%E6%9E%90)

+* [Text clustering](https://github.com/hankcs/HanLP/wiki/%E6%96%87%E6%9C%AC%E8%81%9A%E7%B1%BB)

+ - KMeans, Repeated Bisection, automatic inference of the number of clusters k

+* [word2vec](https://github.com/hankcs/HanLP/wiki/word2vec)

* Word vector training and loading, word similarity computation, semantic arithmetic, queries, KMeans clustering

* Document semantic similarity computation

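-* Corpus tools

- * Segmentation corpus preprocessing

- * Building word-frequency and part-of-speech dictionaries

- * Bigram statistics

- * Word co-occurrence statistics

- * CoNLL corpus preprocessing

- * CoNLL UA/LA/DA evaluation tools

+* [Corpus tools](https://github.com/hankcs/HanLP/tree/1.x/src/main/java/com/hankcs/hanlp/corpus)

+ - Some default models are trained on small corpora, and users are encouraged to train their own. Every module provides a [training interface](https://github.com/hankcs/HanLP/wiki); for a reference corpus, see the [1998 People's Daily corpus](http://file.hankcs.com/corpus/pku98.zip).

-While offering rich functionality, **HanLP** keeps its internal modules loosely coupled, loads models lazily, provides services statically, and publishes dictionaries in plain text, which makes it very convenient to use. It also ships with corpus-processing tools to help users train their own models.

+While offering rich functionality, HanLP keeps its internal modules loosely coupled, loads models lazily, provides services statically, and publishes dictionaries in plain text, which makes it very convenient to use. The default models are trained on the world's largest Chinese corpus, and HanLP also ships with corpus-processing tools to help users train their own models.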

------

## Project homepage

-HanLP downloads: https://github.com/hankcs/HanLP/releases

-

-Download mirror in China: http://hanlp.dksou.com/HanLP.html

-

-Solr and Lucene plugin: https://github.com/hankcs/hanlp-solr-plugin

-

-More details: https://github.com/hankcs/HanLP/wiki

+[《自然语言处理入门》 (*Introduction to Natural Language Processing*)🔥](http://nlp.hankcs.com/book.php), [book code](https://github.com/hankcs/HanLP/tree/v1.7.5/src/test/java/com/hankcs/book), [online demo](http://hanlp.com/), [Python bindings](https://github.com/hankcs/pyhanlp), [Solr and Lucene plugins](https://github.com/hankcs/hanlp-lucene-plugin), [forum](https://bbs.hankcs.com/), [citations](https://github.com/hankcs/HanLP/wiki/papers), [more information](https://github.com/hankcs/HanLP/wiki).

------

@@ -84,70 +74,53 @@ Solr、Lucene插件:https://github.com/hankcs/hanlp-solr-plugin

For convenience, a Portable version with a built-in data package is provided. Just add the following to pom.xml:

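-```

+```xml

<dependency>

    <groupId>com.hankcs</groupId>

    <artifactId>hanlp</artifactId>

-    <version>portable-1.5.3</version>

+    <version>portable-1.8.6</version>

</dependency>

```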

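-With zero configuration, you can use the basic features (everything except CRF segmentation and dependency parsing). If you need customization, see Method 2 and configure HanLP with hanlp.properties.

+With zero configuration, you can use the basic features (everything except character-based word formation and dependency parsing). If you need customization, see Method 2 and configure HanLP with hanlp.properties (the Portable version also supports hanlp.properties).

### Method 2: Download the jar, data, and hanlp.properties

-**HanLP** separates data from code, giving users the freedom to customize.

+HanLP separates data from code, giving users the freedom to customize.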

-#### 1. Download the jar

-

-[hanlp.jar](https://github.com/hankcs/HanLP/releases)

-

-#### 2. Download the data

-

-| Data package | Features | Size (MB) |

-| -------- | -----: | :----: |

-| [data.zip](https://github.com/hankcs/HanLP/releases) | All | 255 |

+#### 1. Download: [data.zip](http://nlp.hankcs.com/download.php?file=data)

After downloading, extract it to any directory; then use the configuration file to tell HanLP where the data package is.

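-Data in **HanLP** is divided into *dictionaries* and *models*: *dictionaries* are required for lexical analysis, and *models* are required for syntactic parsing.

+Data in HanLP is divided into *dictionaries* and *models*: *dictionaries* are required for lexical analysis, and *models* are required for syntactic parsing.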

data

│

├─dictionary

└─model

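-You can add, remove, or replace files as you like; if you do not need syntactic parsing, you can delete the model folder at any time.

+You can add, remove, or replace files as you like; if you do not need syntactic parsing or similar features, you can delete the model folder at any time.

+

- There is no absolute distinction between models and dictionaries: the hidden Markov model is stored as a dictionary that anyone can edit, but that does not make it any less of a model.

- The GitHub repository already contains the dictionaries from data.zip, so you can compile and run directly and they will be cached automatically; models must be downloaded separately.

-#### 3. Configuration file

-Sample configuration file: [hanlp.properties](https://github.com/hankcs/HanLP/releases)

-On the GitHub releases page, `hanlp.properties` is usually packaged in the same `zip` as the `jar`.

+#### 2. Download the jar and configuration file: [hanlp-release.zip](http://nlp.hankcs.com/download.php?file=jar)

The configuration file tells HanLP where the data package is. You only need to change the first line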

- root=usr/home/HanLP/

+ root=D:/JavaProjects/HanLP/

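to the **parent directory** of data. For example, if the data directory is `/Users/hankcs/Documents/data`, then set `root=/Users/hankcs/Documents/`.

-- If you choose the mini dictionaries, modify the configuration file as follows: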

-```

-CoreDictionaryPath=data/dictionary/CoreNatureDictionary.mini.txt

-BiGramDictionaryPath=data/dictionary/CoreNatureDictionary.ngram.mini.txt

-```

-

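-Finally, put `hanlp.properties` on the classpath. For any project, you can place it in the src or resources directory, and the IDE will copy it to the classpath automatically at build time.

+Finally, put `hanlp.properties` on the classpath. For most projects, you can place it in the src or resources directory, and the IDE will copy it to the classpath automatically at build time. Besides the configuration file, you can also set `root` with the environment variable `HANLP_ROOT`. For Android projects, see the [demo](https://github.com/hankcs/HanLPAndroidDemo).

If it is placed incorrectly, HanLP will suggest a suitable path for the current environment and try to read the data from the project root directory.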
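
The "parent directory" rule for `root` can be illustrated with a small standalone sketch. It is not HanLP's configuration loader: the class `RootResolverSketch` and its methods are hypothetical, and adding a missing trailing `/` is our own convenience. It reads only `root` from properties text and appends a relative data path to it, which is why `root` has to be the parent of `data`. How HanLP combines the file with `HANLP_ROOT` is not modeled here.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

/**
 * Hypothetical sketch, not HanLP's loader: relative data paths such as
 * "data/dictionary/..." are appended to root, so root must be the PARENT of data.
 */
public class RootResolverSketch
{
    /** Parses properties text, e.g. the contents of a hanlp.properties file. */
    static Properties load(String propertiesText)
    {
        Properties p = new Properties();
        try
        {
            p.load(new StringReader(propertiesText));
        }
        catch (IOException e)
        {
            throw new UncheckedIOException(e); // not expected for an in-memory reader
        }
        return p;
    }

    /** Joins root and a relative path, adding a '/' if root lacks one (our convenience). */
    static String resolve(Properties p, String relativePath)
    {
        String root = p.getProperty("root", "");
        if (!root.isEmpty() && !root.endsWith("/"))
        {
            root = root + "/";
        }
        return root + relativePath;
    }

    public static void main(String[] args)
    {
        Properties p = load("root=/Users/hankcs/Documents/\n");
        // Prints /Users/hankcs/Documents/data/dictionary/custom/CustomDictionary.txt
        System.out.println(resolve(p, "data/dictionary/custom/CustomDictionary.txt"));
    }
}
```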

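## Usage

-Almost every feature of **HanLP** can be called conveniently through the utility class `HanLP`. When you cannot remember a method, just type `HanLP.` and your IDE should offer suggestions and display **HanLP**'s comprehensive documentation.

-

-*We recommend always calling features through the utility class `HanLP`. The benefit is that when **HanLP** is upgraded in the future, you will not need to change your calling code.*

+Almost every feature of HanLP can be called conveniently through the utility class `HanLP`. When you cannot remember a method, just type `HanLP.` and your IDE should offer suggestions and display HanLP's comprehensive documentation.

-All demos are in [com.hankcs.demo](https://github.com/hankcs/HanLP/tree/master/src/test/java/com/hankcs/demo). They cover more details than the documentation, and running them all is strongly recommended.

+All demos are in [com.hankcs.demo](https://github.com/hankcs/HanLP/tree/1.x/src/test/java/com/hankcs/demo). They cover more details than the documentation and are updated more promptly, so **running them all is strongly recommended**. Only some commonly used interfaces are listed here.

### 1. First demo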

@@ -155,10 +128,10 @@ BiGramDictionaryPath=data/dictionary/CoreNatureDictionary.ngram.mini.txt

System.out.println(HanLP.segment("你好,欢迎使用HanLP汉语处理包!"));

```

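- Memory requirements

- * **HanLP** has optimized its dictionary data structures over a long period and can handle the vast majority of scenarios. Even if **HanLP**'s dictionaries exceed a hundred megabytes, there is no need to worry, because they are carefully compressed in memory.

- * If memory is very limited, use the small dictionaries. **HanLP** uses the large dictionaries by default and also provides small ones; see the configuration file section.

-- For developers compiling **HanLP**

- * If you are compiling and running **HanLP** code checked out from GitHub and have not downloaded the data cache, the first load of a dictionary or model triggers an *automatic caching* process.

+ * With at least 120 MB of memory (-Xms120m -Xmx120m -Xmn64m) and the standard data package (350,000-word core lexicon plus the default user dictionary), segmentation tests run normally. All dictionaries and models are loaded lazily: a model you never use is effectively absent and can be deleted freely.

+ * HanLP has optimized its dictionary data structures over a long period and can handle the vast majority of scenarios. Even if HanLP's dictionaries exceed a hundred megabytes, there is no need to worry, because they are carefully compressed in memory. If memory is very limited, use the small dictionaries. HanLP uses the large dictionaries by default and also provides small ones; see the configuration file section.

+- For developers compiling HanLP

+ * If you are compiling and running HanLP code checked out from GitHub and have not downloaded the data cache, the first load of a dictionary or model triggers an *automatic caching* process.

* *Automatic caching* speeds up dictionary loading: on subsequent loads, the cached dictionary files load in milliseconds. Because the dictionaries are large, *automatic caching* takes some time, so please be patient.

* *Automatic caching* does not store the plain-text dictionaries but data structures such as the double-array trie, DAWG, and AhoCorasickDoubleArrayTrie.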

@@ -169,7 +142,7 @@ List termList = StandardTokenizer.segment("商品和服务");

System.out.println(termList);

```

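- Notes

- * **HanLP** has a series of "out-of-the-box" static segmenters whose names end in `Tokenizer`; the following examples introduce more of them.

+ * HanLP has a series of "out-of-the-box" static segmenters whose names end in `Tokenizer`; the following examples introduce more of them.

* `HanLP.segment` is actually a wrapper around `StandardTokenizer.segment`.

* The segmentation result includes part-of-speech tags; for the meaning of each tag, see the [HanLP part-of-speech tag set](http://www.hankcs.com/nlp/part-of-speech-tagging.html#h2-8).

- Algorithm details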

@@ -178,11 +151,14 @@ System.out.println(termList);

### 3. NLP segmentation

```java

-List termList = NLPTokenizer.segment("中国科学院计算技术研究所的宗成庆教授正在教授自然语言处理课程");

-System.out.println(termList);

+System.out.println(NLPTokenizer.segment("我新造一个词叫幻想乡你能识别并标注正确词性吗?"));

+// Note the parts of speech of the two occurrences of “希望” and of the two occurrences of “晚霞” below

+System.out.println(NLPTokenizer.analyze("我的希望是希望张晚霞的背影被晚霞映红").translateLabels());

+System.out.println(NLPTokenizer.analyze("支援臺灣正體香港繁體:微软公司於1975年由比爾·蓋茲和保羅·艾倫創立。"));

```

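- Notes

- * NLP segmentation with `NLPTokenizer` performs all named entity recognition and part-of-speech tagging.

+ * NLP segmentation with `NLPTokenizer` performs part-of-speech tagging and named entity recognition, powered by the [structured perceptron sequence labeling framework](https://github.com/hankcs/HanLP/wiki/%E7%BB%93%E6%9E%84%E5%8C%96%E6%84%9F%E7%9F%A5%E6%9C%BA%E6%A0%87%E6%B3%A8%E6%A1%86%E6%9E%B6).

+ * The default model is trained on a large, general-purpose corpus of 99.7 million characters, the **world's largest** Chinese word segmentation corpus as far as we know. Corpus size determines real-world performance, and a corpus for production use should be on the order of tens of millions of characters. You are welcome to [train new models](https://github.com/hankcs/HanLP/wiki/%E7%BB%93%E6%9E%84%E5%8C%96%E6%84%9F%E7%9F%A5%E6%9C%BA%E6%A0%87%E6%B3%A8%E6%A1%86%E6%9E%B6) on your own corpus to adapt to new domains and recognize new named entities.

### 4. Index segmentation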

@@ -195,6 +171,7 @@ for (Term term : termList)

```

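- Notes

* Index segmentation with `IndexTokenizer` is designed for search engines: it fully segments long words, and `term.offset` gives each word's offset in the text.

+ * Any segmenter can switch to index mode through the `enableIndexMode` method of the base class `Segment`.

### 5. N-shortest-path segmentation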

@@ -219,23 +196,21 @@ for (String sentence : testCase)

### 6. CRF segmentation

```java

-Segment segment = new CRFSegment();

-segment.enablePartOfSpeechTagging(true);

-List termList = segment.seg("你看过穆赫兰道吗");

-System.out.println(termList);

-for (Term term : termList)

-{

- if (term.nature == null)

- {

- System.out.println("识别到新词:" + term.word);

- }

-}

+ CRFLexicalAnalyzer analyzer = new CRFLexicalAnalyzer();

+ String[] tests = new String[]{

+ "商品和服务",

+ "上海华安工业(集团)公司董事长谭旭光和秘书胡花蕊来到美国纽约现代艺术博物馆参观",

+ "微软公司於1975年由比爾·蓋茲和保羅·艾倫創立,18年啟動以智慧雲端、前端為導向的大改組。" // Traditional Chinese is supported

+ };

+ for (String sentence : tests)

+ {

+ System.out.println(analyzer.analyze(sentence));

+ }

```

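- Notes

* CRF recognizes new words well, but at a higher computational cost.

- Algorithm details

- * ["A Pure Java Implementation of CRF Segmentation"](http://www.hankcs.com/nlp/segment/crf-segmentation-of-the-pure-java-implementation.html)

- * ["CRF++ Model Format Description"](http://www.hankcs.com/nlp/the-crf-model-format-description.html)

+ * ["CRF Chinese Word Segmentation, Part-of-Speech Tagging, and Named Entity Recognition"](https://github.com/hankcs/HanLP/wiki/CRF%E8%AF%8D%E6%B3%95%E5%88%86%E6%9E%90)

### 7. High-speed dictionary segmentation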

@@ -263,7 +238,7 @@ public class DemoHighSpeedSegment

```

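- Notes

* High-speed segmentation is longest-match dictionary segmentation: it is extremely fast, but its accuracy is only average.

- * It reached 20 million characters per second on an i7.

+ * It reached 45 million characters per second on an i7-6700K.

- Algorithm details

* ["Ultra-Fast Multi-Pattern Matching with an Aho-Corasick Automaton Combined with a DoubleArrayTrie"](http://www.hankcs.com/program/algorithm/aho-corasick-double-array-trie.html)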
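
The following simplified forward-maximum-matching sketch shows why longest-match segmentation is fast but only moderately accurate. It is an illustration under our own assumptions, not HanLP's high-speed segmenter. According to the article linked above, HanLP matches with an AhoCorasickDoubleArrayTrie, whereas this sketch checks substrings against a plain `HashSet`. The class name and the four-word dictionary are made up for the example.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/**
 * Simplified forward-maximum-matching sketch (hypothetical; not HanLP's
 * high-speed segmenter, which uses an AhoCorasickDoubleArrayTrie).
 */
public class LongestMatchSketch
{
    static List<String> segment(String text, Set<String> dictionary)
    {
        int maxLen = 1;
        for (String word : dictionary)
        {
            maxLen = Math.max(maxLen, word.length());
        }
        List<String> result = new ArrayList<>();
        int i = 0;
        while (i < text.length())
        {
            // Default to a single character if no longer dictionary word starts here.
            String match = text.substring(i, i + 1);
            // Try the longest candidate first and stop at the first dictionary hit.
            for (int end = Math.min(text.length(), i + maxLen); end > i + 1; --end)
            {
                String candidate = text.substring(i, end);
                if (dictionary.contains(candidate))
                {
                    match = candidate;
                    break;
                }
            }
            result.add(match);
            i += match.length();
        }
        return result;
    }

    public static void main(String[] args)
    {
        Set<String> dictionary = new HashSet<>(Arrays.asList("商品", "和", "和服", "服务"));
        // Greedy matching takes "和服" (kimono) and strands "务": fast, but not always right.
        System.out.println(segment("商品和服务", dictionary)); // [商品, 和服, 务]
    }
}
```

The input "商品和服务" is the same sentence used in the standard segmentation example. A statistical segmenter would output `[商品, 和, 服务]`, which is the accuracy gap described in the notes.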

@@ -290,7 +265,7 @@ public class DemoCustomDictionary

String text = "攻城狮逆袭单身狗,迎娶白富美,走上人生巅峰"; // As if that could ever happen, LOL!

- // Segmentation with the AhoCorasickDoubleArrayTrie automaton

+ // Use the AhoCorasickDoubleArrayTrie automaton to scan the text for custom words

final char[] charArray = text.toCharArray();

CustomDictionary.parseText(charArray, new AhoCorasickDoubleArrayTrie.IHit()

{

@@ -300,29 +275,22 @@ public class DemoCustomDictionary

System.out.printf("[%d:%d]=%s %s\n", begin, end, new String(charArray, begin, end - begin), value);

}

});

- // Trie-based segmentation

- BaseSearcher searcher = CustomDictionary.getSearcher(text);

- Map.Entry entry;

- while ((entry = searcher.next()) != null)

- {

- System.out.println(entry);

- }

- // Standard segmentation

+ // The custom dictionary takes effect in every segmenter

System.out.println(HanLP.segment(text));

}

}

```

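- Notes

- * `CustomDictionary` is a global user-defined dictionary that can be modified at any time and affects all segmenters.

- * It can also be turned off in any segmenter. Words added or removed dynamically in code are not saved to the dictionary file.

+ * `CustomDictionary` is a global user-defined dictionary that can be modified at any time and affects all segmenters. It can also be turned off in any segmenter. Words added or removed dynamically in code are not saved to the dictionary file.

+ * Chinese word segmentation ≠ a dictionary: a dictionary alone cannot solve Chinese word segmentation. `Segment` offers high and low priority modes for different scenarios; see the [FAQ](https://github.com/hankcs/HanLP/wiki/FAQ#%E4%B8%BA%E4%BB%80%E4%B9%88%E4%BF%AE%E6%94%B9%E4%BA%86%E8%AF%8D%E5%85%B8%E8%BF%98%E6%98%AF%E6%B2%A1%E6%9C%89%E6%95%88%E6%9E%9C).

- Appending dictionaries

* The main text file of `CustomDictionary` is `data/dictionary/custom/CustomDictionary.txt`. You can add your own words there (not recommended), or create a separate text file and append it through the configuration entry `CustomDictionaryPath=data/dictionary/custom/CustomDictionary.txt; 我的词典.txt;` (recommended).

* We always recommend putting words with the same part of speech into the same dictionary file, which makes them easier to maintain and share.

- Dictionary format

* Each line represents one word, in the format `[word] [POS A] [frequency of A] [POS B] [frequency of B] ...`. If no part of speech is given, the dictionary's default part of speech is used.

* A dictionary's default part of speech is the noun `n`, which can be changed in the configuration file, e.g. `全国地名大全.txt ns;`: if a dictionary path is followed by a space and then a part of speech, that part of speech becomes the dictionary's default.

- * In shortest-path segmentation based on the cascaded hidden Markov model, words in the custom dictionary are not guaranteed to be segmented out.

+ * In statistical segmentation, words in the custom dictionary are not guaranteed to be segmented out. If you understand the consequences, you can force them to take effect with `Segment#enableCustomDictionaryForcing`.

* For more information about user dictionaries, see the **Dictionary notes** chapter.

- Algorithm details

* ["Trie-Based Word Segmentation"](http://www.hankcs.com/program/java/tire-tree-participle.html)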
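
The two formats above (dictionary lines and `CustomDictionaryPath` entries) can be parsed in a few lines. This sketch is hypothetical and is not HanLP's dictionary loader. The class `DictionaryLineSketch` is invented for illustration, and so is the placeholder frequency of 1 that it records when a line has no part of speech, because the README does not say what frequency HanLP assigns in that case.

```java
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;

/**
 * Hypothetical parser for the custom dictionary formats; not HanLP's loader.
 * Line format: [word] [POS A] [frequency of A] [POS B] [frequency of B] ...
 */
public class DictionaryLineSketch
{
    /** Placeholder frequency for lines without a POS: an assumption of this sketch. */
    static final int ASSUMED_FREQUENCY = 1;

    static final class Entry
    {
        final String word;
        final Map<String, Integer> natures; // POS -> frequency, in file order

        Entry(String word, Map<String, Integer> natures)
        {
            this.word = word;
            this.natures = Collections.unmodifiableMap(natures);
        }
    }

    /** Parses one dictionary line, falling back to the dictionary's default POS. */
    static Entry parseLine(String line, String defaultNature)
    {
        String[] parts = line.trim().split("\\s+");
        Map<String, Integer> natures = new LinkedHashMap<>();
        if (parts.length == 1)
        {
            natures.put(defaultNature, ASSUMED_FREQUENCY);
        }
        else if (parts.length % 2 == 0)
        {
            throw new IllegalArgumentException("Expected POS/frequency pairs: " + line);
        }
        else
        {
            for (int i = 1; i < parts.length; i += 2)
            {
                natures.put(parts[i], Integer.parseInt(parts[i + 1]));
            }
        }
        return new Entry(parts[0], natures);
    }

    /**
     * Parses one entry of CustomDictionaryPath, e.g. "全国地名大全.txt ns", into
     * {path, defaultNature}, using the noun "n" when no POS follows the path.
     */
    static String[] parsePathSpec(String spec)
    {
        String[] parts = spec.trim().split("\\s+");
        return new String[]{parts[0], parts.length > 1 ? parts[1] : "n"};
    }
}
```

For example, the made-up line `攻城狮 nz 1024` yields the word `攻城狮` with `{nz=1024}`. A bare `单身狗` line inherits whatever default part of speech its dictionary file declares.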

@@ -351,6 +319,7 @@ for (String sentence : testCase)

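* Almost all segmenters currently enable Chinese person name recognition by default, for example the segmenter behind `HanLP.segment()`, so you do not need to enable it manually; the code above does so only for emphasis.

* There is some false-positive rate. For example, if `关键年` is wrongly recognized as a name, you can rule that out by adding the entry `关键年 A 1` to `data/dictionary/person/nr.txt`, or you can register `关键年` as a new word in the custom dictionary.

* If the methods above solve your problem, you are welcome to submit a pull request; dictionaries are valuable assets too.

+ * NLP users are advised to use the perceptron or CRF lexical analyzer, which are more accurate.

- Algorithm details

* ["Chinese Person Name Recognition with HMM-Viterbi Role Tagging in Practice"](http://www.hankcs.com/nlp/chinese-name-recognition-in-actual-hmm-viterbi-role-labeling.html)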

@@ -409,6 +378,7 @@ for (String sentence : testCase)

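- Notes

* Standard segmenters currently disable place name recognition by default, so you need to enable it manually. This is because it costs performance, and most place names are already included in the core dictionary and user-defined dictionaries anyway.

* In production, use dictionaries for every problem that dictionaries can solve; it is the most efficient and stable approach.

+ * Users with high demands on named entity recognition are advised to use the [perceptron lexical analyzer](https://github.com/hankcs/HanLP/wiki/%E7%BB%93%E6%9E%84%E5%8C%96%E6%84%9F%E7%9F%A5%E6%9C%BA%E6%A0%87%E6%B3%A8%E6%A1%86%E6%9E%B6).

- Algorithm details

* ["Place Name Recognition with HMM-Viterbi Role Tagging in Practice"](http://www.hankcs.com/nlp/ner/place-names-to-identify-actual-hmm-viterbi-role-labeling.html)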

@@ -430,6 +400,7 @@ for (String sentence : testCase)

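- Notes

* Segmenters currently disable organization name recognition by default, so you need to enable it manually. This is because it costs performance, and common organization names are already included in the core dictionary and user-defined dictionaries anyway.

* HanLP's goal is not to showcase dynamic recognition. In production, use dictionaries for every problem that dictionaries can solve; it is the most efficient and stable approach.

+ * Users with high demands on named entity recognition are advised to use the [perceptron lexical analyzer](https://github.com/hankcs/HanLP/wiki/%E7%BB%93%E6%9E%84%E5%8C%96%E6%84%9F%E7%9F%A5%E6%9C%BA%E6%A0%87%E6%B3%A8%E6%A1%86%E6%9E%B6).

- Algorithm details

* ["Organization Name Recognition under a Cascaded HMM-Viterbi Role Tagging Model"](http://www.hankcs.com/nlp/ner/place-name-recognition-model-of-the-stacked-hmm-viterbi-role-labeling.html)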

@@ -563,9 +534,9 @@ public class DemoPinyin

}

```

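- Notes

- * **HanLP** supports not only basic conversion from Chinese characters to pinyin but also initials, finals, tones, tone-marked pinyin, and the first-letter and first-initial abbreviations used by input methods.

- * **HanLP** can recognize polyphonic characters and can also annotate Traditional Chinese with pinyin.

- * Most importantly, **HanLP**'s pattern matching has been upgraded to `AhoCorasickDoubleArrayTrie`, which greatly improves performance and delivers millisecond-level response times!

+ * HanLP supports not only basic conversion from Chinese characters to pinyin but also initials, finals, tones, tone-marked pinyin, and the first-letter and first-initial abbreviations used by input methods.

+ * HanLP can recognize polyphonic characters and can also annotate Traditional Chinese with pinyin.

+ * Most importantly, HanLP's pattern matching has been upgraded to `AhoCorasickDoubleArrayTrie`, which greatly improves performance and delivers millisecond-level response times!

- Algorithm details

* ["A Java Implementation of Chinese-Character-to-Pinyin and Simplified/Traditional Conversion"](http://www.hankcs.com/nlp/java-chinese-characters-to-pinyin-and-simplified-conversion-realization.html#h2-17)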

@@ -586,7 +557,7 @@ public class DemoTraditionalChinese2SimplifiedChinese

}

```

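- Notes

- * **HanLP** can recognize words that differ between Simplified and Traditional Chinese, such as `打印机=印表機`. Many conversion tools cannot tell apart the two “后” characters in “以后” (after) and “皇后” (queen); **HanLP** can.

+ * HanLP can recognize words that differ between Simplified and Traditional Chinese, such as `打印机=印表機`. Many conversion tools cannot tell apart the two “后” characters in “以后” (after) and “皇后” (queen); HanLP can.

- Algorithm details

* ["A Java Implementation of Chinese-Character-to-Pinyin and Simplified/Traditional Conversion"](http://www.hankcs.com/nlp/java-chinese-characters-to-pinyin-and-simplified-conversion-realization.html#h2-17)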
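
The “以后/皇后” example shows why word-level mappings must take precedence over character-level ones. Below is a toy sketch of that idea: it tries a longest-match lookup in a phrase table first and falls back to a per-character table. The class `ConversionSketch` and both tiny mapping tables are invented for illustration. HanLP ships its own conversion dictionaries and matching code, which this sketch does not reproduce.

```java
import java.util.HashMap;
import java.util.Map;

/**
 * Toy sketch of phrase-aware Simplified-to-Traditional conversion
 * (hypothetical; not HanLP's converter or dictionaries).
 */
public class ConversionSketch
{
    static String convert(String text, Map<String, String> phrases, Map<Character, Character> chars)
    {
        int maxLen = 1;
        for (String p : phrases.keySet())
        {
            maxLen = Math.max(maxLen, p.length());
        }
        StringBuilder out = new StringBuilder();
        int i = 0;
        while (i < text.length())
        {
            String hit = null;
            // Longest phrase first, so a whole word such as "皇后" wins over its characters.
            for (int end = Math.min(text.length(), i + maxLen); end > i; --end)
            {
                String candidate = text.substring(i, end);
                if (phrases.containsKey(candidate))
                {
                    hit = candidate;
                    break;
                }
            }
            if (hit != null)
            {
                out.append(phrases.get(hit));
                i += hit.length();
            }
            else
            {
                // Character-level fallback; unmapped characters are copied unchanged.
                char c = text.charAt(i);
                out.append(chars.getOrDefault(c, c));
                i++;
            }
        }
        return out.toString();
    }

    public static void main(String[] args)
    {
        Map<String, String> phrases = new HashMap<>();
        phrases.put("打印机", "印表機"); // divergent word, as in the example above
        phrases.put("皇后", "皇后");     // "后" meaning "queen" stays unchanged
        Map<Character, Character> chars = new HashMap<>();
        chars.put('后', '後'); // default reading: "后" meaning "after"
        chars.put('机', '機');
        System.out.println(convert("以后皇后用打印机", phrases, chars)); // 以後皇后用印表機
    }
}
```

If the phrase table is left empty, the same input produces the wrong “皇後”. That failure is exactly what the notes say many conversion tools get wrong.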

@@ -622,7 +593,7 @@ public class DemoSuggester

}

```

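- Notes

- * When a user types a word into a search engine's input box, the search engine suggests the most suitable search terms; **HanLP** implements similar functionality.

+ * When a user types a word into a search engine's input box, the search engine suggests the most suitable search terms; HanLP implements similar functionality.

* The weight of each recognizer can be adjusted dynamically.

### 20. Semantic distance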

@@ -675,7 +646,7 @@ public class DemoWord2Vec

```java

/**

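- * Dependency parsing (the CRF parsing model needs -Xms512m -Xmx512m -Xmn256m; the MaxEnt and neural network parsing models need -Xms1g -Xmx1g -Xmn512m)

+ * Dependency parsing (the MaxEnt and neural network parsing models need -Xms1g -Xmx1g -Xmn512m)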

* @author hankcs

*/

public class DemoDependencyParser

@@ -708,14 +679,12 @@ public class DemoDependencyParser

```

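- Notes

* Internally implemented with `NeuralNetworkDependencyParser`; you can also call `NeuralNetworkDependencyParser.compute(sentence)` directly.

- * You can also call the maximum entropy dependency parser `MaxEntDependencyParser.compute(sentence)`.

+ * You can also call `KBeamArcEagerDependencyParser`, a beam-search dependency parser based on the ArcEager transition system.

- Algorithm details

* ["A Discriminative Dependency Parser Based on a Neural Network Classification Model and a Transition System"](http://www.hankcs.com/nlp/parsing/neural-network-based-dependency-parser.html)

- * ["Implementing a Maximum Entropy Dependency Parser"](http://www.hankcs.com/nlp/parsing/to-achieve-the-maximum-entropy-of-the-dependency-parser.html)

- * ["A Java Implementation of a Chinese Dependency Parser Based on CRF Sequence Labeling"](http://www.hankcs.com/nlp/parsing/crf-sequence-annotation-chinese-dependency-parser-implementation-based-on-java.html)

## Dictionary notes

-This chapter describes the dictionary formats in **HanLP** in detail so that users can customize them. **HanLP** has many dictionaries; their formats are all similar, and they are all plain text files that can be modified at any time.

+This chapter describes the dictionary formats in HanLP in detail so that users can customize them. HanLP has many dictionaries; their formats are all similar, and they are all plain text files that can be modified at any time.

### Basic format

There are two kinds of dictionaries: word-frequency-and-POS dictionaries and word-frequency dictionaries.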

@@ -733,9 +702,9 @@ public class DemoDependencyParser

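### Data structures

-The trie (prefix tree) is the most widely used data structure in **HanLP**. For this reason, I implemented a general-purpose trie that supports generics, traversal, saving, and loading.

+The trie (prefix tree) is the most widely used data structure in HanLP. For this reason, I implemented a general-purpose trie that supports generics, traversal, saving, and loading.

-User-defined dictionaries are stored in an AhoCorasickDoubleArrayTrie and a binary-search trie; other dictionaries use the [Aho-Corasick automaton AhoCorasickDoubleArrayTrie](http://www.hankcs.com/program/algorithm/aho-corasick-double-array-trie.html) built on the [double-array trie (DoubleArrayTrie)](http://www.hankcs.com/program/java/%E5%8F%8C%E6%95%B0%E7%BB%84trie%E6%A0%91doublearraytriejava%E5%AE%9E%E7%8E%B0.html).

+User-defined dictionaries are stored in an AhoCorasickDoubleArrayTrie and a binary-search trie; other dictionaries use the [Aho-Corasick automaton AhoCorasickDoubleArrayTrie](http://www.hankcs.com/program/algorithm/aho-corasick-double-array-trie.html) built on the [double-array trie (DoubleArrayTrie)](http://www.hankcs.com/program/java/%E5%8F%8C%E6%95%B0%E7%BB%84trie%E6%A0%91doublearraytriejava%E5%AE%9E%E7%8E%B0.html). For performance evaluations of some common data structures, see the [wiki](https://github.com/hankcs/HanLP/wiki/%E6%95%B0%E6%8D%AE%E7%BB%93%E6%9E%84).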
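
A trie like the one described above can be sketched in a few dozen lines. The `TrieSketch<V>` below is minimal and hypothetical: it uses one `HashMap` per node and is not HanLP's `DoubleArrayTrie` or binary-search trie. Its `parseText` imitates the `[begin:end]=word` hits printed by the `CustomDictionary.parseText` demo. However, it walks the trie from every start position, so it costs O(n × longest word) instead of the single pass of a true Aho-Corasick automaton.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Minimal generic trie sketch (hypothetical; far less compact than HanLP's tries). */
public class TrieSketch<V>
{
    private static final class Node<T>
    {
        final Map<Character, Node<T>> children = new HashMap<>();
        T value; // non-null when a word ends at this node
    }

    private final Node<V> root = new Node<>();

    public void put(String key, V value)
    {
        Node<V> node = root;
        for (char c : key.toCharArray())
        {
            node = node.children.computeIfAbsent(c, k -> new Node<>());
        }
        node.value = value;
    }

    public V get(String key)
    {
        Node<V> node = root;
        for (char c : key.toCharArray())
        {
            node = node.children.get(c);
            if (node == null)
            {
                return null;
            }
        }
        return node.value;
    }

    /** Reports every stored word occurring in text as "[begin:end]=word". */
    public List<String> parseText(String text)
    {
        List<String> hits = new ArrayList<>();
        for (int begin = 0; begin < text.length(); ++begin)
        {
            Node<V> node = root;
            for (int end = begin; end < text.length(); ++end)
            {
                node = node.children.get(text.charAt(end));
                if (node == null)
                {
                    break; // no stored word continues with this character
                }
                if (node.value != null)
                {
                    hits.add("[" + begin + ":" + (end + 1) + "]=" + text.substring(begin, end + 1));
                }
            }
        }
        return hits;
    }
}
```

For example, after `put("攻城狮", "nz")` and `put("单身狗", "nz")`, calling `parseText("攻城狮逆袭单身狗")` returns `[[0:3]=攻城狮, [5:8]=单身狗]`. `get("攻城")` returns `null` because no word ends at that node.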

### Storage format

@@ -763,23 +732,37 @@ HanLP.Config.enableDebug();

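* Named entity recognition based on role tagging relies heavily on dictionaries, so dictionary quality greatly affects recognition quality.

* These dictionaries share a similar format and principle; read the [corresponding articles](http://www.hankcs.com/category/nlp/ner/) or the code to modify them.

-If your problem is solved, you are welcome to submit a pull request. This is why I keep plain-text dictionaries in the repository: many hands make light work!

+If you still have questions, see the relevant chapters of [《自然语言处理入门》 (*Introduction to Natural Language Processing*)](http://nlp.hankcs.com/book.php). If your problem is solved, you are welcome to submit a pull request. This is why I keep plain-text dictionaries in the repository: many hands make light work!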

------

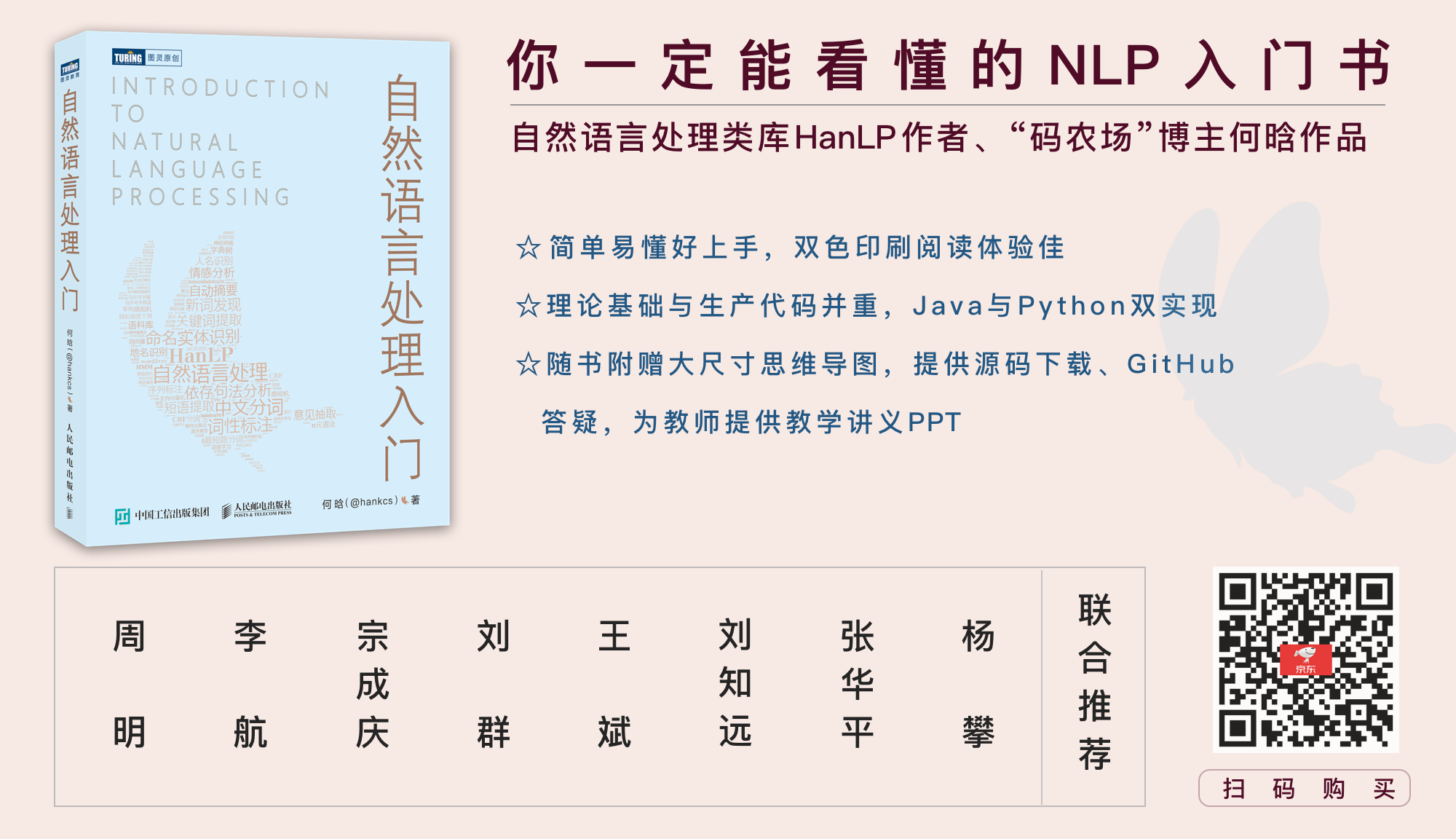

+## [《自然语言处理入门》 (Introduction to Natural Language Processing)](http://nlp.hankcs.com/book.php)

+

+

+

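+An introductory NLP book that accompanies HanLP, giving equal weight to fundamental theory and production code, with implementations in both Python and Java. Starting from basic concepts, it introduces the algorithmic principles and engineering implementations of several popular problems: Chinese word segmentation, part-of-speech tagging, named entity recognition, information extraction, text clustering, text classification, and syntactic parsing. By explaining a variety of algorithms, the book compares their strengths, weaknesses, and suitable scenarios, and it walks through mature production-grade code in detail to help you truly apply natural language processing in production.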

+

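+[《自然语言处理入门》](http://nlp.hankcs.com/book.php) (*Introduction to Natural Language Processing*) has forewords and recommendations by Xia Zhihong, founding chair of the Department of Mathematics at Southern University of Science and Technology; Zhou Ming, Vice President of Microsoft Research Asia; Li Hang, Director of ByteDance AI Lab; Liu Qun, Chief Scientist of Speech and Semantics at Huawei Noah's Ark Lab; Wang Bin, Director of Xiaomi AI Lab and Chief NLP Scientist; Zong Chengqing, Researcher at the Institute of Automation, Chinese Academy of Sciences; Liu Zhiyuan, Associate Professor at Tsinghua University; Zhang Huaping, Associate Professor at Beijing Institute of Technology; and 52nlp. My thanks to all of these senior scholars. I hope this project and this book can set off a "butterfly effect" in your engineering and studies, helping you transform into a butterfly on the road of NLP.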

+

## Copyright

-### Apache License Version 2.0

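+HanLP is licensed under the **Apache License 2.0** and may be used for commercial purposes free of charge. Please include a link to HanLP and its license in your product documentation. HanLP is protected by copyright law, and infringement will be prosecuted.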

+

+##### 自然语义(青岛)科技有限公司

+

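+Starting with v1.7, HanLP operates independently, with 自然语义(青岛)科技有限公司 as the project entity that leads development of subsequent versions and holds their copyright.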

+

+##### 大快搜索

+

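+HanLP v1.3 through v1.65 were developed under the leadership of 大快搜索 and remained fully open source; 大快搜索 holds the related copyright.

-Unless otherwise noted, all modules are licensed under this license.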

+##### 上海林原公司

-### 上海林原信息科技有限公司

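-- The initial intellectual property of the HanLP product belongs to 上海林原信息科技有限公司. Any individual or company may use it free of charge, modify the product and source code in any form, and bundle it in other products for sale.

-- Any project, product, article, or other work that uses all or part of HanLP's features, dictionaries, or models must explicitly credit HanLP and this project's homepage.

+In its early days HanLP received strong support from 上海林原公司, which holds the copyright to version 1.28 and earlier; those versions were also published on 上海林原公司's website.

### Other copyright holders

-- Since `1.2.4`, the project has been maintained by an individual and will continue to accept modules that anyone or any company open-sources to this project.

-- The contributions of all copyright holders are fully respected; this project does not claim copyright over these new modules.

+- In practice, the project is maintained by an individual; anyone and any company are welcome to contribute open-source modules to it.

+- The contributions of all copyright holders are fully respected; this project does not claim copyright over user-contributed modules.

### Acknowledgments

Thanks to the following excellent open-source projects: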

@@ -799,9 +782,9 @@ HanLP.Config.enableDebug();

- An Efficient Implementation of Trie Structures, JUN-ICHI AOE AND KATSUSHI MORIMOTO

- TextRank: Bringing Order into Texts, Rada Mihalcea and Paul Tarau

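-Thanks to Mr. Liu of 上海林原信息科技有限公司 for allowing me to develop HanLP during working hours, providing the server and domain name, and making the open-source release possible. Thanks to all users for your attention and support. HanLP is far from perfect, and I sincerely ask NLP enthusiasts for their continued support and valuable feedback.

+Thanks to all users for your attention and support. HanLP is far from perfect, and I sincerely ask NLP enthusiasts for their continued support and valuable feedback.

Author: [@hankcs](http://weibo.com/hankcs/)

-December 16, 2014

+September 16, 2016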

diff --git a/data/dictionary/pinyin/pinyin.txt b/data/dictionary/pinyin/pinyin.txt

new file mode 100644

index 000000000..58432c069

--- /dev/null

+++ b/data/dictionary/pinyin/pinyin.txt

@@ -0,0 +1,30728 @@

+〇=ling2

+一=yi1

+一丁点儿=yi1,ding1,dian3,er5

+一不小心=yi1,bu4,xiao3,xin1

+一丘之貉=yi1,qiu1,zhi1,he2

+一丝不差=yi4,si1,bu4,cha1

+一丝不苟=yi1,si1,bu4,gou3

+一个=yi1,ge4

+一个半个=yi1,ge4,ban4,ge4

+一个巴掌拍不响=yi1,ge4,ba1,zhang3,pai1,bu4,xiang3

+一个萝卜一个坑=yi1,ge4,luo2,bo5,yi1,ge4,keng1

+一举两得=yi1,ju3,liang3,de2

+一之为甚=yi1,zhi1,wei2,shen4

+一了=yi1,liao3

+一了百了=yi1,liao3,bai3,liao3

+一了百当=yi1,liao3,bai3,dang4

+一事无成=yi1,shi4,wu2,cheng2

+一五一十=yi4,wu3,yi4,shi2

+一些=yi1,xie1

+一些半些=yi1,xie1,ban4,xie1

+一人做事一人当=yi1,ren2,zuo4,shi4,yi1,ren2,dang1

+一仆二主=yi1,pu2,er4,zhu3

+一代=yi1,dai4

+一代不如一代=yi1,dai4,bu4,ru2,yi1,dai4

+一代楷模=yi1,dai4,kai3,mo2

+一代风流=yi1,dai4,feng1,liu2

+一令纸=yi1,ling3,zhi3

+一会=yi1,hui4

+一会儿=yi1,hui4,er5

+一体两面=yi1,ti3,liang3,mian4

+一倍=yi2,bei4

+一倡百和=yi1,chang4,bai3,he4

+一共=yi1,gong4

+一出戏=yi4,chu1,xi4

+一刀两断=yi1,dao1,liang3,duan4

+一分为二=yi1,fen1,wei2,er4

+一分子=yi1,fen4,zi3

+一分钟=yi4,fen1,zhong1

+一切=yi1,qie4

+一切万物=yi1,qie1,wan4,wu4

+一动不动=yi1,dong4,bu4,dong4

+一劳永逸=yi1,lao2,yong3,yi4

+一匹=yi1,pi3

+一匹马=yi4,pi3,ma3

+一半=yi1,ban4

+一卷=yi1,juan4

+一厢情愿=yi1,xiang1,qing2,yuan4

+一去不复返=yi1,qu4,bu4,fu4,fan3

+一发=yi1,fa4

+一发千钧=yi1,fa4,qian1,jun1

+一口咬定=yi1,kou3,yao3,ding4

+一口气=yi1,kou3,qi4

+一只=yi1,zhi1

+一叶扁舟=yi1,ye4,pian1,zhou1

+一吐为快=yi1,tu3,wei2,kuai4

+一向=yi1,xiang4

+一呼百应=yi1,hu1,bai3,ying4

+一命呜呼=yi1,ming4,wu1,hu1

+一哄而上=yi1,hong3,er2,shang4

+一哄而散=yi2,hong4,er2,san4

+一哄而起=yi1,hong4,er2,qi3

+一哭二闹=yi4,ku1,er4,nao4

+一唱一和=yi1,chang4,yi1,he4

+一回儿=yi4,hui2,er5

+一场=yi4,chang2

+一场春梦=yi1,chang3,chun1,meng4

+一场梦=yi4,chang2,meng4

+一场空=yi1,chang2,kong1

+一场雨=yi1,chang2,yu3

+一块石头落了地=yi1,kuai4,shi2,tou5,luo4,le5,di4

+一块石头落地=yi1,kuai4,shi2,tou2,luo4,di4

+一声不吭=yi1,sheng1,bu4,keng1

+一声不响=yi1,sheng1,bu4,xiang3

+一夕一朝=yi1,xi1,yi1,zhao1

+一夕之间=yi4,xi1,zhi1,jian1

+一夜情=yi1,ye4,qing2

+一夜走红=yi2,ye4,zou3,hong2

+一大堆=yi1,da4,dui1

+一天星斗=yi1,tian1,xing1,dou3

+一如既往=yi1,ru2,ji4,wang3

+一定=yi1,ding4

+一定不易=yi1,ding4,bu4,yi4

+一定不移=yi1,ding4,bu4,yi2

+一宿=yi1,xiu3

+一尘不到=yi1,chen2,bu4,dao4

+一尘不染=yi1,chen2,bu4,ran3

+一差二错=yi1,cha1,er4,cuo4

+一帆风顺=yi4,fan1,feng1,shun4

+一幕=yi1,mu4

+一年一度=yi1,nian2,yi1,du4

+一年到头=yi1,nian2,dao4,tou2

+一年半载=yi1,nian2,ban4,zai3

+一年级=yi1,nian2,ji2

+一度=yi1,du4

+一得=yi1,de5

+一得之功=yi1,de2,zhi1,gong1

+一得之愚=yi1,de2,zhi1,yu2

+一得之见=yi1,de2,zhi1,jian4

+一心一意=yi1,xin1,yi1,yi4

+一心向往=yi4,xin1,xiang4,wang3

+一念之差=yi1,nian4,zhi1,cha1

+一念之间=yi1,nian4,zhi1,jian1

+一怒之下=yi1,nu4,zhi1,xia4

+一怔=yi1,zheng1

+一意孤行=yi1,yi4,gu1,xing2

+一成=yi1,cheng2

+一成不变=yi1,cheng2,bu4,bian4

+一成不易=yi1,cheng2,bu4,yi4

+一扎=yi1,za1

+一打=yi1,da3

+一技之长=yi1,ji4,zhi1,chang2

+一把子=yi1,ba4,zi5

+一抔黄沙=yi4,pou2,huang2,sha1

+一报还一报=yi1,bao4,huan2,yi1,bao4

+一掊土=yi1,pou2,tu3

+一撮=yi1,zuo3

+一文不值=yi1,wen2,bu4,zhi2

+一方面=yi1,fang1,mian4

+一无可取=yi1,wu2,ke3,qu3

+一无所得=yi1,wu2,suo3,de2

+一无所有=yi1,wu2,suo3,you3

+一无所知=yi1,wu2,suo3,zhi1

+一无是处=yi1,wu2,shi4,chu4

+一无长物=yi1,wu2,chang2,wu4

+一日三省=yi1,ri4,san1,xing3

+一日三秋=yi1,ri4,san1,qiu1

+一旦=yi1,dan4

+一时千载=yi1,shi2,qian1,zai3

+一时半会儿=yi1,shi2,ban4,hui4,er5

+一时得逞=yi4,shi2,de2,cheng3

+一时疏忽=yi4,shi2,shu1,hu1

+一暴十寒=yi1,pu4,shi2,han2

+一曝十寒=yi1,pu4,shi2,han2

+一月=yi1,yue4

+一服=yi1,fu4

+一服药=yi1,fu4,yao4

+一望无垠=yi1,wang4,wu2,yin2

+一望无涯=yi1,wang4,wu2,ya2

+一望无际=yi1,wang4,wu2,ji4

+一朝=yi1,zhao1

+一朝一夕=yi1,zhao1,yi1,xi1

+一朝之忿=yi1,zhao1,zhi1,fen4

+一朝天子一朝臣=yi1,chao2,tian1,zi3,yi1,chao2,chen2

+一棍子打死=yi1,gun4,zi5,da3,si3

+一模一样=yi4,mu2,yi2,yang4

+一次性=yi1,ci4,xing4

+一步一个脚印=yi1,bu4,yi1,ge4,jiao3,yin4

+一步一步=yi2,bu4,yi2,bu4

+一步步=yi2,bu4,bu4

+一步步地=yi2,bu4,bu4,de5

+一毛不拔=yi1,mao2,bu4,ba2

+一毫不差=yi1,hao2,bu4,cha1

+一气之下=yi1,qi4,zhi1,xia4

+一沓纸=yi4,da2,zhi3

+一沓钞票=yi4,da2,chao1,piao4

+一波三折=yi1,bo1,san1,zhe2

+一泻千里=yi1,xie4,qian1,li3

+一派胡言=yi1,pai4,hu2,yan2

+一派胡言乱语=yi2,pai4,hu2,yan2,luan4,yu3

+一流=yi1,liu2

+一清二楚=yi1,qing1,er4,chu3

+一清宿弊=yi4,qing1,su4,bi4

+一溜儿=yi1,liu4,er2

+一溜烟=yi2,liu4,yan1

+一溜烟跑掉=yi2,liu4,yan1,pao3,diao4

+一点=yi1,dian3

+一点钟=yi4,dian3,zhong1

+一片散沙=yi1,pian4,san4,sha1

+一片昏黑=yi2,pian4,hun1,hei1

+一片混乱=yi1,pian4,hun4,luan4

+一片空白=yi1,pian4,kong1,bai2

+一物降一物=yi1,wu4,xiang2,yi1,wu4

+一现昙华=yi1,xian4,tan2,hua1

+一生一世=yi1,sheng1,yi1,shi4

+一百=yi1,bai3

+一百二十行=yi1,bai3,er4,shi2,hang2

+一百亿=yi4,bai3,yi4

+一百八十度=yi1,bai3,ba1,shi2,du4

+一盘散沙=yi1,pan2,san3,sha1

+一目了然=yi1,mu4,liao3,ran2

+一目五行=yi1,mu4,wu3,hang2

+一目十行=yi1,mu4,shi2,hang2

+一目数行=yi1,mu4,shu4,hang2

+一直=yi1,zhi2

+一直走=yi4,zhi2,zou3

+一窍不通=yi1,qiao4,bu4,tong1

+一笑了之=yi1,xiao4,liao3,zhi1

+一笑了事=yi1,xiao4,le5,shi4

+一笔抹摋=yi1,bi3,mo4,sa4

+一笔抹煞=yi1,bi3,mo3,sha1

+一等奖=yi1,deng3,jiang3

+一筹莫展=yi1,chou2,mo4,zhan3

+一箭上垛=yi1,jian4,shang4,duo4

+一篇文章=yi4,pian1,wen2,zhang1

+一线之隔=yi1,xian4,zhi1,ge2

+一缘一会=yi1,yuan2,yi1,hui4

+一网打尽=yi1,wang3,da3,jin4

+一而再=yi4,er2,zai4

+一肚子=yi1,du3,zi5

+一股劲=yi1,gu3,jin4

+一股劲儿=yi1,gu3,jin4,er5

+一臂之力=yi1,bi4,zhi1,li4

+一致=yi1,zhi4

+一般=yi1,ban1

+一般见识=yi1,ban1,jian4,shi2

+一艘船=yi4,sou1,chuan2

+一见了然=yi1,jian4,le5,ran2

+一见钟情=yi1,jian4,zhong1,qing2

+一视同仁=yi1,shi4,tong2,ren2

+一览无遗=yi4,lan3,wu2,yi2

+一觉=yi1,jiao4

+一言中的=yi1,yan2,zhong1,di4

+一言为定=yi1,yan2,wei2,ding4

+一言九鼎=yi1,yan2,jiu3,ding3

+一言兴邦=yi1,yan2,xing1,bang1

+一言半语=yi1,yan2,ban4,yu3

+一言难尽=yi1,yan2,nan2,jin4

+一语中的=yi1,yu3,zhong4,di4

+一语破的=yi1,yu3,po4,di4

+一诺千金=yi1,nuo4,qian1,jin1

+一贫如洗=yi1,pin2,ru2,xi3

+一贯=yi1,guan4

+一走了之=yi1,zou3,liao3,zhi1

+一起=yi1,qi3

+一路平安=yi1,lu4,ping2,an1

+一路神祇=yi1,lu4,shen2,qi2

+一路顺风=yi1,lu4,shun4,feng1

+一蹴而就=yi1,cu4,er2,jiu4

+一蹶不兴=yi1,jue3,bu4,xing1

+一蹶不振=yi1,jue2,bu4,zhen4

+一边倒=yi1,bian1,dao3

+一还一报=yi1,huan2,yi1,bao4

+一退六二五=yi1,tui1,liu4,er4,wu3

+一通=yi2,tong4

+一邱之貉=yi1,qiu1,zhi1,he4

+一重一掩=yi1,chong2,yi1,yan3

+一针见血=yi1,zhen1,jian4,xie3

+一钱不落虚空地=yi1,qian2,bu4,luo4,xu1,kong1,di4

+一隅=yi1,yu2

+一隅之地=yi1,yu2,zhi1,di4

+一隅之见=yi1,yu2,zhi1,jian4

+一隅之说=yi1,yu2,zhi1,shuo1

+一面=yi1,mian4

+一面之缘=yi1,mian4,zhi1,yuan2

+一面之识=yi1,mian4,zhi1,shi2

+一面倒=yi2,mian4,dao3

+一鞭先著=yi1,bian1,xian1,zhuo2

+一驮粮=yi2,duo4,liang2

+一骨碌=yi1,gu1,lu4

+一鸣惊人=yi1,ming2,jing1,ren2

+丁=ding1,zheng1

+丁点儿=ding1,dian3,er5

+丂=kao3,qiao3,yu2

+七=qi1

+七十二行=qi1,shi2,er4,hang2

+七拱八翘=qi1,gong3,ba1,qiao4

+七次量衣一次裁=qi1,ci4,liang2,yi1,yi1,ci4,cai2

+七行俱下=qi1,hang2,ju4,xia4

+七返还丹=qi1,fan3,huan2,dan1

+丄=shang4

+丅=xia4

+丆=han3

+万=wan4,mo4

+万俟=mo4,qi2

+万别千差=wan4,bie2,qian1,cha1

+万卷=wan4,juan4

+万夫不当=wan4,fu1,bu4,dang1

+万夫不当之勇=wan4,fu1,bu4,dang1,zhi1,yong3

+万头攒动=wan4,tou2,cuan2,dong4

+万应灵丹=wan4,ying4,ling2,dan1

+万应锭=wan4,ying4,ding4

+万户侯=wan4,hu4,hou4

+万无一失=wan4,wu2,yi1,shi1

+万箭攒心=wan4,jian4,cuan2,xin1

+万象更新=wan4,xiang4,geng1,xin1

+万贯家私=wan4,guan4,ji5,si1

+万里长城=wan4,li3,chang2,cheng2

+万里长征=wan4,li3,chang2,zheng1

+丈=zhang4

+丈量=zhang4,liang2

+三=san1

+三不拗六=san1,bu4,niu4,liu4

+三人为众=san1,ren2,wei4,zhong4

+三十六行=san1,shi2,liu4,hang2

+三占从二=san1,zhan1,cong2,er4

+三句不离本行=san1,ju4,bu4,li2,ben3,hang2

+三句话不离本行=san1,ju4,hua4,bu4,li2,ben3,hang2

+三只手=san1,zhi1,shou3

+三天两宿=san1,tian1,liang3,xiu3

+三差两错=san1,cha1,liang3,cuo4

+三差五错=san1,cha1,wu3,cuo4

+三年五载=san1,nian2,wu3,zai3

+三座大山=san1,zuo4,da4,shan1

+三徙成都=san1,xi3,cheng2,dou1

+三战三北=san1,zhan1,san1,bei3

+三折肱为良医=san1,zhe2,gong1,wei2,liang2,yi1

+三更=san1,geng1

+三灾八难=san1,zai1,ba1,nan4

+三百六十行=san1,bai3,liu4,shi2,hang2

+三省吾身=san1,xing3,wu2,shen1

+三色堇=san1,se4,jin3

+三藏=san1,zang4

+三角裤衩=san1,jiao3,ku4,cha3

+三重=san1,chong2

+上=shang4,shang3

+上供=shang4,gong4

+上卷=shang4,juan4

+上去=shang3,qu4

+上吐下泻=shang4,tu3,xia4,xie4

+上声=shang3,sheng1

+上头=shang4,tou5

+上将=shang4,jiang4

+上岁数=shang4,sui4,shu4

+上当=shang4,dang4

+上当学乖=shang4,dang1,xue2,guai1

+上相=shang4,xiang4

+上调=shang4,diao4

+上铺=shang4,pu4

+下=xia4

+下不为例=xia4,bu4,wei2,li4

+下乘=xia4,sheng4

+下卷=xia4,juan4

+下处=xia4,chu3

+下头=xia4,tou5

+下巴颏=xia4,ba1,ke1

+下调=xia4,diao4

+下辈子=xia4,bei4,zi5

+下铺=xia4,pu4

+丌=qi2,ji1

+不=bu4,fou3

+不一会儿=bu2,yi4,hui4,er5

+不一定=bu4,yi1,ding4

+不一样=bu4,yi2,yang4

+不三不四=bu4,san1,bu4,si4

+不为五斗米折腰=bu4,wei4,wu3,dou3,mi3,zhe2,yao1

+不为人知=bu4,wei2,ren2,zhi1

+不为名不为利=bu4,wei4,ming2,bu4,wei4,li4

+不为已甚=bu4,wei2,yi3,shen4

+不义=bu4,yi4

+不义之财=bu4,yi4,zhi1,cai2

+不了=bu4,liao3

+不了不当=bu4,liao3,bu4,dang4

+不了了之=bu4,liao3,liao3,zhi1

+不了而了=bu4,liao3,er2,liao3

+不亦乐乎=bu4,yi4,le4,hu1

+不亦善夫=bu4,yi4,shan4,fu2

+不亦说乎=bu4,yi4,yue4,hu1

+不以为然=bu4,yi3,wei2,ran2

+不以语人=bu4,yi3,yu4,ren2

+不会=bu4,hui4

+不但=bu4,dan4

+不住=bu4,zhu4

+不值识者一笑=bu4,zhi2,shi2,zhe3,yi2,xiao4

+不兴=bu4,xing1

+不切实际=bu2,qie4,shi2,ji4

+不切题=bu2,qie4,ti2

+不到=bu4,dao4

+不到位=bu2,dao4,wei4

+不到长城非好汉=bu4,dao4,chang2,cheng2,fei1,hao3,han4

+不到黄河心不死=bu4,dao4,huang2,he2,xin1,bu4,si3

+不务正业=bu4,wu4,zheng4,ye4

+不动如山=bu2,dong4,ru2,shan1

+不卑不亢=bu4,bei1,bu4,kang4

+不厌其烦=bu4,yan4,qi2,fan2

+不可奈何=bu4,ke3,nai4,he2

+不可揆度=bu4,ke3,kui2,duo2

+不可胜举=bu4,ke3,sheng4,ju3

+不吝赐教=bu4,lin4,ci4,jiao4

+不含糊=bu4,han2,hu5

+不在乎=bu2,zai4,hu1

+不堪一击=bu4,kan1,yi1,ji1

+不声不吭=bu4,sheng1,bu4,keng1

+不大方便=bu2,da4,fang1,bian4

+不安分=bu4,an1,fen4

+不客气=bu2,ke4,qi4

+不寐=bu2,mei4

+不对=bu4,dui4

+不对劲=bu4,dui4,jin4

+不对头=bu4,dui4,tou2

+不对茬儿=bu2,dui4,cha2,er2

+不屑=bu4,xie4

+不屑一读=bu2,xie4,yi4,du2

+不屑一顾=bu4,xie4,yi1,gu4

+不屑于=bu2,xie4,yu2

+不屑意=bu2,xie4,yi4

+不屑教诲=bu4,xie4,jiao4,hui4

+不屑毁誉=bu4,xie4,hui3,yu4

+不差=bu4,cha4

+不差上下=bu4,cha1,shang4,xia4

+不差什么=bu4,cha4,shen2,me5

+不差毫厘=bu4,cha1,hao2,li2

+不差毫发=bu4,cha1,hao2,fa4

+不差累黍=bu4,cha1,lei3,shu3

+不干=bu4,gan4

+不干不净=bu4,gan1,bu4,jing4

+不幸而言中=bu4,xing4,er2,yan2,zhong4

+不当=bu2,dang4

+不当不正=bu4,dang1,bu4,zheng4

+不当人子=bu4,dang1,ren2,zi3

+不徇私情=bu4,xun2,si1,qing2

+不得了=bu4,de2,liao3

+不得已而为之=bu4,de2,yi3,er2,wei2,zhi1

+不必=bu4,bi4

+不忘宿志=bu2,wang4,su4,zhi4

+不忿=bu4,fen4

+不怀好意=bu4,huai2,hao3,yi4

+不悦=bu2,yue4

+不惜一切代价=bu4,xi1,yi2,qie4,dai4,jia4

+不愧=bu4,kui4

+不愧下学=bu4,kui4,xia4,xue2

+不愧不作=bu4,kui4,bu4,zuo4

+不愧不怍=bu4,kui4,bu4,zuo4

+不愧屋漏=bu4,kui4,wu1,lou4

+不慎=bu4,shen4

+不懈=bu4,xie4

+不战而胜=bu2,zhan4,er2,sheng4

+不战而降=bu2,zhan4,er2,xiang2

+不拔一毛=bu4,ba2,yi4,mao2

+不拘形迹=bu4,ju1,xing2,ji4

+不揣冒昧=bu4,chuai3,mao4,mei4

+不揪不睬=bu4,chou3,bu4,cai3

+不敢为天下先=bu4,gan3,wei2,tian1,xia4,xian1

+不敢越雷池一步=bu4,gan3,yue4,lei2,chi2,yi1,bu4

+不料=bu4,liao4

+不易之论=bu4,yi4,zhi1,lun4

+不是=bu2,shi4

+不是冤家不聚头=bu4,shi4,yuan1,jia1,bu4,ju4,tou2

+不是味儿=bu2,shi4,wei4,er2

+不根之谈=bu4,gen1,zhi1,tan2

+不正常=bu2,zheng4,chang2

+不治之症=bu4,zhi4,zhi1,zheng4

+不点儿=bu4,dian3,er5

+不爽累黍=bu4,shuang3,lei3,shu3

+不犯着=bu4,fan4,zhao2

+不甚了了=bu4,shen4,liao3,liao3

+不用说=bu2,yong4,shuo1

+不由得=bu4,you2,de5

+不痛不痒=bu4,tong4,bu4,yang3

+不登大雅=bu4,deng1,da4,ya3

+不登大雅之堂=bu4,deng1,da4,ya3,zhi1,tang2

+不相为谋=bu4,xiang1,wei2,mou2

+不省人事=bu4,xing3,ren2,shi4

+不着边际=bu4,zhuo2,bian1,ji4

+不知薡蕫=bu4,zhi1,ding1,dong3

+不紧不慢=bu4,jin3,bu4,man4

+不绝如发=bu4,jue2,ru2,fa4

+不置可否=bu4,zhi4,ke3,fou3

+不翼而飞=bu4,yi4,er2,fei1

+不老少=bu4,lao3,shao3

+不耐烦=bu4,nai4,fan2

+不肖=bu2,xiao4

+不肖子=bu2,xiao4,zi3

+不肖子孙=bu4,xiao4,zi3,sun1

+不胜=bu4,sheng4

+不胜其任=bu4,sheng4,qi2,ren4

+不胜其烦=bu4,sheng4,qi2,fan2

+不胜其苦=bu4,sheng4,qi2,ku3

+不胜杯杓=bu4,sheng4,bei1,shao2

+不胜枚举=bu4,sheng4,mei2,ju3

+不胜桮杓=bu4,sheng4,bei1,shao2

+不胫而走=bu4,jing4,er2,zou3

+不能登大雅之堂=bu4,neng2,deng1,da4,ya3,zhi1,tang2

+不自量力=bu4,zi4,liang4,li4

+不舒服=bu4,shu1,fu5

+不蔓不枝=bu4,man4,bu4,zhi1

+不要=bu4,yao4

+不要怕=bu2,yao4,pa4

+不要紧=bu4,yao4,jin3

+不要脸=bu4,yao4,lian3

+不见=bu4,jian4

+不见不散=bu2,jian4,bu2,san4

+不见了=bu2,jian4,le5

+不见天日=bu4,jian4,tian1,ri4

+不见得=bu2,jian4,de2

+不见棺材不下泪=bu4,jian4,guan1,cai2,bu4,xia4,lei4

+不见棺材不落泪=bu4,jian4,guan1,cai2,bu4,luo4,lei4

+不见经传=bu4,jian4,jing1,zhuan4

+不见舆薪=bu4,jian4,yu2,xin1

+不计其数=bu4,ji4,qi2,shu4

+不计得失=bu2,ji4,de2,shi1

+不记得=bu2,ji4,de2

+不论=bu4,lun4

+不论如何=bu2,lun4,ru2,he2

+不费吹灰之力=bu4,fei4,chui1,hui1,zhi1,li4

+不赖=bu4,lai4

+不赞一词=bu4,zan4,yi1,ci2

+不越雷池=bu4,yue4,lei2,chi2

+不足为凭=bu4,zu2,wei2,ping2

+不足为外人道=bu4,zu2,wei2,wai4,ren2,dao4

+不足为奇=bu4,zu2,wei2,qi2

+不足为意=bu4,zu2,wei2,yi4

+不足为据=bu4,zu2,wei2,ju4

+不足为法=bu4,zu2,wei2,fa3

+不足为训=bu4,zu2,wei2,xun4

+不辟斧钺=bu4,bi4,fu3,yue4

+不辩菽麦=bu4,bian4,shu1,mai4

+不适合=bu2,shi4,he2

+不透明=bu2,tou4,ming2

+不速之客=bu4,su4,zhi1,ke4

+不遂=bu4,sui2

+不遑启处=bu4,huang2,qi3,chu3

+不遑宁处=bu4,huang2,ning2,chu3

+不道德=bu4,dao4,de2

+不重要=bu2,zhong4,yao4

+不错=bu4,cuo4

+不问事实真相=bu2,wen4,shi4,shi2,zhen1,xiang4

+不问年龄大小=bu2,wen4,nian2,ling3,da4,xiao3

+不问是非曲直=bu2,wen4,shi4,fei1,qu1,zhi2

+不间不界=bu4,gan1,bu4,ga4

+不闻不问=bu4,wen2,bu4,wen4

+不随以止=bu4,sui2,yi3,zhi3

+不露圭角=bu4,lu4,gui1,jiao3

+不露声色=bu4,lu4,sheng1,se4

+不露形色=bu4,lu4,xing2,se4

+不露神色=bu4,lu4,shen2,se4

+不露锋芒=bu4,lu4,feng1,mang2

+不露锋铓=bu4,lu4,feng1,mang2

+不顾一切=bu4,gu4,yi1,qie4

+与=yu3,yu4,yu2

+与世无争=yu3,shi4,wu2,zheng1

+与世沉浮=yu3,shi4,chen2,fu2

+与人为善=yu3,ren2,wei2,shan4

+与会=yu4,hui4

+与时消息=yu3,shi2,xiao1,xi1

+与民更始=yu3,min2,geng1,shi3

+与民除害=yu3,min2,chu2,hai4

+与赛=yu4,sai4

+与闻=yu4,wen2

+丏=mian3

+丐=gai4

+丑=chou3

+丑媳妇总得见公婆=chou3,xi2,fu4,zong3,dei3,jian4,gong1,po2

+丑态毕露=chou3,tai4,bi4,lu4

+丑相=chou3,xiang4

+丑角=chou3,jue2

+丒=chou3

+专=zhuan1

+专差=zhuan1,chai1

+专心一致=zhuan1,xin1,yi1,zhi4

+专横=zhuan1,heng4

+专横跋扈=zhuan1,heng4,ba2,hu4

+且=qie3,ju1

+且住为佳=qie3,zhu4,wei2,jia1

+且食蛤蜊=qie3,shi2,ge2,li2

+丕=pi1

+世=shi4

+丗=shi4

+丘=qiu1

+丙=bing3

+业=ye4

+丛=cong2

+东=dong1

+东三省=dong1,san1,xing3

+东墙处子=dong1,qiang2,chu3,zi3

+东扯西拽=dong1,che3,xi1,zhuai1

+东抹西涂=dong1,mo3,xi1,tu2

+东方将白=dong1,fang1,jiang1,bai2

+东猜西揣=dong1,cai1,xi1,chuai3

+东莞=dong1,guan3

+东西南北人=dong1,xi1,nan2,bei3,ren2

+东西南北客=dong1,xi1,nan2,bei3,ke4

+东观西望=dong1,guan1,xi1,wang4

+东躲西藏=dong1,duo3,xi1,cang2

+东量西折=dong1,liang4,xi1,she2

+东阿=dong1,e1

+丝=si1

+丝恩发怨=si1,en1,fa4,yuan4

+丞=cheng2

+丞相=cheng2,xiang4

+丟=diu1

+丠=qiu1

+両=liang3

+丢=diu1

+丢三落四=diu1,san1,la4,si4

+丢下耙儿弄扫帚=diu1,xia4,pa2,er5,nong4,sao4,zhou3

+丢卒保车=diu1,zu2,bao3,ju1

+丢面子=diu1,mian4,zi5

+丣=you3

+两=liang3

+两世为人=liang3,shi4,wei2,ren2

+两口子=liang3,kou3,zi5

+严=yan2

+严丝合缝=yan2,si1,he2,feng4

+严处=yan2,chu3

+严查=yan2,cha2

+严禁=yan2,jin4

+並=bing4

+丧=sang4,sang1

+丧乱=sang1,luan4

+丧事=sang1,shi4

+丧假=sang1,jia4

+丧偶=sang4,ou3

+丧失=sang4,shi1

+丧家=sang1,jia1

+丧家之犬=sang4,jia1,zhi1,quan3

+丧家之狗=sang4,jia1,zhi1,gou3

+丧家犬=sang4,jia1,quan3

+丧尽天良=sang4,jin4,tian1,liang2

+丧服=sang1,fu2

+丧权=sang4,quan2

+丧气=sang4,qi4

+丧生=sang4,sheng1

+丧礼=sang1,li3

+丧胆=sang4,dan3

+丧胆销魂=sang4,dan3,xiao1,hun2

+丧葬=sang1,zang4

+丧钟=sang1,zhong1

+丧魂落魄=sang4,hun2,luo4,po4

+丨=gun3

+丩=jiu1

+个=ge4,ge3

+个头儿=ge4,tou5,er5

+个子=ge4,zi5

+丫=ya1

+丫头=ya1,tou5

+丫杈=ya1,cha4

+丬=pan2

+中=zhong1,zhong4

+中伤=zhong4,shang1

+中卷=zhong1,juan4

+中奖=zhong4,jiang3

+中将=zhong1,jiang4

+中弹=zhong4,dan4

+中彩=zhong4,cai3

+中招=zhong4,zhao1

+中暑=zhong4,shu3

+中标=zhong4,biao1

+中毒=zhong4,du2

+中牟=zhong1,mu4

+中的=zhong1,di4

+中缝=zhong1,feng4

+中行=zhong1,hang2

+中计=zhong4,ji4

+中靶=zhong4,ba3

+中风=zhong4,feng1

+中风瘫痪=zhong4,feng1,tan1,huan4

+丮=ji3

+丯=jie4

+丰=feng1

+丰屋蔀家=feng1,wu1,bu4,jia1

+丱=guan4,kuang4

+串=chuan4

+丳=chan3

+临=lin2

+临危不惧=lin2,wei1,bu4,ju4

+临帖=lin2,tie4

+临敌易将=lin2,di2,yi4,jiang4

+临深履薄=lin2,shen1,lv3,bo2

+临难=lin2,nan4

+临难不恐=lin2,nan4,bu4,kong3

+临难不惧=lin2,nan4,bu4,ju4

+临难不避=lin2,nan4,bu4,bi4

+临难无慑=lin2,nan4,wu2,she4

+临难苟免=lin2,nan4,gou3,mian3

+临难铸兵=lin2,nan4,zhu4,bing1

+丵=zhuo2

+丶=zhu3

+丷=ba1

+丸=wan2

+丸子=wan2,zi5

+丹=dan1

+丹凤朝阳=dan1,feng4,chao2,yang2

+丹参=dan1,shen1

+丹心碧血=dan1,xin1,bi4,xue4

+为=wei4,wei2

+为主=wei2,zhu3

+为之语塞=wei2,zhi1,yu3,se4

+为五斗米折腰=wei4,wu3,dou3,mi3,zhe2,yao1

+为人=wei2,ren2

+为人作嫁=wei4,ren2,zuo4,jia4

+为人师表=wei2,ren2,shi1,biao3

+为人说项=wei4,ren2,shuo1,xiang4

+为什么=wei4,shen2,me5

+为仁不富=wei2,ren2,bu4,fu4

+为伍=wei2,wu3

+为啥=wei4,sha2

+为善最乐=wei2,shan4,zui4,le4

+为国为民=wei4,guo2,wei4,min2

+为国捐躯=wei4,guo2,juan1,qu1

+为好成歉=wei2,hao3,cheng2,qian4

+为害=wei2,hai4

+为富不仁=wei2,fu4,bu4,ren2

+为山止篑=wei2,shan1,zhi3,kui4

+为德不卒=wei2,de2,bu4,zu2

+为德不终=wei2,de2,bu4,zhong1

+为恶不悛=wei2,e4,bu4,quan1

+为患=wei2,huan4

+为所欲为=wei2,suo3,yu4,wei2

+为政=wei2,zheng4

+为数=wei2,shu4

+为时=wei2,shi2

+为时过早=wei2,shi2,guo4,zao3

+为期=wei2,qi1

+为止=wei2,zhi3

+为此=wei4,ci3

+为民父母=wei2,min2,fu4,mu3

+为法自弊=wei2,fa3,zi4,bi4

+为生=wei2,sheng1

+为着=wei4,zhe5

+为虎作伥=wei4,hu3,zuo4,chang1

+为虺弗摧=wei2,hui3,fu2,cui1

+为蛇添足=wei2,she2,tian1,zu2

+为蛇画足=wei2,she2,hua4,zu2

+为裘为箕=wei2,qiu2,wei2,ji1

+为限=wei2,xian4

+为难=wei2,nan2

+为非作恶=wei2,fei1,zuo4,e4

+为非作歹=wei2,fei1,zuo4,dai3

+为饥寒所迫=wei2,ji1,han2,suo3,po4

+为首=wei2,shou3

+为鬼为蜮=wei2,gui3,wei2,yu4

+主=zhu3

+主仆=zhu3,pu2

+主将=zhu3,jiang4

+主干=zhu3,gan4

+主干线=zhu3,gan4,xian4

+主角=zhu3,jue2

+主调=zhu3,diao4

+丼=jing3

+丽=li4,li2

+丽水=li2,shui3

+丽都=li4,du1

+举=ju3

+举不胜举=ju3,bu4,sheng4,ju3

+举手相庆=ju3,shou3,xiang1,qing4

+举措不当=ju3,cuo4,bu4,dang4

+丿=pie3

+乀=fu2

+乁=yi2,ji2

+乂=yi4

+乃=nai3

+乄=wu3

+久=jiu3

+久要不忘=jiu3,yao1,bu4,wang4

+乆=jiu3

+乇=tuo1,zhe2

+么=me5,mo2,ma5,yao1

+义=yi4

+义薄云天=yi4,bo2,yun2,tian1

+乊=yi1

+之=zhi1

+乌=wu1

+乌头白马生角=wu1,tou2,bai2,ma3,sheng1,jiao3

+乌拉=wu4,la5

+乍=zha4

+乎=hu1

+乏=fa2

+乏累=fa2,lei4

+乐=le4,yue4,yao4,lao4

+乐善好施=le4,shan4,hao4,shi1

+乐器=yue4,qi4

+乐团=yue4,tuan2

+乐坛=yue4,tan2

+乐子=le4,zi5

+乐官=yue4,guan1

+乐山乐水=yao4,shan1,yao4,shui3

+乐工=yue4,gong1

+乐师=yue4,shi1

+乐府=yue4,fu3

+乐府诗=yue4,fu3,shi1

+乐律=yue4,lv4

+乐得=le4,de5

+乐感=yue4,gan3

+乐户=yue4,hu4

+乐曲=yue4,qu3

+乐歌=yue4,ge1

+乐毅=yue4,yi4

+乐池=yue4,chi2

+乐清=yue4,qing1

+乐章=yue4,zhang1

+乐舞=yue4,wu3

+乐谱=yue4,pu3

+乐迷=yue4,mi2

+乐道好古=le4,dao4,hao4,gu3

+乐队=yue4,dui4

+乐音=yue4,yin1

+乑=yin2

+乒=ping1

+乓=pang1

+乔=qiao2

+乕=hu3

+乖=guai1

+乗=cheng2,sheng4

+乘=cheng2,sheng4

+乘便=cheng2,bian4

+乘兴=cheng2,xing4

+乘势=cheng2,shi4

+乘壶=sheng4,hu2

+乘客=cheng2,ke4

+乘数=cheng2,shu4

+乘肥衣轻=cheng2,fei2,yi4,qing1

+乘舆=sheng4,yu2

+乘舆播越=cheng2,yu2,bo1,yue4

+乘间伺隙=cheng2,jian4,si4,xi4

+乘风兴浪=cheng2,feng1,xing1,lang4

+乘风破浪=cheng2,feng1,po4,lang4

+乙=yi3

+乚=hao2,yi3

+乛=yi3

+乜=mie1,nie4

+乜斜=nie4,xie2

+乜斜缠帐=nie4,xie2,chan2,zhang4

+九=jiu3

+九大行星=jiu3,da4,hang2,xing1

+九曲回肠=jiu3,qu1,hui2,chang2

+九死一生=jiu3,si3,yi1,sheng1

+九牛一毛=jiu3,niu2,yi1,mao2

+九牛拉不转=jiu3,niu2,la1,bu4,zhuan4

+九蒸三熯=jiu3,zheng1,san1,han4

+九行八业=jiu3,hang2,ba1,ye4

+九转功成=jiu3,zhuan4,gong1,cheng2

+九重霄=jiu3,chong2,xiao1

+九鼎不足为重=jiu3,ding3,bu4,zu2,wei2,zhong4

+乞=qi3

+乞降=qi3,xiang2

+也=ye3

+习=xi2

+习以为常=xi2,yi3,wei2,chang2

+习焉不察=xi2,yan1,bu4,cha2

+乡=xiang1

+乡曲=xiang1,qu1

+乢=gai4

+乣=jiu3

+乤=xia4

+书=shu1

+书卷=shu1,juan4

+书卷气=shu1,juan4,qi4

+书缺有间=shu1,que1,you3,jian4

+书背=shu1,bei4

+乧=dou3

+乨=shi3

+乩=ji1

+乪=nang2

+乫=jia1

+乬=ju4

+乭=shi2

+乮=mao3

+乯=hu1

+买=mai3

+买得起=mai3,de5,qi3

+买椟还珠=mai3,du2,huan2,zhu1

+乱=luan4

+乱七八糟=luan4,qi1,ba1,zao1

+乱作胡为=luan4,zuo4,hu2,wei2

+乱哄哄=luan4,hong1,hong1

+乱成一团=luan4,cheng2,yi1,tuan2

+乱箭攒心=luan4,jian4,cuan2,xin1

+乳=ru3

+乳晕=ru3,yun4

+乳臭=ru3,xiu4

+乳臭未除=ru3,xiu4,wei4,chu2

+乴=xue2

+乵=yan3

+乶=fu3

+乷=sha1

+乸=na3

+乹=qian2

+乺=suo3

+乻=yu2

+乼=zhu4

+乽=zhe3

+乾=qian2,gan1

+乿=zhi4,luan4

+亀=gui1

+亁=qian2

+亂=luan4

+亃=lin3,lin4

+亄=yi4

+亅=jue2

+了=le5,liao3

+了不可见=liao3,bu4,ke3,jian4

+了不得=liao3,bu4,de2

+了不起=liao3,bu4,qi3

+了不长进=liao3,bu4,zhang3,jin4

+了了=liao3,liao3

+了了可见=liao3,liao3,ke3,jian4

+了事=liao3,shi4

+了却=liao3,que4

+了如指掌=liao3,ru2,zhi3,zhang3

+了局=liao3,ju2

+了当=liao3,dang4

+了得=liao3,de2

+了悟=liao3,wu4

+了断=liao3,duan4

+了无=liao3,wu2

+了无惧色=liao3,wu2,ju4,se4

+了望台=liao4,wang4,tai2

+了然=liao3,ran2

+了然于胸=liao3,ran2,yu2,xiong1

+了然无闻=liao3,ran2,wu2,wen2

+了结=liao3,jie2

+了若指掌=liao3,ruo4,zhi3,zhang3

+了解=liao3,jie3

+了账=liao3,zhang4

+了身达命=liao3,shen1,da2,ming4

+亇=ge4,ma1

+予=yu2,yu3

+予人口实=yu3,ren2,kou3,shi2

+予以=yu3,yi3

+予取予求=yu2,qu3,yu2,qiu2

+予夺生杀=yu3,duo2,sheng1,sha1

+予齿去角=yu3,chi3,qu4,jiao3

+争=zheng1

+争得=zheng1,de5

+亊=shi4

+事=shi4

+事与心违=shi4,yu3,xin1,wei2

+事假=shi4,jia4

+事危累卵=shi4,wei1,lei3,luan3

+事后诸葛亮=shi4,hou4,zhu1,ge2,liang4

+事在人为=shi4,zai4,ren2,wei2

+二=er4

+二万五千里长征=er4,wan4,wu3,qian1,li3,chang2,zheng1

+二人转=er4,ren2,zhuan4

+二十八宿=er4,shi2,ba1,xiu4

+二竖为虐=er4,shu4,wei2,nve4

+二重=er4,chong2

+二重奏=er4,chong2,zou4

+二重性=er4,chong2,xing4

+二重根=er4,chong2,gen1

+亍=chu4

+于=yu2

+于今为烈=yu2,jin1,wei2,lie4

+于家为国=yu2,jia1,wei2,guo2

+于思=yu2,sai1

+亏=kui1

+亏得=kui1,de5

+亏累=kui1,lei3

+亐=yu2

+云=yun2

+云兴霞蔚=yun2,xing1,xia2,wei4

+云屯席卷=yun2,tun2,xi2,juan3

+云朝雨暮=yun2,zhao1,yu3,mu4

+云泥之差=yun2,ni2,zhi1,cha1

+互=hu4

+互为因果=hu4,wei2,yin1,guo3

+互为表里=hu4,wei2,biao3,li3

+互见=hu4,xian4

+亓=qi2

+五=wu3

+五侯七贵=wu3,hou4,qi1,gui4

+五侯蜡烛=wu3,hou4,la4,zhu2

+五斗折腰=wu3,dou3,zhe2,yao1

+五斗柜=wu3,dou3,gui4

+五斗橱=wu3,dou3,chu2

+五方杂处=wu3,fang1,za2,chu3

+五更=wu3,geng1

+五石六鹢=wu3,shi2,liu4,yi4

+五羖大夫=wu3,gu3,da4,fu1

+五脊六兽=wu3,ji3,liu4,shou4

+五色相宣=wu3,se4,xiang1,xuan1

+五行八作=wu3,hang2,ba1,zuo4

+五行并下=wu3,hang2,bing4,xia4

+五行生克=wu3,xing2,sheng1,ke4

+五金行=wu3,jin1,hang2

+五陵年少=wu3,ling2,nian2,shao4

+井=jing3

+井底虾蟆=jing3,di3,xia1,ma2

+井底蛤蟆=jing3,di3,ha2,ma2

+亖=si4

+亗=sui4

+亘=gen4

+亘古奇闻=gen4,gu3,qi2,wen2

+亙=gen4

+亚=ya4

+亚得里亚海=ya4,de5,li3,ya4,hai3

+亚肩叠背=ya4,jian1,die2,bei4

+亚肩迭背=ya4,jian1,die2,bei4

+些=xie1,suo4

+亜=ya4

+亝=qi2,zhai1

+亞=ya4,ya1

+亟=ji2,qi4

+亠=tou2

+亡=wang2,wu2

+亡国大夫=wang2,guo2,da4,fu1

+亡魂失魄=wang2,hun2,shi1,po4

+亢=kang4

+亣=da4

+交=jiao1

+交卷=jiao1,juan4

+交响乐=jiao1,xiang3,yue4

+交差=jiao1,chai1

+交恶=jiao1,wu4

+交白卷=jiao1,bai2,juan4

+交行=jiao1,hang2

+交还=jiao1,huan2

+交通梗塞=jiao1,tong1,geng3,se4

+亥=hai4

+亦=yi4

+产=chan3

+产假=chan3,jia4

+亨=heng1,peng1

+亩=mu3

+亪=ye5

+享=xiang3

+京=jing1

+京片子=jing1,pian4,zi5

+京都=jing1,du1

+亭=ting2

+亭子=ting2,zi5

+亮=liang4

+亮相=liang4,xiang4

+亯=xiang3

+亰=jing1

+亱=ye4

+亲=qin1,qing4

+亲切=qin1,qie4

+亲家=qing4,jia1

+亲密无间=qin1,mi4,wu2,jian4

+亳=bo2

+亴=you4

+亵=xie4

+亶=dan3,dan4

+亷=lian2

+亸=duo3

+亹=wei3,men2

+亹亹不倦=wei3,wei3,bu4,juan4

+人=ren2

+人不可以貌相=ren2,bu4,ke3,yi3,mao4,xiang4

+人不可貌相=ren2,bu4,ke3,mao4,xiang4

+人中狮子=ren2,zhong1,shi1,zi3

+人为=ren2,wei2

+人为刀俎=ren2,wei2,dao1,zu3

+人事不省=ren2,shi4,bu4,xing3

+人们=ren2,men5

+人参=ren2,shen1

+人口总数=ren2,kou3,zong3,shu4

+人多阙少=ren2,duo1,que1,shao3

+人心向背=ren2,xin1,xiang4,bei4

+人才难得=ren2,cai2,nan2,de2

+人数=ren2,shu4

+人数众多=ren2,shu4,zhong4,duo1

+人模狗样=ren2,mu2,gou3,yang4

+人涉卬否=ren2,she4,ang2,fou3

+人满为患=ren2,man3,wei2,huan4

+人生朝露=ren2,sheng1,chao2,lu4

+人生自古谁无死=ren2,sheng1,zi4,gu3,shui2,wu2,si3

+人给家足=ren2,ji3,jia1,zu2

+人自为战=ren2,zi4,wei2,zhan4

+人自为政=ren2,zi4,wei2,zheng4

+人行道=ren2,xing2,dao4

+人言藉藉=ren2,yan2,ji2,ji2

+人足家给=ren2,zu2,jia1,ji3

+人轧人=ren2,ga2,ren2

+亻=ren2

+亼=ji2

+亽=ji2

+亾=wang2

+亿=yi4

+什=shen2,shi2

+什么=shen2,me5

+什件儿=shi2,jian4,er2

+什伍东西=shi2,wu3,dong1,xi1

+什围伍攻=shi2,wei2,wu3,gong1

+什物=shi2,wu4

+什袭以藏=shi2,xi2,yi3,cang2

+什袭珍藏=shi2,xi2,zhen1,cang2

+什袭而藏=shi2,xi2,er2,cang2

+什锦=shi2,jin3

+仁=ren2

+仂=le4

+仃=ding1

+仄=ze4

+仅=jin3,jin4

+仆=pu1,pu2

+仆人=pu2,ren2

+仆仆亟拜=pu2,pu2,qi4,bai4

+仆仆道途=pu2,pu2,dao4,tu2

+仆仆风尘=pu2,pu2,feng1,chen2

+仆从=pu2,cong2

+仆妇=pu2,fu4

+仆役=pu2,yi4

+仇=chou2,qiu2

+仇姓=qiu2,xing4

+仈=ba1

+仉=zhang3

+今=jin1

+今朝=jin1,zhao1

+今朝有酒今朝醉=jin1,zhao1,you3,jiu3,jin1,zhao1,zui4

+介=jie4

+仌=bing1

+仍=reng2

+从=cong2,zong4

+从一而终=cong2,yi1,er2,zhong1

+从从容容=cong2,cong2,rong2,rong2

+从俗就简=cong2,su2,jiu4,jian3

+从容不迫=cong2,rong2,bu4,po4

+从容自在=cong2,rong2,zi4,zai4

+从容自若=cong2,rong2,zi4,ruo4

+仏=fo2

+仐=jin1,san3

+仑=lun2

+仒=bing1

+仓=cang1

+仓卒=cang1,cu4

+仓卒主人=cang1,cu4,zhu3,ren2

+仓卒之际=cang1,cu4,zhi1,ji4

+仔=zi3,zi1,zai3

+仔猪=zai3,zhu1

+仔鸡=zai3,ji1

+仕=shi4

+他=ta1

+他们=ta1,men5

+他们俩=ta1,men5,lia3

+他们的=ta1,men5,de5

+他们自己=ta1,men5,zi4,ji3

+他们自己的=ta1,men5,zi4,ji3,de5

+他处=ta1,chu3

+仗=zhang4

+付=fu4

+付之一炬=fu4,zhi1,yi1,ju4

+仙=xian1

+仙露明珠=xian1,lu4,ming2,zhu1

+仚=xian1

+仛=tuo1,cha4,duo2

+仜=hong2

+仝=tong2

+仞=ren4

+仟=qian1

+仠=gan3,han4

+仡=yi4,ge1

+仡佬族=ge1,lao3,zu2

+仢=bo2

+代=dai4

+代为=dai4,wei2

+代为说项=dai4,wei2,shuo1,xiang4

+代人说项=dai4,ren2,shuo1,xiang4

+代数=dai4,shu4

+代数和=dai4,shu4,he2

+代数式=dai4,shu4,shi4

+代数方程=dai4,shu4,fang1,cheng2

+令=ling4,ling2,ling3

+令人发指=ling4,ren2,fa4,zhi3

+令人捧腹=ling4,ren2,peng3,fu4

+令原之戚=ling2,yuan2,zhi1,qi1

+令狐=ling2,hu2

+以=yi3

+以一当十=yi3,yi1,dang1,shi2

+以不济可=yi3,fou3,ji4,ke3

+以为=yi3,wei2

+以书为御=yi3,shu1,wei2,yu4

+以人为鉴=yi3,ren2,wei2,jian4

+以人为镜=yi3,ren2,wei2,jing4

+以冠补履=yi3,guan1,bu3,lv3

+以利累形=yi3,li4,lei3,xing2

+以升量石=yi3,sheng1,liang2,dan4

+以古为鉴=yi3,gu3,wei2,jian4

+以古为镜=yi3,gu3,wei2,jing4

+以夜继朝=yi3,ye4,ji4,zhao1

+以大恶细=yi3,da4,wu4,xi4

+以天下为己任=yi3,tian1,xia4,wei2,ji3,ren4

+以守为攻=yi3,shou3,wei2,gong1

+以宫笑角=yi3,gong1,xiao4,jue2

+以己度人=yi3,ji3,duo2,ren2

+以微知着=yi3,wei1,zhi1,zhu4

+以忍为阍=yi3,ren3,wei2,hun1

+以意为之=yi3,yi4,wei2,zhi1

+以慎为键=yi3,shen4,wei2,jian4

+以攻为守=yi3,gong1,wei2,shou3

+以日为年=yi3,ri4,wei2,nian2

+以毁为罚=yi3,hui3,wei2,fa2

+以毛相马=yi3,mao2,xiang4,ma3

+以水洗血=yi3,shui3,xi3,xue4

+以水济水=yi3,shui3,ji4,shui3

+以法为教=yi3,fa3,wei2,jiao4

+以泽量尸=yi3,ze2,liang2,shi1

+以牙还牙=yi3,ya2,huan2,ya2

+以疏间亲=yi3,shu1,jian4,qin1

+以白为黑=yi3,bai2,wei2,hei1

+以眼还眼=yi3,yan3,huan2,yan3

+以筌为鱼=yi3,quan2,wei2,yu2

+以紫为朱=yi3,zi3,wei2,zhu1

+以耳为目=yi3,er3,wei2,mu4

+以茶当酒=yi3,cha2,dang4,jiu3

+以蠡测海=yi3,li2,ce4,hai3

+以血洗血=yi3,xue4,xi3,xue4

+以规为瑱=yi3,gui1,wei2,tian4

+以言为讳=yi3,yan2,wei2,hui4

+以誉为赏=yi3,yu4,wei2,shang3

+以讹传讹=yi3,e2,chuan2,e2

+以身许国=yi3,shen1,xu3,guo2

+以还=yi3,huan2

+以退为进=yi3,tui4,wei2,jin4

+以邻为壑=yi3,lin2,wei2,he4

+以郄视文=yi3,xi4,shi4,wen2

+以鹿为马=yi3,lu4,wei2,ma3

+以黑为白=yi3,hei1,wei2,bai2

+仦=chao4

+仧=chang2,zhang3

+仨=sa1

+仩=chang2

+仪=yi2

+仫=mu4

+们=men2

+仭=ren4

+仮=fan3

+仯=chao4,miao3

+仰=yang3,ang2

+仰不愧天=yang3,bu4,kui4,tian1

+仰事俯畜=yang3,shi4,fu3,xu4

+仰屋着书=yang3,wu1,zhu4,shu1

+仰给=yang3,ji3

+仱=qian2

+仲=zhong4

+仳=pi3,pi2

+仴=wo4

+仵=wu3

+件=jian4

+件数=jian4,shu4

+价=jia4,jie4,jie5

+仸=yao3,fo2

+仹=feng1

+仺=cang1

+任=ren4,ren2

+任丘=ren2,qiu1

+任姓=ren2,xing4

+任达不拘=ren4,da2,bu4,ju1

+仼=wang2

+份=fen4,bin1

+份子=fen4,zi5

+仾=di1

+仿=fang3

+仿佛=fang3,fu2

+伀=zhong1

+企=qi3

+伂=pei4

+伃=yu2

+伄=diao4

+伅=dun4

+伆=wen3

+伇=yi4

+伈=xin3

+伉=kang4

+伊=yi1

+伋=ji2

+伌=ai4

+伍=wu3

+伎=ji4,qi2

+伏=fu2

+伏帖=fu2,tie1

+伏瘕=fu3,jia3

+伏而咶天=fu2,er2,shi4,tian1

+伏虎降龙=fu2,hu3,xiang2,long2

+伐=fa2

+休=xiu1,xu3

+休假=xiu1,jia4

+伒=jin4,yin2

+伓=pi1

+伔=dan3

+伕=fu1

+伖=tang3

+众=zhong4

+众啄同音=zhong4,zhuo2,tong2,yin1

+众好众恶=zhong4,hao4,zhong4,wu4

+众数=zhong4,shu4

+众星攒月=zhong4,xing1,cuan2,yue4

+众毛攒裘=zhong4,mao2,cuan2,qiu2

+众生相=zhong4,sheng1,xiang4

+众矢之的=zhong4,shi3,zhi1,di4

+优=you1

+优孟衣冠=you1,meng4,yi1,guan1

+伙=huo3

+会=hui4,kuai4

+会儿=hui4,er5

+会计=kuai4,ji4

+伛=yu3

+伛偻=yu3,lv3

+伜=cui4

+伝=yun2

+伞=san3

+伟=wei3

+传=chuan2,zhuan4

+传为佳话=chuan2,wei2,jia1,hua4

+传为笑柄=chuan2,wei2,xiao4,bing3

+传为笑谈=chuan2,wei2,xiao4,tan2

+传为美谈=chuan2,wei2,mei3,tan2

+传单炸弹=chuan2,dan1,zha4,dan4

+传承=chuan2,cheng2

+传略=zhuan4,lve4

+传纸条=chuan2,zhi3,tiao2

+传记=zhuan4,ji4

+传说=chuan2,shuo1

+传问=chuan2,wen4

+传闻=chuan2,wen2

+传风扇火=chuan2,feng1,shan1,huo3

+传风搧火=chuan2,feng1,shan1,huo3

+伡=che1,ju1

+伢=ya2

+伣=qian4

+伤=shang1

+伤言扎语=shang1,yan2,zha1,yu3

+伥=chang1

+伦=lun2

+伧=cang1,chen5

+伨=xun4

+伩=xin4

+伪=wei3

+伪君子=wei3,jun1,zi3

+伫=zhu4

+伬=chi3

+伭=xian2,xuan2

+伮=nu2,nu3

+伯=bo2,bai3,ba4

+伯乐一顾=bo2,le4,yi1,gu4

+伯乐相马=bo2,le4,xiang4,ma3

+伯伯=bo2,bo5

+估=gu1,gu4

+估摸=gu1,mo5

+估衣=gu4,yi1

+估量=gu1,liang5

+伱=ni3

+伲=ni3,ni4

+伳=xie4

+伴=ban4

+伵=xu4

+伶=ling2

+伷=zhou4

+伸=shen1

+伸手不见五指=shen1,shou3,bu4,jian4,wu3,zhi3

+伸曲=shen1,qu1

+伹=qu1

+伺=ci4,si4

+伺侯=ci4,hou4

+伺候=ci4,hou4

+伺机=si4,ji1

+伺瑕导蠙=si4,xia2,dao3,pin2

+伺瑕导隙=si4,xia2,dao3,xi4

+伺瑕抵蠙=si4,xia2,di3,pin2

+伺瑕抵隙=si4,xia2,di3,xi4

+伺隙=si4,xi4

+伻=beng1

+似=si4,shi4

+似的=shi4,de5

+伽=jia1,qie2,ga1

+伽蓝=qie2,lan2

+伽马=ga1,ma3

+伾=pi1

+伿=yi4

+佀=si4

+佁=yi3,chi4

+佂=zheng1

+佃=dian4,tian2

+佄=han1,gan4

+佅=mai4

+但=dan4

+佇=zhu4

+佈=bu4

+佉=qu1

+佊=bi3

+佋=zhao1,shao4

+佌=ci3

+位=wei4

+低=di1

+低唱浅斟=di1,chang4,qian3,zhen1

+低唱浅酌=di1,chang4,qian3,zhuo2

+低情曲意=di1,qing2,qu1,yi4

+低血压=di1,xue4,ya1

+低调=di1,diao4

+住=zhu4

+住一宿=zhu4,yi4,xiu3

+佐=zuo3

+佑=you4

+佒=yang3

+体=ti3,ti1

+体己=ti1,ji5

+体胀系数=ti3,zhang4,xi4,shu4

+佔=zhan4,dian1

+何=he2,he1,he4

+何乐不为=he2,le4,bu4,wei2

+何乐而不为=he2,le4,er2,bu4,wei2

+何处=he2,chu3

+何所不为=he2,suo3,bu4,wei2

+何曾=he2,zeng1

+何足为奇=he2,zu2,wei2,qi2

+佖=bi4

+佗=tuo2

+佘=she2

+余=yu2

+余勇可贾=yu2,yong3,ke3,gu3

+余数=yu2,shu4

+佚=yi4,die2

+佛=fo2,fu2,bi4,bo2

+佛头著粪=fo2,tou2,zhuo2,fen4

+作=zuo4

+作为=zuo4,wei2

+作乐=zuo4,yue4

+作兴=zuo4,xing1

+作坊=zuo1,fang5

+作嫁衣裳=zuo4,jia4,yi1,shang1

+作弄=zuo1,nong4

+作数=zuo4,shu4

+作歹为非=zuo4,dai3,wei2,fei1

+作浪兴风=zuo4,lang4,xing1,feng1

+佝=gou1,kou4

+佝偻=gou1,lou2

+佝偻着腰=gou1,lou2,zhe5,yao1

+佞=ning4

+佟=tong2

+你=ni3

+你们=ni3,men5

+你们俩=ni3,men5,lia3

+你们的=ni3,men5,de5

+你们自己=ni3,men5,zi4,ji3

+你们自己的=ni3,men5,zi4,ji3,de5

+佡=xian1

+佢=qu2

+佣=yong1,yong4

+佣中佼佼=yong4,zhong1,jiao3,jiao3

+佣人=yong4,ren2

+佣工=yong4,gong1

+佣金=yong4,jin1

+佣钱=yong4,qian2

+佤=wa3

+佥=qian1

+佦=you4

+佧=ka3

+佨=bao1

+佩=pei4

+佪=hui2,huai2

+佫=ge2

+佬=lao3

+佭=xiang2

+佮=ge2

+佯=yang2

+佰=bai3

+佱=fa3

+佲=ming3

+佳=jia1

+佳人薄命=jia1,ren2,bo2,ming4

+佴=er4,nai4

+併=bing4

+佶=ji2

+佷=hen3

+佸=huo2

+佹=gui3

+佺=quan2

+佻=tiao1

+佻薄=tiao1,bo2

+佼=jiao3

+佽=ci4

+佾=yi4

+使=shi3

+使出浑身解数=shi3,chu1,hun2,shen1,xie4,shu4

+使得=shi3,de5

+使性子=shi3,xing4,zi5

+使羊将狼=shi3,yang2,jiang4,lang2

+侀=xing2

+侁=shen1

+侂=tuo1

+侃=kan3

+侃大山=kan3,da4,shan1

+侄=zhi2

+侄儿=zhi2,er5

+侄女儿=zhi2,nv3,er5

+侄子=zhi2,zi5

+侅=gai1

+來=lai2

+侇=yi2

+侈=chi3

+侉=kua3

+侉子=kua3,zi5

+侊=gong1

+例=li4

+例假=li4,jia4

+例子=li4,zi5

+例直禁简=li4,zhi2,jin4,jian3

+侌=yin1

+侍=shi4

+侍应生=shi4,ying4,sheng1

+侎=mi3

+侏=zhu1

+侏儒一节=zhu1,ru2,yi1,jie2

+侏儒观戏=zhu1,ru2,guan1,xi4

+侐=xu4

+侑=you4

+侒=an1

+侓=lu4

+侔=mou2

+侔色揣称=mou2,se4,chuai3,chen4

+侕=er2

+侖=lun2

+侗=dong4,tong2,tong3

+侘=cha4

+侙=chi4

+侚=xun4

+供=gong4,gong1

+供不应求=gong1,bu4,ying4,qiu2

+供人=gong1,ren2

+供佛=gong4,fo2

+供养=gong4,yang3

+供品=gong4,pin3

+供奉=gong4,feng4

+供孩子上学=gong1,hai2,zi5,shang4,xue2

+供应=gong1,ying4

+供应站=gong1,ying4,zhan4

+供料=gong1,liao4

+供旅客休息=gong1,lv3,ke4,xiu1,xi1

+供暖=gong1,nuan3

+供果=gong4,guo3

+供桌=gong4,zhuo1

+供欣赏=gong1,xin1,shang3

+供气=gong1,qi4

+供水=gong1,shui3

+供求=gong1,qiu2

+供状=gong4,zhuang4

+供献=gong4,xian4

+供电=gong1,dian4

+供给=gong1,ji3

+供职=gong4,zhi2

+供认=gong4,ren4

+供认不讳=gong4,ren4,bu4,hui4

+供词=gong4,ci2

+供读者参考=gong1,du2,zhe3,can1,kao3

+供过于求=gong1,guo4,yu2,qiu2

+供销=gong1,xiao1

+供需=gong1,xu1

+侜=zhou1

+侜张为幻=zhou1,zhang1,wei2,huan4

+依=yi1

+依丱附木=yi1,kuang4,fu4,mu4

+依头缕当=yi1,tou2,lv3,dang4

+依阿取容=yi1,e1,qu3,rong2

+侞=ru2

+侟=cun2,jian4

+侠=xia2

+価=si4

+侢=dai4

+侣=lv3

+侤=ta5

+侥=jiao3,yao2

+侥幸=jiao3,xing4

+侦=zhen1

+侦查=zhen1,cha2

+侦缉=zhen1,ji1

+侧=ce4,ze4,zhai1

+侧歪=zhai1,wai1

+侨=qiao2

+侩=kuai4

+侪=chai2

+侫=ning4

+侬=nong2

+侭=jin3

+侮=wu3

+侯=hou2,hou4

+侰=jiong3

+侱=cheng3,ting3

+侲=zhen4,zhen1

+侳=zuo4

+侴=hao4

+侵=qin1

+侵占=qin1,zhan4

+侶=lv3

+侷=ju2

+侸=shu4,dou1

+侹=ting3

+侺=shen4

+侻=tuo2,tui4

+侼=bo2

+侽=nan2

+侾=xiao1

+便=bian4,pian2

+便了=bian4,liao3

+便人=bian4,ren2

+便佞=pian2,ning4

+便嬖=pian2,bi4

+便宜=pian2,yi5

+便宜从事=bian4,yi2,cong2,shi4

+便宜行事=bian4,yi2,xing2,shi4

+便宜货=bian4,yi2,huo4

+便当=bian4,dang4

+便溺=bian4,niao4

+便血=bian4,xie3

+俀=tui3

+俁=yu3

+係=xi4

+促=cu4

+俄=e2

+俅=qiu2

+俆=xu2

+俇=guang4

+俈=ku4

+俉=wu4

+俊=jun4

+俋=yi4

+俌=fu3

+俍=liang2

+俎=zu3

+俏=qiao4,xiao4

+俏头=qiao4,tou5

+俐=li4

+俑=yong3

+俒=hun4

+俓=jing4

+俔=qian4

+俕=san4

+俖=pei3

+俗=su2

+俘=fu2

+俙=xi1

+俚=li3

+俛=fu3

+俛拾地芥=fu3,shi2,di4,jie4

+俛首帖耳=fu3,shou3,tie1,er3

+俜=ping1

+保=bao3

+保不住=bao3,bu2,zhu4

+保得住=bao3,de5,zhu4

+俞=yu2,yu4,shu4

+俟=si4,qi2

+俠=xia2

+信=xin4,shen1

+信号弹=xin4,hao4,dan4

+信差=xin4,chai1

+信皮儿=xin4,pi2,er5

+俢=xiu1

+俣=yu3

+俤=di4

+俥=che1,ju1

+俦=chou2

+俧=zhi4

+俨=yan3

+俩=liang3,lia3

+俩人=lia3,ren2

+俪=li4

+俫=lai2

+俬=si1

+俭=jian3

+俭不中礼=jian3,bu4,zhong4,li3

+俭朴=jian3,pu3

+修=xiu1

+俯=fu3

+俯首帖耳=fu3,shou3,tie1,er3

+俰=huo4

+俱=ju4

+俲=xiao4

+俳=pai2

+俴=jian4

+俵=biao4

+俶=chu4,ti4

+俷=fei4

+俸=feng4

+俹=ya4

+俺=an3

+俺们=an3,men5

+俻=bei4

+俼=yu4

+俽=xin1

+俾=bi3

+俿=hu3,chi2

+倀=chang1

+倁=zhi1

+倂=bing4

+倃=jiu4

+倄=yao2

+倅=cui4,zu2

+倆=liang3,lia3

+倇=wan3

+倈=lai2

+倉=cang1

+倊=zong3

+個=ge4,ge3

+倌=guan1

+倍=bei4

+倍数=bei4,shu4

+倎=tian3

+倏=shu1

+倐=shu1

+們=men2

+倒=dao3,dao4

+倒不=dao4,bu4

+倒不是=dao4,bu2,shi4

+倒不错=dao4,bu2,cuo4

+倒也=dao4,ye3

+倒产=dao4,chan3

+倒仓=dao3,cang1

+倒伏=dao3,fu2

+倒像=dao4,xiang4

+倒儿爷=dao3,er5,ye2

+倒冠落佩=dao3,guan1,luo4,pei4

+倒出=dao4,chu1

+倒出去=dao4,chu1,qu4

+倒出来=dao4,chu1,lai2

+倒刺=dao4,ci4

+倒剪=dao4,jian3

+倒卖违禁品=dao3,mai4,wei2,jin4,pin3

+倒卵形=dao4,luan3,xing2

+倒卷=dao4,juan3

+倒反=dao4,fan3

+倒叙=dao4,xu4

+倒吊=dao4,diao4

+倒向=dao4,xiang4

+倒嗓=dao3,sang3

+倒嚼=dao3,jiao4

+倒因为果=dao3,yin1,wei2,guo3

+倒圈=dao3,juan4

+倒在地上=dao3,zai4,di4,shang5

+倒垂=dao4,chui2

+倒垃圾=dao4,la1,ji1

+倒头便睡=dao4,tou2,bian4,shui4

+倒好=dao4,hao3

+倒好儿=dao4,hao3,er5

+倒屣相迎=dao4,xi3,xiang1,ying2

+倒带=dao4,dai4

+倒带键=dao4,dai4,jian4

+倒序=dao4,xu4

+倒序词典=dao4,xu4,ci2,dian3

+倒彩=dao4,cai3

+倒影=dao4,ying3

+倒悬=dao4,xuan2

+倒憋气=dao4,bie1,qi4

+倒戈=dao3,ge1

+倒打一瓦=dao4,da3,yi1,wa3

+倒打一耙=dao4,da3,yi1,pa2

+倒找=dao4,zhao3

+倒抽一口冷气=dao4,chou1,yi4,kou3,leng3,qi4

+倒持太阿=dao4,chi2,tai4,e1

+倒持泰阿=dao4,chi2,tai4,e1

+倒挂=dao4,gua4

+倒接=dao4,jie1

+倒插=dao4,cha1

+倒插门=dao4,cha1,men2

+倒收付息=dao4,shou1,fu4,xi1

+倒放=dao4,fang4

+倒数=dao4,shu4

+倒数式=dao4,shu4,shi4

+倒数比=dao4,shu4,bi3

+倒数计时=dao4,shu3,ji4,shi2

+倒映=dao4,ying4

+倒春寒=dao4,chun1,han2

+倒是=dao4,shi4

+倒板=dao3,ban3

+倒果为因=dao4,guo3,wei2,yin1

+倒栽葱=dao4,zai1,cong1

+倒档=dao4,dang4

+倒欠=dao4,qian4

+倒水=dao4,shui3

+倒流=dao4,liu2

+倒灌=dao4,guan4

+倒片=dao4,pian4

+倒片机=dao4,pian4,ji1

+倒牌子=dao3,pai2,zi5

+倒睫=dao4,jie2

+倒空=dao4,kong1

+倒立=dao4,li4

+倒算=dao4,suan4

+倒粪=dao4,fen4

+倒绷孩儿=dao4,beng1,hai2,er2

+倒置=dao4,zhi4

+倒背如流=dao4,bei4,ru2,liu2

+倒背手=dao4,bei4,shou3

+倒脉冲=dao4,mai4,chong1

+倒苦水=dao4,ku3,shui3

+倒茶=dao4,cha2

+倒虹吸管=dao4,hong2,xi1,guan3

+倒血霉=dao3,xue4,mei2

+倒行逆施=dao4,xing2,ni4,shi1

+倒装=dao4,zhuang1

+倒装句=dao4,zhuang1,ju4

+倒装词序=dao4,zhuang1,ci2,xu4

+倒裳索领=dao4,chang2,suo3,ling3

+倒许=dao4,xu3

+倒读数=dao4,du2,shu4

+倒账卷逃=dao3,zhang4,juan3,tao2

+倒贴=dao4,tie1

+倒赔=dao4,pei2

+倒踏门=dao4,ta4,men2

+倒车=dao4,che1

+倒转=dao4,zhuan4

+倒转来说=dao4,zhuan3,lai2,shuo1

+倒轮闸=dao4,lun2,zha2

+倒载干戈=dao4,zai4,gan1,ge1

+倒过儿=dao4,guo4,er2

+倒过去=dao4,guo4,qu4

+倒过来=dao4,guo4,lai2

+倒退=dao4,tui4

+倒退一步=dao4,tui4,yi2,bu4

+倒酒=dao4,jiu3

+倒金字塔=dao4,jin1,zi4,ta3

+倒锁=dao4,suo3

+倒锁上门=dao4,suo3,shang4,men2

+倒风=dao4,feng1

+倒飞=dao4,fei1

+倓=tan2,tan4

+倔=jue4,jue2

+倔头倔脑=jue4,tou2,jue4,nao3

+倔头强脑=jue4,tou2,jiang4,nao3

+倔强=jue2,jiang4

+倕=chui2

+倖=xing4

+倗=peng2

+倘=tang3,chang2

+倘佯=chang2,yang2

+候=hou4

+倚=yi3

+倛=qi1

+倜=ti4

+倝=gan4

+倞=liang4,jing4

+借=jie4

+借尸还阳=jie4,shi1,huan2,yang2

+借尸还魂=jie4,shi1,huan2,hun2

+借调=jie4,diao4

+倠=sui1

+倡=chang4,chang1

+倡条冶叶=chang1,tiao2,ye3,ye4

+倡而不和=chang4,er2,bu4,he4

+倢=jie2

+倣=fang3

+値=zhi2

+倥=kong1,kong3

+倥侗=kong1,tong2

+倦=juan4

+倦鸟知还=juan4,niao3,zhi1,huan2

+倧=zong1

+倨=ju4

+倩=qian4

+倪=ni2

+倫=lun2

+倬=zhuo1

+倭=wo1,wei1

+倮=luo3

+倯=song1

+倰=leng4

+倱=hun4

+倲=dong1

+倳=zi4

+倴=ben4

+倵=wu3

+倶=ju4

+倷=nai3

+倸=cai3

+倹=jian3

+债=zhai4

+债务重组=zhai4,wu4,chong2,zu3

+倻=ye1

+值=zhi2

+值当=zhi2,dang4

+值得=zhi2,de5

+值得一提=zhi2,de2,yi4,ti2

+倽=sha4

+倾=qing1

+倾筐倒庋=qing1,kuang1,dao4,gui3

+倾筐倒箧=qing1,kuang1,dao4,qie4

+倾箱倒箧=qing1,xiang1,dao4,qie4

+倾肠倒肚=qing1,chang2,dao4,du3

+倿=ning4

+偀=ying1

+偁=cheng1,chen4

+偂=qian2

+偃=yan3

+偃旗仆鼓=yan3,qi2,pu2,gu3

+偃武兴文=yan3,wu3,xing1,wen2

+偃革为轩=yan3,ge2,wei2,xuan1

+偄=ruan3

+偅=zhong4,tong2

+偆=chun3

+假=jia3,jia4

+假分数=jia3,fen1,shu4

+假日=jia4,ri4

+假期=jia4,qi1

+假条=jia4,tiao2

+假洋鬼子=jia3,yang2,gui3,zi5

+偈=ji4,jie2

+偈语=ji4,yu3

+偉=wei3

+偊=yu3

+偋=bing3,bing4

+偌=ruo4

+偍=ti2

+偎=wei1

+偎干就湿=wei1,gan1,jiu4,shi1

+偏=pian1

+偏差=pian1,cha1

+偏裨=pian1,pi2

+偐=yan4

+偑=feng1

+偒=tang3,dang4

+偓=wo4

+偔=e4

+偕=xie2

+偖=che3

+偗=sheng3

+偘=kan3

+偙=di4

+做=zuo4

+做好事=zuo4,hao3,shi4

+做衣服=zuo4,yi1,fu5

+偛=cha1

+停=ting2

+停当=ting2,dang4

+停泊=ting2,bo2

+停留长智=ting2,liu2,zhang3,zhi4

+偝=bei4

+偞=xie4

+偟=huang2

+偠=yao3

+偡=zhan4

+偢=chou3,qiao4

+偣=an1

+偤=you2

+健=jian4

+健将=jian4,jiang4

+偦=xu1

+偧=zha1

+偨=ci1

+偩=fu4

+偪=bi1

+偫=zhi4

+偬=zong3

+偭=mian3

+偮=ji2

+偯=yi3

+偰=xie4

+偱=xun2

+偲=cai1,si1

+偳=duan1

+側=ce4,ze4,zhai1

+偵=zhen1

+偶=ou3

+偶一为之=ou3,yi1,wei2,zhi1

+偷=tou1

+偷空=tou1,kong4

+偷鸡不着蚀把米=tou1,ji1,bu4,zhao2,shi2,ba3,mi3

+偸=tou1

+偹=bei4

+偺=zan2,za2,za3

+偻=lv3,lou2

+偼=jie2

+偽=wei3

+偾=fen4

+偿=chang2

+偿还=chang2,huan2

+傀=kui3,gui1

+傁=sou3

+傂=zhi4,si1

+傃=su4

+傄=xia1

+傅=fu4

+傆=yuan4,yuan2

+傇=rong3

+傈=li4

+傉=nu4

+傊=yun4

+傋=jiang3,gou4

+傌=ma4

+傍=bang4

+傍若无人=pang2,ruo4,wu2,ren2

+傎=dian1

+傏=tang2

+傐=hao4

+傑=jie2

+傒=xi1,xi4

+傓=shan1

+傔=qian4,jian1

+傕=que4,jue2

+傖=cang1,chen5

+傗=chu4

+傘=san3

+備=bei4

+傚=xiao4

+傛=rong2

+傜=yao2

+傝=ta4,tan4

+傞=suo1

+傟=yang3

+傠=fa2

+傡=bing4

+傢=jia1

+傣=dai3

+傤=zai4

+傥=tang3

+傦=gu3

+傧=bin1

+傧相=bin1,xiang4

+储=chu3

+储备=chu3,bei4

+储存=chu3,cun2

+储蓄=chu3,xu4

+储蓄银行=chu3,xu4,yin2,hang2

+储藏=chu3,cang2

+傩=nuo2

+傪=can1,can4

+傫=lei3

+催=cui1

+催泪弹=cui1,lei4,dan4

+催泪炸弹=cui1,lei4,zha4,dan4

+傭=yong1

+傮=zao1,cao2

+傯=zong3

+傰=peng2

+傱=song3

+傲=ao4

+傲不可长=ao4,bu4,ke3,zhang3

+傳=chuan2,zhuan4

+傴=yu3

+債=zhai4

+傶=qi1,cou4

+傷=shang1

+傸=chuang3

+傹=jing4

+傺=chi4

+傻=sha3

+傼=han4

+傽=zhang1

+傾=qing1

+傿=yan1,yan4

+僀=di4

+僁=xie4

+僂=lv3,lou2

+僃=bei4

+僄=piao4,biao1

+僅=jin3,jin4

+僆=lian4

+僇=lu4

+僈=man4

+僉=qian1

+僊=xian1

+僋=tan3,tan4

+僌=ying2

+働=dong4

+僎=zhuan4

+像=xiang4

+像煞有介事=xiang4,sha4,you3,jie4,shi4

+僐=shan4

+僑=qiao2

+僒=jiong3

+僓=tui3,tui2

+僔=zun3

+僕=pu2

+僖=xi1

+僗=lao2

+僘=chang3

+僙=guang1

+僚=liao2

+僛=qi1

+僜=cheng1,deng1

+僝=zhan4,zhuan4,chan2

+僞=wei3

+僟=ji1

+僠=bo1

+僡=hui4

+僢=chuan3

+僣=tie3,jian4

+僤=dan4

+僥=jiao3,yao2

+僦=jiu4

+僧=seng1

+僨=fen4

+僩=xian4

+僪=yu4,ju2

+僫=e4,wu4,wu1

+僬=jiao1

+僬侥=jiao1,yao2

+僭=jian4

+僮=tong2,zhuang4

+僮仆=tong2,pu2

+僯=lin3

+僰=bo2

+僱=gu4

+僲=xian1

+僳=su4

+僴=xian4

+僵=jiang1

+僶=min3

+僷=ye4

+僸=jin4

+價=jia4,jie5

+僺=qiao4

+僻=pi4

+僼=feng1

+僽=zhou4

+僾=ai4

+僿=sai4

+儀=yi2

+儁=jun4

+儂=nong2

+儃=chan2,tan3,shan4

+億=yi4

+儅=dang1,dang4

+儆=jing3

+儇=xuan1

+儈=kuai4

+儉=jian3

+儊=chu4

+儋=dan1,dan4

+儋石之储=dan4,shi2,zhi1,chu3

+儌=jiao3

+儍=sha3

+儎=zai4

+儏=can4

+儐=bin1,bin4

+儑=an2,an4

+儒=ru2

+儒将=ru2,jiang4

+儓=tai2

+儔=chou2

+儕=chai2

+儖=lan2

+儗=ni3,yi4

+儗不于伦=ni3,bu4,yu2,lun2

+儘=jin3

+儙=qian4

+儚=meng2

+儛=wu3

+儜=ning2

+儝=qiong2

+儞=ni3

+償=chang2

+儠=lie4

+儡=lei3

+儢=lv3

+儣=kuang3

+儤=bao4

+儥=yu4

+儦=biao1

+儧=zan3

+儨=zhi4

+儩=si4

+優=you1

+儫=hao2

+儬=qing4

+儭=chen4

+儮=li4

+儯=teng2

+儰=wei3

+儱=long3,long2,long4

+儲=chu3

+儳=chan2,chan4

+儴=rang2,xiang1

+儵=shu1

+儶=hui4,xie2

+儷=li4

+儸=luo2

+儹=zan3

+儺=nuo2

+儻=tang3

+儼=yan3

+儽=lei2

+儾=nang4,nang1

+儿=er2

+儿女成行=er2,nv3,cheng2,hang2

+儿媳妇儿=er2,xi2,fu5,er5

+儿子=er2,zi5

+兀=wu4

+兀秃=wu4,tu1

+允=yun3

+允当=yun3,dang4

+兂=zan1

+元=yuan2

+兄=xiong1

+兄死弟及=xiong1,si3,di4,ji2

+兄长=xiong1,zhang3

+充=chong1

+充分=chong1,fen4

+充塞=chong1,se4

+充数=chong1,shu4

+充血=chong1,xue4

+兆=zhao4

+兆头=zhao4,tou5

+兆载永劫=zhao4,zai3,yong3,jie2

+兇=xiong1

+先=xian1

+先下手为强=xian1,xia4,shou3,wei2,qiang2

+先入为主=xian1,ru4,wei2,zhu3

+先睹为快=xian1,du3,wei2,kuai4

+光=guang1

+光晕=guang1,yun4

+光杆=guang1,gan3

+光杆儿=guang1,gan3,er2

+光栅=guang1,shan1

+兊=dui4,rui4,yue4

+克=ke4

+克什米尔=ke4,shi2,mi3,er3

+克分子=ke4,fen1,zi3

+兌=dui4,rui4,yue4

+免=mian3

+免冠=mian3,guan1

+免得=mian3,de5

+免服=wen4,fu2

+免袒=mian3,tan3

+免麻=mian3,ma2

+兎=tu4

+兏=chang2,zhang3

+児=er2

+兑=dui4,rui4,yue4

+兒=er2

+兓=qin1

+兔=tu4

+兔丝燕麦=tu4,si1,yan4,mai4

+兔头麞脑=tu4,tou2,zhang1,nao3

+兔子=tu4,zi5

+兔葵燕麦=tu4,kui2,yan4,mai4

+兔角龟毛=tu4,jiao3,gui1,mao2