Built for machine learning practitioners requiring flexible and robust hyperparameter tuning, ConfOpt delivers superior optimization performance through conformal uncertainty quantification and a wide selection of surrogate models.

Install ConfOpt from PyPI using pip:

```bash
pip install confopt
```

For the latest development version:

```bash
git clone https://github.com/rick12000/confopt.git
cd confopt
pip install -e .
```

The example below shows how to optimize hyperparameters for a RandomForest classifier. You can find more examples in the documentation.

```python
from confopt.tuning import ConformalTuner
from confopt.wrapping import IntRange, FloatRange, CategoricalRange
from sklearn.ensemble import RandomForestClassifier
from sklearn.datasets import load_wine
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score
```

We import the libraries needed for tuning and model evaluation. The `load_wine` function loads the wine dataset, which serves as example data for tuning the RandomForest classifier's hyperparameters. (The dataset is trivial and 100% accuracy is easy to reach, so it is for demonstration purposes only.)

```python
def objective_function(configuration):
    # Load the wine dataset and hold out 30% of it for evaluation.
    X, y = load_wine(return_X_y=True)
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.3, random_state=42, stratify=y
    )

    # Build a RandomForest from the hyperparameters proposed by the tuner.
    model = RandomForestClassifier(
        n_estimators=configuration['n_estimators'],
        max_features=configuration['max_features'],
        criterion=configuration['criterion'],
        random_state=42
    )
    model.fit(X_train, y_train)

    # Score the configuration by its test accuracy.
    predictions = model.predict(X_test)
    return accuracy_score(y_test, predictions)
```

This function defines the objective we want to optimize. It loads the wine dataset, splits it into training and test sets, and trains a RandomForest model using the provided configuration. The function returns the test accuracy, which is the objective value ConfOpt will optimize.
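Because the objective is simply a function that takes a configuration dictionary and returns a number, it can be swapped for a cheap synthetic function when you want to check a tuning setup before paying for real model training. The sketch below is hypothetical (the function name, the peak location and the per-criterion bonuses are invented for illustration) and assumes ConfOpt accepts any callable with this dict-to-float contract.

```python
import math


def synthetic_objective(configuration):
    """Hypothetical stand-in objective with the same dict -> float contract.

    Smooth in the numeric hyperparameters, peaking at n_estimators=150 and
    max_features=0.5, plus a small fixed bonus per criterion.
    """
    n = configuration["n_estimators"]
    f = configuration["max_features"]
    bonus = {"gini": 0.0, "entropy": 0.02, "log_loss": 0.01}[configuration["criterion"]]
    # Product of two Gaussian bumps keeps the score in (0, 0.97], like an accuracy.
    score = math.exp(-((n - 150) / 100) ** 2) * math.exp(-((f - 0.5) / 0.5) ** 2)
    return 0.95 * score + bonus


print(synthetic_objective({"n_estimators": 120, "max_features": 0.3, "criterion": "gini"}))
```

Assuming that contract holds, passing `objective_function=synthetic_objective` to `ConformalTuner` with the same `search_space` lets you compare the best value found against the known maximum of 0.97 (reached at `n_estimators=150`, `max_features=0.5`, `criterion='entropy'`).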

```python
search_space = {
    'n_estimators': IntRange(min_value=50, max_value=200),
    'max_features': FloatRange(min_value=0.1, max_value=1.0),
    'criterion': CategoricalRange(choices=['gini', 'entropy', 'log_loss'])
}
```

Here, we specify the search space for the hyperparameters. In this RandomForest example, it defines the range for the number of estimators, the fraction of features to consider when looking for the best split, and the criterion used to measure the quality of a split.
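Conceptually, each entry in the search space describes a set of values to draw candidates from. The sketch below mirrors the search space above with plain tuples (a hypothetical encoding, not ConfOpt's `IntRange`/`FloatRange`/`CategoricalRange` classes) and draws uniform random configurations, roughly what a random-search phase does. It assumes integer bounds are inclusive, which may differ from ConfOpt's own convention.

```python
import random

# Hypothetical plain-Python encoding of the search space above.
SEARCH_SPACE = {
    "n_estimators": ("int", 50, 200),
    "max_features": ("float", 0.1, 1.0),
    "criterion": ("categorical", ["gini", "entropy", "log_loss"]),
}


def sample_configuration(space, rng):
    """Draw one configuration uniformly at random from `space`."""
    config = {}
    for name, spec in space.items():
        kind = spec[0]
        if kind == "int":
            config[name] = rng.randint(spec[1], spec[2])  # inclusive on both ends
        elif kind == "float":
            config[name] = rng.uniform(spec[1], spec[2])
        elif kind == "categorical":
            config[name] = rng.choice(spec[1])
        else:
            raise ValueError(f"unknown range type: {kind}")
    return config


rng = random.Random(0)  # seeded for reproducibility
for _ in range(3):
    print(sample_configuration(SEARCH_SPACE, rng))
```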

```python
tuner = ConformalTuner(
    objective_function=objective_function,
    search_space=search_space,
    minimize=False  # higher accuracy is better, so maximize
)

tuner.tune(max_searches=50, n_random_searches=10)
```

We initialize the ConformalTuner with the objective function and search space, setting `minimize=False` because we want to maximize accuracy. The `tune` method then runs the hyperparameter search and returns the best configuration it finds for test accuracy.
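The two budget arguments suggest a two-phase search. Assuming `max_searches` is the total number of objective evaluations and `n_random_searches` is how many of them are spent on random configurations before the surrogate model takes over, the overall loop looks roughly like the sketch below. ConfOpt proposes later configurations with conformal surrogate models; here a naive Gaussian perturbation of the best configuration so far stands in for that step, and every name in the block is hypothetical.

```python
import random


def run_search(objective, sample, propose, max_searches, n_random_searches,
               minimize=False, seed=0):
    """Two-phase search loop: a sketch, not ConfOpt's implementation.

    Phase 1 evaluates `n_random_searches` random configurations.
    Phase 2 spends the rest of the `max_searches` budget on configurations
    proposed from the best one found so far.
    """
    rng = random.Random(seed)
    history = []  # (configuration, objective value) pairs, in evaluation order

    def best():
        # Direction matters: maximize e.g. accuracy, minimize e.g. a loss.
        pick = min if minimize else max
        return pick(history, key=lambda item: item[1])

    for i in range(max_searches):
        if i < n_random_searches or not history:
            config = sample(rng)              # random warm-up phase
        else:
            config = propose(best()[0], rng)  # guided phase (naive stand-in)
        history.append((config, objective(config)))
    return best(), history


# Hypothetical 1-D toy problem with its maximum at x = 0.3.
def toy_objective(config):
    return -(config["x"] - 0.3) ** 2


def toy_sample(rng):
    return {"x": rng.uniform(0.0, 1.0)}


def toy_propose(config, rng):
    # Gaussian step around the incumbent, clipped to [0, 1].
    return {"x": min(1.0, max(0.0, config["x"] + rng.gauss(0.0, 0.05)))}


(best_config, best_value), history = run_search(
    toy_objective, toy_sample, toy_propose, max_searches=50, n_random_searches=10
)
print(f"{len(history)} evaluations, best x = {best_config['x']:.3f}")
```

Keeping the full evaluation history is also what makes "best parameters" and "best value" queries cheap after tuning: they are a single pass over the history in the chosen direction.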

```python
best_params = tuner.get_best_params()
best_score = tuner.get_best_value()

print(f"Best accuracy: {best_score:.4f}")
print(f"Best parameters: {best_params}")
```

Finally, we retrieve the best parameters and test accuracy found during the optimization and print them to the console for review.

For detailed examples and explanations, see the documentation.

- Classification Example: RandomForest hyperparameter tuning on a classification task.

- Regression Example: RandomForest hyperparameter tuning on a regression task.

- Architecture Overview: System design and module interactions.

- API Reference: Complete reference for main classes, methods, and parameters.

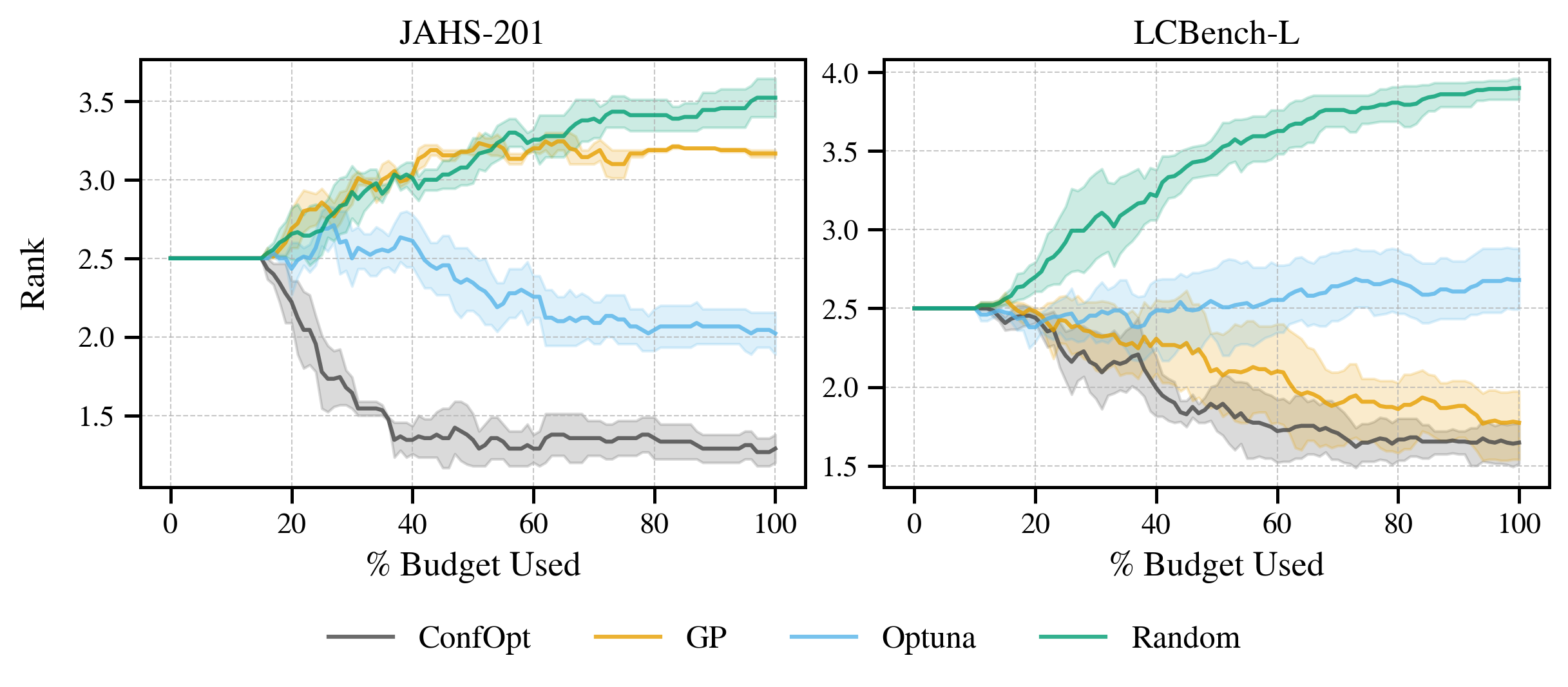

In this benchmark, ConfOpt significantly outperforms plain random search, and it also beats established tools like Optuna and traditional Gaussian Processes!

The above benchmark considers neural architecture search on complex image recognition datasets (JAHS-201) and neural network tuning on tabular classification datasets (LCBench-L).

For a fuller analysis of caveats and benchmarking results, refer to the latest methodological paper.

ConfOpt implements surrogate models and acquisition functions from the following papers:

- Adaptive Conformal Hyperparameter Optimization (arXiv, 2022)

- Optimizing Hyperparameters with Conformal Quantile Regression (PMLR, 2023)

- Enhancing Performance and Calibration in Quantile Hyperparameter Optimization (arXiv, 2025)
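ConfOpt's uncertainty quantification is conformal. As a generic illustration of the idea (not ConfOpt's implementation; the function name and arguments are hypothetical), the calibration step of conformalized quantile regression (CQR) takes a quantile model's predicted lower and upper bounds on a held-out calibration set, measures how far each observed target falls outside its band, and widens or shrinks new intervals by a finite-sample-corrected quantile of those scores. Under exchangeability, the calibrated interval contains a new target with probability at least 1 - alpha.

```python
import math


def cqr_interval(cal_lower, cal_upper, cal_y, test_lower, test_upper, alpha=0.1):
    """Split-conformal calibration of a quantile-regression interval (CQR).

    cal_lower, cal_upper: predicted lower/upper quantiles on a held-out
        calibration set; cal_y: the observed targets for those points.
    test_lower, test_upper: the model's raw interval for one new point.
    Returns the calibrated (lower, upper) interval. Assumes 0 < alpha < 1.
    """
    # Conformity score: distance of each target outside its predicted band
    # (negative when the target lies strictly inside the band).
    scores = sorted(
        max(lo - y, y - hi) for lo, hi, y in zip(cal_lower, cal_upper, cal_y)
    )
    n = len(scores)
    # Finite-sample-corrected rank ceil((n + 1) * (1 - alpha)); the tiny
    # epsilon guards against floating-point error pushing it up by one.
    k = max(1, math.ceil((n + 1) * (1 - alpha) - 1e-9))
    if k > n:
        # Too few calibration points to certify this coverage level.
        return (-math.inf, math.inf)
    q = scores[k - 1]
    # Widen (q > 0) or shrink (q < 0) the raw interval by the same margin.
    return (test_lower - q, test_upper + q)


# Hypothetical calibration data: a model that always predicts the band [0, 1].
cal_lower = [0.0] * 9
cal_upper = [1.0] * 9
cal_y = [0.5, 1.2, -0.3, 0.9, 1.5, 0.1, -0.1, 0.7, 1.1]
print(cqr_interval(cal_lower, cal_upper, cal_y, 0.0, 1.0, alpha=0.2))
```

In a hyperparameter search, intervals like these over candidate configurations can then feed an acquisition rule, for example preferring the candidate with the most optimistic bound; the specific surrogates and acquisition functions ConfOpt uses are described in the papers above.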

If you'd like to contribute, please email [email protected] with a quick summary of the feature you'd like to add, and we can discuss it before you open a PR!

If you want to contribute a fix for a new bug, first raise an issue on GitHub, then email [email protected] referencing the issue. Issues are monitored regularly, so only send an email if you want to contribute a fix.