[SPARK-24549][SQL] Support Decimal type push down to the parquet data sources #21556

|

Test build #91769 has finished for PR 21556 at commit

|

| case decimal: DecimalType if DecimalType.is32BitDecimalType(decimal) => | ||

| (n: String, v: Any) => FilterApi.eq( | ||

| intColumn(n), | ||

| Option(v).map(_.asInstanceOf[java.math.BigDecimal].unscaledValue().intValue() |

REF:

Line 219 in 21a7bfd

| val unscaledLong = row.getDecimal(ordinal, precision, scale).toUnscaledLong |

|

Test build #91881 has finished for PR 21556 at commit

|

|

Jenkins, retest this please. |

|

Test build #91894 has finished for PR 21556 at commit

|

|

Jenkins, retest this please. |

|

Test build #91909 has finished for PR 21556 at commit

|

|

Test build #92413 has finished for PR 21556 at commit

|

|

Jenkins, retest this please. |

|

Can you benchmark code and results (on your env) in |

|

Test build #92414 has finished for PR 21556 at commit

|

| .booleanConf | ||

| .createWithDefault(true) | ||

|

|

||

| val PARQUET_READ_LEGACY_FORMAT = buildConf("spark.sql.parquet.readLegacyFormat") |

This property doesn't mention pushdown, but the description says it is only valid for push-down. Can you make the property name more clear?

| Option(v).map(_.asInstanceOf[JBigDecimal].unscaledValue().longValue() | ||

| .asInstanceOf[java.lang.Long]).orNull) | ||

| case decimal: DecimalType | ||

| if pushDownDecimal && ((DecimalType.is32BitDecimalType(decimal) && readLegacyFormat) |

Please add comments here to explain what differs when readLegacyFormat is true.

| if pushDownDecimal && (DecimalType.is32BitDecimalType(decimal) && !readLegacyFormat) => | ||

| (n: String, v: Any) => FilterApi.eq( | ||

| intColumn(n), | ||

| Option(v).map(_.asInstanceOf[JBigDecimal].unscaledValue().intValue() |

Does this need to validate the scale of the decimal, or is scale adjusted in the analyzer?
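To illustrate why the scale matters for this conversion: the code in the diff keeps only the unscaled digits, so two numerically equal decimals with different scales become different ints. A minimal sketch using only `java.math.BigDecimal`; the object and method names are hypothetical and not part of the PR.

```scala
import java.math.{BigDecimal => JBigDecimal}

object UnscaledDecimalDemo {
  // Same conversion as in the diff: drop the scale and keep the unscaled digits.
  def toUnscaledInt(v: JBigDecimal): Int = v.unscaledValue().intValue()

  // Value as it would be stored in a file column with scale 2.
  val storedAtScale2: Int = toUnscaledInt(new JBigDecimal("12.34"))

  // Numerically equal filter value, but carrying scale 3.
  val filterAtScale3: Int = toUnscaledInt(new JBigDecimal("12.340"))

  // The decimals compare as equal, yet their unscaled ints differ by a factor of 10,
  // so an eq() predicate built from the scale-3 value would not match the stored int.
  val numericallyEqual: Boolean =
    new JBigDecimal("12.34").compareTo(new JBigDecimal("12.340")) == 0
}
```

If the analyzer always normalizes the literal to the column's scale, this mismatch cannot occur; the sketch only shows what would go wrong if it did not.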

| test("filter pushdown - decimal") { | ||

| Seq(true, false).foreach { legacyFormat => | ||

| withSQLConf(SQLConf.PARQUET_WRITE_LEGACY_FORMAT.key -> legacyFormat.toString) { | ||

| Seq(s"_1 decimal(${Decimal.MAX_INT_DIGITS}, 2)", // 32BitDecimalType |

Since this is providing a column name, it would be better to use something more readable than _1.

| } | ||

| } | ||

|

|

||

| test("incompatible parquet file format will throw exeception") { |

If we can detect the case where the data is written with the legacy format, then why do we need a property to read with the legacy format? Why not do the right thing without a property?

I have created a PR: #21696

After that PR, decimal support should look like this: https://github.com/wangyum/spark/blob/refactor-decimal-pushdown/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetFilters.scala#L118-L146

|

@wangyum Thanks for the benchmarks! |

# Conflicts: # sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala # sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetFileFormat.scala # sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetFilters.scala # sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetFilterSuite.scala

| intColumn(n), | ||

| Option(v).map(date => dateToDays(date.asInstanceOf[Date]).asInstanceOf[Integer]).orNull) | ||

|

|

||

| case ParquetSchemaType(DECIMAL, INT32, decimal) if pushDownDecimal => |

DecimalType contains a variable, decimalMetadata, so it seems difficult to define constants like before.

| Option(v).map(_.asInstanceOf[JBigDecimal].unscaledValue().longValue() | ||

| .asInstanceOf[java.lang.Long]).orNull) | ||

| // Legacy DecimalType | ||

| case ParquetSchemaType(DECIMAL, FIXED_LEN_BYTE_ARRAY, decimal) if pushDownDecimal && |

The binary used for the legacy type and for fixed-length storage should be the same, so I don't understand why there are two different conversion methods. Also, because this is using the Parquet schema now, there's no need to base the length of this binary on what older versions of Spark did -- in other words, if the underlying Parquet type is fixed, then just convert the decimal to that size fixed without worrying about legacy types.

I think this should pass in the fixed array's length and convert the BigDecimal value to that length array for all cases. That works no matter what the file contains.
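A minimal sketch of that suggestion, assuming the fixed length comes from the Parquet file schema: take the unscaled value's minimal big-endian two's-complement bytes and sign-extend them to the requested length. The object and method names are hypothetical, and the sketch stops at the byte array rather than wrapping it in a Parquet `Binary`.

```scala
import java.math.{BigDecimal => JBigDecimal}

object FixedLenDecimal {
  // Convert a decimal to a big-endian two's-complement array of exactly numBytes.
  def toFixedLenBytes(v: JBigDecimal, numBytes: Int): Array[Byte] = {
    // BigInteger.toByteArray returns the minimal two's-complement representation.
    val bytes = v.unscaledValue().toByteArray
    require(bytes.length <= numBytes,
      s"unscaled value needs ${bytes.length} bytes, but the column has $numBytes")
    if (bytes.length == numBytes) {
      bytes
    } else {
      val padded = new Array[Byte](numBytes)
      // Sign extension: pad with 0xFF for negative values and 0x00 otherwise.
      val signByte: Byte = if (bytes.head < 0) -1 else 0
      java.util.Arrays.fill(padded, 0, numBytes - bytes.length, signByte)
      System.arraycopy(bytes, 0, padded, numBytes - bytes.length, bytes.length)
      padded
    }
  }
}
```

Because the length is a parameter, the same function would serve both the legacy layout and any other fixed-length layout found in the file.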

| intColumn(n), | ||

| Option(v).map(date => dateToDays(date.asInstanceOf[Date]).asInstanceOf[Integer]).orNull) | ||

|

|

||

| case ParquetSchemaType(DECIMAL, INT32, decimal) if pushDownDecimal => |

Since this uses the file schema, I think it should validate that the file uses the same scale as the value passed in. That's a cheap sanity check to ensure correctness.

It seems invalid values are already filtered out by:

spark/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/DataSourceStrategy.scala

Line 439 in e76b012

| protected[sql] def translateFilter(predicate: Expression): Option[Filter] = { |

That doesn't validate the value against the decimal scale from the file, which is what I'm suggesting. The decimal scale must match exactly and this is a good place to check because this has the file information. If the scale doesn't match, then the schema used to read this file is incorrect, which would cause data corruption.

In my opinion, it is better to add a check if it is cheap instead of debating whether or not some other part of the code covers the case. If this were happening per record then I would opt for a different strategy, but because this is at the file level it is a good idea to add it here.
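A sketch of what such a file-level check could look like, assuming the file's decimal metadata is available when the filter is built. `FileDecimal` and `unscaledIfScaleMatches` are hypothetical names used only for illustration; returning `None` stands for "do not push this filter down".

```scala
import java.math.{BigDecimal => JBigDecimal}

object DecimalScaleCheck {
  // Hypothetical stand-in for the precision and scale recorded in the Parquet file schema.
  final case class FileDecimal(precision: Int, scale: Int)

  // Produce the unscaled int only when the filter value's scale matches the file's scale.
  def unscaledIfScaleMatches(value: Any, file: FileDecimal): Option[Int] = value match {
    case d: JBigDecimal if d.scale() == file.scale => Some(d.unscaledValue().intValue())
    case _ => None // wrong scale or wrong type: skip push-down instead of risking wrong results
  }
}
```

The check runs once per file and filter rather than once per record, which is why its cost is negligible.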

I see. I will do it.

I added a check method to canMakeFilterOn and a test case:

val decimal = new JBigDecimal(10).setScale(scale)
assert(decimal.scale() === scale)
assertResult(Some(lt(intColumn("cdecimal1"), 1000: Integer))) {
  parquetFilters.createFilter(parquetSchema, sources.LessThan("cdecimal1", decimal))
}

val decimal1 = new JBigDecimal(10).setScale(scale + 1)
assert(decimal1.scale() === scale + 1)
assertResult(None) {
  parquetFilters.createFilter(parquetSchema, sources.LessThan("cdecimal1", decimal1))
}

Test build #92619 has finished for PR 21556 at commit

|

| private case class ParquetSchemaType( | ||

| originalType: OriginalType, | ||

| primitiveTypeName: PrimitiveTypeName, | ||

| decimalMetadata: DecimalMetadata) |

Don't need DecimalMetadata.

|

Test build #92641 has finished for PR 21556 at commit

|

|

Test build #92843 has finished for PR 21556 at commit

|

| case _ => false | ||

| } | ||

|

|

||

| // Since SPARK-24716, ParquetFilter accepts parquet file schema to convert to |

Is this issue reference correct? The PR says this is for SPARK-24549.

| (n: String, v: Any) => | ||

| FilterApi.gtEq(intColumn(n), dateToDays(v.asInstanceOf[Date]).asInstanceOf[Integer]) | ||

|

|

||

| case ParquetSchemaType(DECIMAL, INT32, 0, _) if pushDownDecimal => |

Why match 0 instead of _?

In fact, the length is always 0, so I replaced it with _.

| case ParquetStringType => value.isInstanceOf[String] | ||

| case ParquetBinaryType => value.isInstanceOf[Array[Byte]] | ||

| case ParquetDateType => value.isInstanceOf[Date] | ||

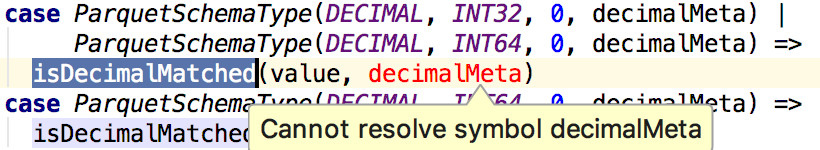

| case ParquetSchemaType(DECIMAL, INT32, 0, decimalMeta) => |

Can the decimal cases be collapsed to a single case on ParquetSchemaType(DECIMAL, _, _, decimalMetadata)?

Have you tried not using | and ignoring the physical type with _?
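A sketch of the collapsed form, using simplified stand-in types rather than the Parquet classes (every name below is hypothetical). A wildcard in the physical-type position lets one case bind the decimal metadata, whereas Scala does not allow variables to be bound inside `|` alternatives.

```scala
object DecimalCaseCollapse {
  sealed trait Physical
  case object INT32 extends Physical
  case object INT64 extends Physical
  case object FIXED_LEN_BYTE_ARRAY extends Physical

  sealed trait Logical
  case object DECIMAL extends Logical
  case object DATE extends Logical

  final case class DecimalMeta(precision: Int, scale: Int)
  final case class SchemaType(
      logical: Logical, physical: Physical, length: Int, meta: Option[DecimalMeta])

  def isDecimalMatched(value: Any, meta: DecimalMeta): Boolean = value match {
    case d: java.math.BigDecimal => d.scale() == meta.scale
    case _ => false
  }

  def valueCanMakeFilterOn(tpe: SchemaType, value: Any): Boolean =
    value == null || (tpe match {
      // One case covers INT32, INT64 and FIXED_LEN_BYTE_ARRAY decimals.
      case SchemaType(DECIMAL, _, _, Some(meta)) => isDecimalMatched(value, meta)
      case _ => false
    })
}
```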

| case ParquetDoubleType => value.isInstanceOf[JDouble] | ||

| case ParquetStringType => value.isInstanceOf[String] | ||

| case ParquetBinaryType => value.isInstanceOf[Array[Byte]] | ||

| case ParquetDateType => value.isInstanceOf[Date] |

Why is there no support for timestamp?

It was not supported originally. Do we need to support it?

Not in this PR that adds Decimal support. We should consider it in the future, though.

| System.arraycopy(bytes, 0, decimalBuffer, numBytes - bytes.length, bytes.length) | ||

| decimalBuffer | ||

| } | ||

| Binary.fromReusedByteArray(fixedLengthBytes, 0, numBytes) |

This byte array is not reused, it is allocated each time this function runs. This should use the fromConstantByteArray variant. That tells Parquet that it isn't necessary to make defensive copies of the bytes.

| Native ORC Vectorized 3981 / 4049 4.0 253.1 1.0X | ||

| Native ORC Vectorized (Pushdown) 702 / 735 22.4 44.6 5.4X | ||

| Parquet Vectorized 4407 / 4852 3.6 280.2 1.0X | ||

| Parquet Vectorized (Pushdown) 1602 / 1634 9.8 101.8 2.8X |

Any thoughts on why this is slower than the other tests with decimal(18, 2) and decimal(38, 2)? This seems very strange to me.

Maybe it is that the data is more dense, so we need to read more values in the row group that contains the one we're looking for?

Because 1024 * 1024 * 15 is out of the decimal(9, 2) range, there are no stats for that column. I will update the benchmark.

I'm not sure I understand. That's less than 2^24, so it should fit in an int. It should also fit in 8 base-ten digits so decimal(9,2) should work. And last, if the values don't fit in an int, I'm not sure how we would be able to store them in the first place, regardless of how stats are handled.

Did you verify that there are no stats for the file produced here? If that's the case, it would make sense with these numbers. I think we just need to look for a different reason why stats are missing.

Here is a test:

// decimal(9, 2) max value is 9999999.99
// 1024 * 1024 * 15 = 15728640
val path = "/tmp/spark/parquet"
spark.range(1024 * 1024 * 15).selectExpr("cast((id) as decimal(9, 2)) as id").orderBy("id").write.mode("overwrite").parquet(path)

The generated parquet metadata:

$ java -jar ./parquet-tools/target/parquet-tools-1.10.1-SNAPSHOT.jar meta /tmp/spark/parquet

file: file:/tmp/spark/parquet/part-00000-26b38556-494a-4b89-923e-69ea73365488-c000.snappy.parquet

creator: parquet-mr version 1.10.0 (build 031a6654009e3b82020012a18434c582bd74c73a)

extra: org.apache.spark.sql.parquet.row.metadata = {"type":"struct","fields":[{"name":"id","type":"decimal(9,2)","nullable":true,"metadata":{}}]}

file schema: spark_schema

--------------------------------------------------------------------------------

id: OPTIONAL INT32 O:DECIMAL R:0 D:1

row group 1: RC:5728640 TS:36 OFFSET:4

--------------------------------------------------------------------------------

id: INT32 SNAPPY DO:0 FPO:4 SZ:38/36/0.95 VC:5728640 ENC:PLAIN,BIT_PACKED,RLE ST:[no stats for this column]

file: file:/tmp/spark/parquet/part-00001-26b38556-494a-4b89-923e-69ea73365488-c000.snappy.parquet

creator: parquet-mr version 1.10.0 (build 031a6654009e3b82020012a18434c582bd74c73a)

extra: org.apache.spark.sql.parquet.row.metadata = {"type":"struct","fields":[{"name":"id","type":"decimal(9,2)","nullable":true,"metadata":{}}]}

file schema: spark_schema

--------------------------------------------------------------------------------

id: OPTIONAL INT32 O:DECIMAL R:0 D:1

row group 1: RC:651016 TS:2604209 OFFSET:4

--------------------------------------------------------------------------------

id: INT32 SNAPPY DO:0 FPO:4 SZ:2604325/2604209/1.00 VC:651016 ENC:PLAIN,BIT_PACKED,RLE ST:[min: 0.00, max: 651015.00, num_nulls: 0]

file: file:/tmp/spark/parquet/part-00002-26b38556-494a-4b89-923e-69ea73365488-c000.snappy.parquet

creator: parquet-mr version 1.10.0 (build 031a6654009e3b82020012a18434c582bd74c73a)

extra: org.apache.spark.sql.parquet.row.metadata = {"type":"struct","fields":[{"name":"id","type":"decimal(9,2)","nullable":true,"metadata":{}}]}

file schema: spark_schema

--------------------------------------------------------------------------------

id: OPTIONAL INT32 O:DECIMAL R:0 D:1

row group 1: RC:3231146 TS:12925219 OFFSET:4

--------------------------------------------------------------------------------

id: INT32 SNAPPY DO:0 FPO:4 SZ:12925864/12925219/1.00 VC:3231146 ENC:PLAIN,BIT_PACKED,RLE ST:[min: 651016.00, max: 3882161.00, num_nulls: 0]

file: file:/tmp/spark/parquet/part-00003-26b38556-494a-4b89-923e-69ea73365488-c000.snappy.parquet

creator: parquet-mr version 1.10.0 (build 031a6654009e3b82020012a18434c582bd74c73a)

extra: org.apache.spark.sql.parquet.row.metadata = {"type":"struct","fields":[{"name":"id","type":"decimal(9,2)","nullable":true,"metadata":{}}]}

file schema: spark_schema

--------------------------------------------------------------------------------

id: OPTIONAL INT32 O:DECIMAL R:0 D:1

row group 1: RC:2887956 TS:11552408 OFFSET:4

--------------------------------------------------------------------------------

id: INT32 SNAPPY DO:0 FPO:4 SZ:11552986/11552408/1.00 VC:2887956 ENC:PLAIN,BIT_PACKED,RLE ST:[min: 3882162.00, max: 6770117.00, num_nulls: 0]

file: file:/tmp/spark/parquet/part-00004-26b38556-494a-4b89-923e-69ea73365488-c000.snappy.parquet

creator: parquet-mr version 1.10.0 (build 031a6654009e3b82020012a18434c582bd74c73a)

extra: org.apache.spark.sql.parquet.row.metadata = {"type":"struct","fields":[{"name":"id","type":"decimal(9,2)","nullable":true,"metadata":{}}]}

file schema: spark_schema

--------------------------------------------------------------------------------

id: OPTIONAL INT32 O:DECIMAL R:0 D:1

row group 1: RC:3229882 TS:12920163 OFFSET:4

--------------------------------------------------------------------------------

id: INT32 SNAPPY DO:0 FPO:4 SZ:12920808/12920163/1.00 VC:3229882 ENC:PLAIN,BIT_PACKED,RLE ST:[min: 6770118.00, max: 9999999.00, num_nulls: 0]

As you can see, file:/tmp/spark/parquet/part-00000-26b38556-494a-4b89-923e-69ea73365488-c000.snappy.parquet has no stats generated for that column.

scala> spark.read.parquet(path).filter("id is null").count

res0: Long = 5728640

Okay, I see. The tenths and hundredths are always 0, which makes the precision-8 numbers actually precision-10. It is still odd that this is causing Parquet to have no stats, but I'm happy with the fix. Thanks for explaining.
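The arithmetic can be checked with plain `java.math.BigDecimal`, independent of Spark: once the two fractional digits are attached, the largest benchmark id needs ten digits of precision, one more than decimal(9, 2) allows. The helper name below is hypothetical.

```scala
import java.math.{BigDecimal => JBigDecimal}

object DecimalRangeCheck {
  // Number of digits needed to hold the integer v once it carries `scale` fractional digits.
  def precisionAtScale(v: Long, scale: Int): Int =
    JBigDecimal.valueOf(v).setScale(scale).precision()

  // Largest id produced by spark.range(1024 * 1024 * 15).
  val largestId: Long = 1024L * 1024 * 15 - 1

  // 15728639.00 has the unscaled value 1572863900, which is ten digits long.
  val neededPrecision: Int = precisionAtScale(largestId, 2)

  // 9999999.00 is the largest whole number that still fits in decimal(9, 2).
  val largestFitting: Int = precisionAtScale(9999999L, 2)
}
```

This matches the metadata above: the ids that overflow become null after the cast, and the row group holding only those nulls is the one reported without stats.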

| Binary.fromReusedByteArray(fixedLengthBytes, 0, numBytes) | ||

| } | ||

|

|

||

| private val makeEq: PartialFunction[ParquetSchemaType, (String, Any) => FilterPredicate] = { |

Since makeEq is called for EqualsNullSafe and valueCanMakeFilterOn allows null values through, I think these could be null, like the String case. I think this should use the Option pattern from String for all values, unless I'm missing some reason why these will never be null.

ParquetBooleanType, ParquetLongType, ParquetFloatType and ParquetDoubleType do not need Option. Here is an example:

scala> import org.apache.parquet.io.api.Binary

import org.apache.parquet.io.api.Binary

scala> Option(null).map(s => Binary.fromString(s.asInstanceOf[String])).orNull

res7: org.apache.parquet.io.api.Binary = null

scala> Binary.fromString(null.asInstanceOf[String])

java.lang.NullPointerException

at org.apache.parquet.io.api.Binary$FromStringBinary.encodeUTF8(Binary.java:224)

at org.apache.parquet.io.api.Binary$FromStringBinary.<init>(Binary.java:214)

at org.apache.parquet.io.api.Binary.fromString(Binary.java:554)

... 52 elided

scala> null.asInstanceOf[java.lang.Long]

res9: Long = null

scala> null.asInstanceOf[java.lang.Boolean]

res10: Boolean = null

scala> Option(null).map(_.asInstanceOf[Number].intValue.asInstanceOf[Integer]).orNull

res11: Integer = null

scala> null.asInstanceOf[Number].intValue.asInstanceOf[Integer]

java.lang.NullPointerException

... 52 elided

Sounds good. Thanks!
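The distinction from the REPL session above, restated as a small self-contained sketch (the object and method names are hypothetical): a plain cast to a boxed type passes null through unchanged, while a conversion that calls a method on the value needs the Option wrapper.

```scala
object NullSafeConversion {
  // A pure cast to a boxed type: null stays null, so no Option is needed.
  def toBoxedLong(v: Any): java.lang.Long = v.asInstanceOf[java.lang.Long]

  // Calls intValue on the value, so a null input throws NullPointerException.
  def toIntegerUnsafe(v: Any): Integer =
    v.asInstanceOf[Number].intValue.asInstanceOf[Integer]

  // The Option pattern: the conversion is skipped for null and null is returned.
  def toIntegerSafe(v: Any): Integer =
    Option(v).map(_.asInstanceOf[Number].intValue.asInstanceOf[Integer]).orNull
}
```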

| makeEq.lift(nameToType(name)).map(_(name, value)) | ||

| case sources.Not(sources.EqualNullSafe(name, value)) if canMakeFilterOn(name) => | ||

| case sources.Not(sources.EqualNullSafe(name, value)) if canMakeFilterOn(name, value) => | ||

| makeNotEq.lift(nameToType(name)).map(_(name, value)) |

Since makeNotEq is also used for EqualNullSafe, I think it should handle null values as well.

I handled null values in valueCanMakeFilterOn:

def valueCanMakeFilterOn(name: String, value: Any): Boolean = {
  value == null || (nameToType(name) match {
    case ParquetBooleanType => value.isInstanceOf[JBoolean]
    case ParquetByteType | ParquetShortType | ParquetIntegerType => value.isInstanceOf[Number]
    case ParquetLongType => value.isInstanceOf[JLong]
    case ParquetFloatType => value.isInstanceOf[JFloat]
    case ParquetDoubleType => value.isInstanceOf[JDouble]
    case ParquetStringType => value.isInstanceOf[String]
    case ParquetBinaryType => value.isInstanceOf[Array[Byte]]
    case ParquetDateType => value.isInstanceOf[Date]
    case ParquetSchemaType(DECIMAL, INT32, _, decimalMeta) =>
      isDecimalMatched(value, decimalMeta)
    case ParquetSchemaType(DECIMAL, INT64, _, decimalMeta) =>
      isDecimalMatched(value, decimalMeta)
    case ParquetSchemaType(DECIMAL, FIXED_LEN_BYTE_ARRAY, _, decimalMeta) =>
      isDecimalMatched(value, decimalMeta)
    case _ => false
  })
}

Maybe I'm missing something, but that returns true for all null values.

|

Test build #92936 has finished for PR 21556 at commit

|

|

Test build #92938 has finished for PR 21556 at commit

|

|

@wangyum, can you explain what was happening with the Also, |

|

@rdblue, so you mean that equality comparison and null-safe equality comparison are pushed down identically, and that they should be distinguished because otherwise there could be a potential problem? If so, yes, I agree. I think we won't actually have a chance to push down equality comparison or null-safe equality comparison with actual

The thing is, I remember checking a few years ago that Parquet's equality comparison API is itself null-safe; this should of course be double-checked. Since this PR doesn't change the existing behaviour here, and this needs more investigation (e.g., checking whether what I remember about Parquet's equality comparison is still true), it is probably okay to leave it as is here and proceed separately. |

| case _ => false | ||

| } | ||

|

|

||

| // Decimal type must make sure that filter value's scale matched the file. |

Shall we leave this comment around the decimal cases below or around isDecimalMatched?

|

|

||

| // Decimal type must make sure that filter value's scale matched the file. | ||

| // If doesn't matched, which would cause data corruption. | ||

| // Other types must make sure that filter value's type matched the file. |

I would say something like: Parquet's type in the given file should match the value's type in the pushed filter in order to push down the filter to Parquet.

|

@HyukjinKwon, even if the values are null, the makeEq function only casts null to Java Integer so the handling is still safe. It just looks odd that And, passing null in an equals predicate is supported by Parquet. |

|

+1, I think this looks ready to go. |

|

Test build #92996 has finished for PR 21556 at commit

|

|

@rdblue, ah, I misunderstood then. thanks for clarifying it. |

|

I misunderstood how it was safe as well. It was Yuming's clarification that helped. |

# Conflicts: # sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala # sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetFileFormat.scala # sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetFilters.scala # sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetFilterSuite.scala

|

Test build #93014 has finished for PR 21556 at commit

|

|

retest this please |

|

Test build #93017 has finished for PR 21556 at commit

|

|

thanks, merging to master! |

What changes were proposed in this pull request?

Support Decimal type push down to the parquet data sources.

The Decimal comparator used is:

BINARY_AS_SIGNED_INTEGER_COMPARATOR.

How was this patch tested?

unit tests and manual tests.

manual tests: