-

Notifications

You must be signed in to change notification settings - Fork 29k

[SPARK-26742][K8S] Update Kubernetes-Client version to 4.1.2 #23814

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

a97ddb9 to

3433f32

Compare

|

Hi, @Jeffwan . Thank you for your first contribution. |

|

ok to test |

|

Kubernetes integration test starting |

|

Kubernetes integration test status failure |

|

Test build #102431 has finished for PR 23814 at commit

|

3433f32 to

d344445

Compare

|

Kubernetes integration test starting |

|

Kubernetes integration test status failure |

|

Test build #102438 has finished for PR 23814 at commit

|

|

I am trying to resolve integration test failure and submit revision later. |

|

@Jeffwan @dongjoon-hyun I am already updating the version here: #23514 |

|

Good to know. I didn't see PR links in original ticket and thought no one working on it. @skonto. Could you help upgrade to v4.1.2? There's several bug fixes important for spark to talk to kubernetes. We want to leverage this latest version. Once that's included, I can close this PR. |

|

@Jeffwan I didnt see the ticket because my PR is a bit older but I needed that new version with the k8s updated model for long so that local storage can work (didnt do the complete upgrade) ;) Anyway I can rebase mine if yours is merged. How do you want me to help? What does not work for you? Do you mean moving these changes to my PR or do you want me try run your PR? I dont mind whatever works for you but its better to resolve the issues independently as there are two different tickets. |

|

@shaneknapp What version of K8S do the integration tests run against? I notice from the compatibility matrix given by the Fabric8 folks that 4.1.1 and above requires K8S 1.9.0 or later. If the integration tests are running against an older K8S version that could explain the test failures. @Jeffwan @skonto A side effect of this change is that this will make the minimum supported K8S version 1.9.0. Personally I think this is fine since 1.9 is already EOL and not receiving updates from the K8S community but we would need to make sure this is updated in the docs appropriately |

|

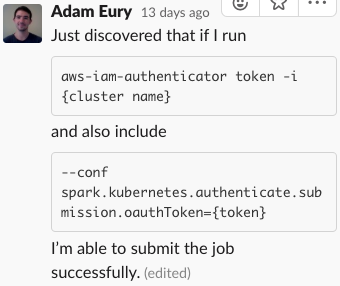

I would also note that a workaround for the AWS EKS issue is that user can first manually log in with AWS and then pass the OAuth token explicitly to (The above from a recent discussion on the #spark-operator K8S slack channel - https://kubernetes.slack.com/archives/CALBDHMTL/p1549469998419600?thread_ts=1549416963.416700&cid=CALBDHMTL) |

|

@rvesse Right, 1.9.0 is already sunset. Should be safe to use it now. The walkround will definitely work but token expired 20mins later. User doesn't like to refresh token frequently. This is not just for EKS, all the authentication using plugin to retrieve token will be impacted. For long term, I think we need to have this patch |

|

@skonto It takes me sometime to reproduce integration test failure in my env, not sure what's broken there. Will update thread later. |

right now we're testing against: minikube v0.25.0 i have a "plan" in the not-so-distant-future to get us updated to: minikube v0.34.1

sgtm |

|

FYI, upgrading to 4.1.2 also fixes SPARK-25590, so that one could be closed when this change goes in. |

|

re: kube version support, our originally proposed policy was to support current version and previous, and so technically supporting 1.12 and 1.13 is now sufficient, although supporting more is obviously even better. |

|

Might make sense to match the k8s community and patch releases - 1.11 is still getting actively patched (for a total of 9 months since it came out). With the 3 month release cycle, we should maybe target 3 versions and deprecated 1.11 support only after 1.14 comes out (https://github.com/kubernetes/sig-release/tree/master/releases/release-1.14#tldr); which is the same schedule that k8s upstream follows. |

|

fyi i can't help out on this for the next ~2 weeks, as we're moving back in

to our lab after a major remodel.

it shouldn't be too much trouble to test against different k8s versions as

we can pass that in as an arg when starting minikube.

it will be a PITA to ensure that all of the pods, etc, are properly

configured to allow the tests to run. for example, i'm currently testing

against minikube v0.33.4 and kubernetes 1.13 and running in to service

account/api access issues (which i will revisit once the dust settles here

in meatspace).

i would hesitate to have regularly scheduled integration tests for this

matrix, however, as we have limited physical resources available (number of

ubuntu build nodes).

if someone would like to open a jira for this, we could move the

conversation there.

…On Thu, Feb 21, 2019 at 11:30 AM Anirudh Ramanathan < ***@***.***> wrote:

Might make sense to match the k8s community and patch releases - 1.11 is

still getting actively patched (for a total of 9 months since it came out).

With the 3 month release cycle, we should maybe target 3 versions and

deprecated 1.11 support only after 1.14 comes out (

https://github.com/kubernetes/sig-release/tree/master/releases/release-1.14#tldr);

which is the same schedule that k8s upstream follows.

—

You are receiving this because you were mentioned.

Reply to this email directly, view it on GitHub

<#23814 (comment)>, or mute

the thread

<https://github.com/notifications/unsubscribe-auth/ABiDrATByFF-7idydGPKgpqDDshDoDC7ks5vPvO4gaJpZM4a_ZuQ>

.

|

|

I think supporting two out of the three mentioned here: https://kubernetes.io/docs/setup/version-skew-policy like 1.12 and 1.13 would be good enough. But that means running tests twice, on the other hand this is master so we should be up to date at least with 1.13. I will create a jira @shaneknapp. |

|

That version skew policy document talks about skew between various components in a k8s system. The release cycle maintains 3 releases at all times - for example, right now, 1.11, 1.12 and 1.13 are all actively maintained. Here is the relevant doc: I am the current 1.11 release czar for k8s and will be cutting a release till 1.14 goes out. I don't mind going back to a policy with 2 releases supported if there's a good rationale for it but we would be discontinuing support for an actively patched and maintained version of K8s. Happy to discuss more on the JIRA. I am in complete agreement that we should make the test infrastructure's job a lot simpler with these types of things - new k8s versions, etc. If there's any ideas on what we can do to help, I'm happy to put in some effort and help with it. |

srowen

left a comment

srowen

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The version bump itself seems fine to me. Does it need to be backported?

|

Should be okay to backport into 2.4 I think. There's probably more EKS users than anyone running k8s 1.8 :) |

|

@foxish EKS is on 1.10 & 1.11 btw. So in general I guess the versioning is more critical when we do a release and trying to cover versions people actually use for good or bad at that point in time (that could be different than master). So in that phase maybe we need more version coverage, but I see that is hard to align everything here. |

|

Merged to master. 2.4 is still on older major versions of the client, so I hesitate to back-port it directly. If it's essential, that probably needs another look and PR. |

|

Just wanted to point out that this was merged without a successful run of the integration tests. Now all integration tests are failing. I see in the discussion that the new version is supposed to be compatible with k8s 1.9, and that seems to be the version running on jenkins (minikube 0.25 says it ships with k8s 1.9). Might be a bug in 4.1.2. Perhaps we should revert this until we figure out what's going on. Anyway, I filed SPARK-26997; I might revert this patch if we don't figure out what's wrong soon. |

|

Oh, I apologize, I now see those K8S tests are separate and failing. I'll revert it if it's not obvious how to fix-forward. |

|

|

Ok, I'll revert the patch. |

|

Sorry about that.. I have not figured it out and notice github doesn't show failures.. I will try to fix it soon. |

|

It does show failures: #23814 (comment) Maybe it should be a little bit louder, though, like the regular PR builder failures. Anyway, people are looking at this as part of SPARK-26997, so if you have more info, please post it there. |

|

@vanzin @srowen there is a compatibility matrix for the fabric8io client and the k8s support. 4.1.2 is not listed there so I am wondering if 1.10 is supported any more. |

i believe this is the case. i'm currently confirming this, and then looking in to upgrading minikube to something more recent. our current version, v0.25.0 is only able to support up to k8s 1.10.0. a minikube upgrade is not trivial. |

|

It's super weird to drop compatibility in .z releases, but hey, I'm not an RM on that project... :-/ |

|

we're definitely incompatible w/4.1.2. i'll need to do a pretty serious upgrade on our end, and hope that minikube is keeping up w/k8s mainline in a reasonable fashion. |

|

TL;DR: i got this to work! we can deploy the infra changes next week, get this merged and move on. |

|

@shaneknapp Thanks! Good to know it's a minikube issue. I was not sure integration failures and get stucked there. Anyone working on these items now? If not, I will have a try to make it work |

|

@Jeffwan we're tracking work here: https://issues.apache.org/jira/browse/SPARK-26742 once we decide which version of k8s to test against, i can get the infra deployed simultaneously with the merging of both this PR and #23993. |

What changes were proposed in this pull request?

Changed the

kubernetes-clientversion to 4.1.2. Latest version fix error with exec credentials (used by aws eks) and this will be used to talk with kubernetes API server. Users can submit spark job to EKS api endpoint now with this patch.How was this patch tested?

unit tests and manual tests.