
[SPARK-27402][SQL][test-hadoop3.2][test-maven] Fix hadoop-3.2 test issue(except the hive-thriftserver module) #24391


Changes from all commits

9c0046a

490a751

24598ee

70b4b0b

d2f83f6

0893172

262be84

e321f61

aaf2952

994b5a7

4372759

8520124

27e5b6e


| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -15,9 +15,17 @@ | |

| # limitations under the License. | ||

| # | ||

|

|

||

| from __future__ import print_function | ||

| from functools import total_ordering | ||

| import itertools | ||

| import re | ||

| import os | ||

|

|

||

| if os.environ.get("AMPLAB_JENKINS"): | ||

| hadoop_version = os.environ.get("AMPLAB_JENKINS_BUILD_PROFILE", "hadoop2.7") | ||

| else: | ||

| hadoop_version = os.environ.get("HADOOP_PROFILE", "hadoop2.7") | ||

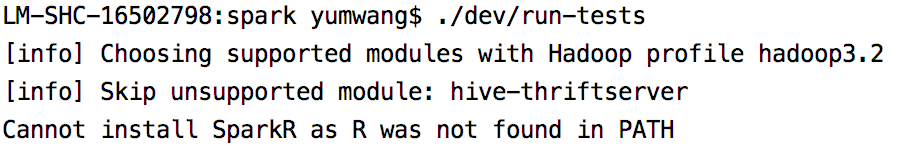

| print("[info] Choosing supported modules with Hadoop profile", hadoop_version) | ||

|

|

||

| all_modules = [] | ||

|

|

||

|

Member

We should have a log message to show which profile we are using; otherwise, it is hard for us to know which profile is activated.

Member

Author

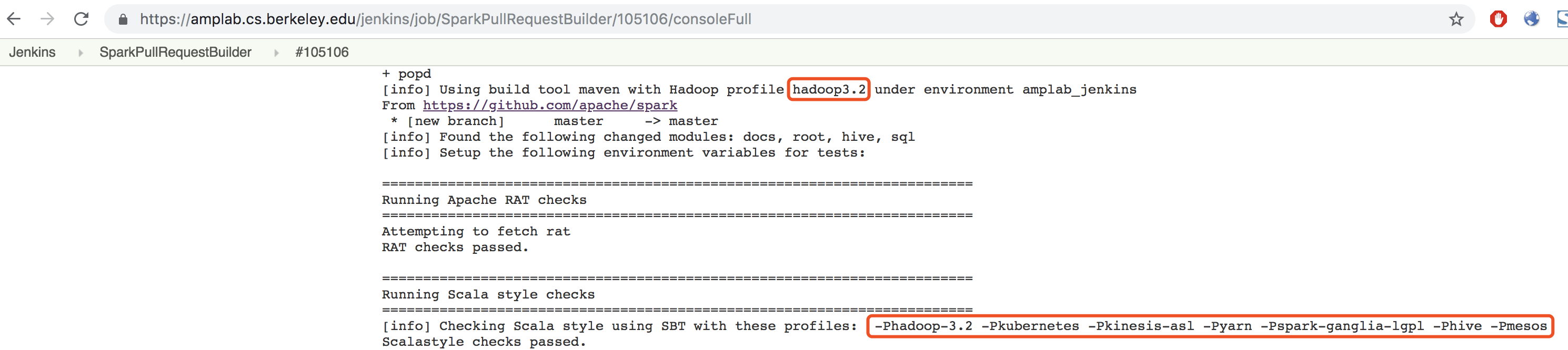

Yes, we already have these log messages:

Member

What I mean is that we should have a log message showing which profile is actually being used. Relying only on the command parameter list does not sound very reliable.

|
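The profile selection discussed in this thread can be sketched as a small function that resolves the profile and reports the value it actually chose. This is a minimal sketch, not the code from this PR: `resolve_hadoop_profile` and its `env` parameter are hypothetical names, while the environment variable names and the `hadoop2.7` default are taken from the diff above.

```python
import os


def resolve_hadoop_profile(env=None):
    """Return the Hadoop profile name, mirroring the lookup in the diff above.

    `env` is a hypothetical parameter added so the lookup can be exercised
    without touching the real process environment.
    """
    env = os.environ if env is None else env
    if env.get("AMPLAB_JENKINS"):
        # On Jenkins the profile comes from the build-profile variable.
        profile = env.get("AMPLAB_JENKINS_BUILD_PROFILE", "hadoop2.7")
    else:
        # Elsewhere it comes from HADOOP_PROFILE.
        profile = env.get("HADOOP_PROFILE", "hadoop2.7")
    # Log the resolved value instead of relying on the command-line flags.
    print("[info] Choosing supported modules with Hadoop profile", profile)
    return profile
```

Wrapping the lookup in a function means the message is printed when the profile is resolved, not as a side effect of importing the module.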

||

|

|

@@ -72,7 +80,11 @@ def __init__(self, name, dependencies, source_file_regexes, build_profile_flags= | |

| self.dependent_modules = set() | ||

| for dep in dependencies: | ||

| dep.dependent_modules.add(self) | ||

| all_modules.append(self) | ||

| # TODO: Skip hive-thriftserver module for hadoop-3.2. remove this once hadoop-3.2 support it | ||

| if name == "hive-thriftserver" and hadoop_version == "hadoop3.2": | ||

|

Member

Author

This is used to skip the hive-thriftserver module for hadoop-3.2. I will revert this change once we can merge.

Member

Maybe leave a TODO here, just to make sure that doesn't get lost. |

||

| print("[info] Skip unsupported module:", name) | ||

| else: | ||

| all_modules.append(self) | ||

|

|

||

| def contains_file(self, filename): | ||

| return any(re.match(p, filename) for p in self.source_file_prefixes) | ||

|

|

||
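The conditional registration in the hunk above can be sketched in isolation. This is a simplified stand-in for the `Module` class, assuming only a name and a module-level registry. The `UNSUPPORTED` mapping and the `hadoop_version` constructor argument are hypothetical and not part of the PR, which hard-codes the single hive-thriftserver and hadoop3.2 pair and reads the profile from the environment.

```python
# Registry of modules that will be built and tested.
all_modules = []

# Hypothetical lookup table: profile name -> module names to skip.
UNSUPPORTED = {"hadoop3.2": {"hive-thriftserver"}}


class Module(object):
    """Simplified stand-in for the Module class touched by the diff."""

    def __init__(self, name, hadoop_version="hadoop2.7"):
        self.name = name
        if name in UNSUPPORTED.get(hadoop_version, set()):
            # Unsupported modules are reported and left out of the registry.
            print("[info] Skip unsupported module:", name)
        else:
            all_modules.append(self)


Module("hive", hadoop_version="hadoop3.2")
Module("hive-thriftserver", hadoop_version="hadoop3.2")  # skipped
Module("hive-thriftserver", hadoop_version="hadoop2.7")  # registered
registered = [m.name for m in all_modules]
```

A lookup table like this keeps the temporary exclusions in one place, which may make them easier to remove once the module supports the new profile.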

| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -28,6 +28,7 @@ import org.apache.commons.io.{FileUtils, IOUtils} | |

| import org.apache.commons.lang3.{JavaVersion, SystemUtils} | ||

| import org.apache.hadoop.conf.Configuration | ||

| import org.apache.hadoop.hive.conf.HiveConf.ConfVars | ||

| import org.apache.hadoop.hive.shims.ShimLoader | ||

|

|

||

| import org.apache.spark.SparkConf | ||

| import org.apache.spark.deploy.SparkSubmitUtils | ||

|

|

@@ -196,6 +197,7 @@ private[hive] class IsolatedClientLoader( | |

| protected def isBarrierClass(name: String): Boolean = | ||

| name.startsWith(classOf[HiveClientImpl].getName) || | ||

| name.startsWith(classOf[Shim].getName) || | ||

| name.startsWith(classOf[ShimLoader].getName) || | ||

|

Member

Author

Added to fix the following failure when running build/sbt "hive/testOnly *.VersionsSuite" -Phadoop-3.2 -Phive:

...

[info] - 0.12: create client *** FAILED *** (36 seconds, 207 milliseconds)

[info] java.lang.reflect.InvocationTargetException:

[info] at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

[info] at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

[info] at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

[info] at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

[info] at org.apache.spark.sql.hive.client.IsolatedClientLoader.createClient(IsolatedClientLoader.scala:295)

[info] at org.apache.spark.sql.hive.client.HiveClientBuilder$.buildClient(HiveClientBuilder.scala:58)

[info] at org.apache.spark.sql.hive.client.VersionsSuite.$anonfun$new$7(VersionsSuite.scala:130)

[info] at org.scalatest.OutcomeOf.outcomeOf(OutcomeOf.scala:85)

[info] at org.scalatest.OutcomeOf.outcomeOf$(OutcomeOf.scala:83)

[info] at org.scalatest.OutcomeOf$.outcomeOf(OutcomeOf.scala:104)

[info] at org.scalatest.Transformer.apply(Transformer.scala:22)

[info] at org.scalatest.Transformer.apply(Transformer.scala:20)

[info] at org.scalatest.FunSuiteLike$$anon$1.apply(FunSuiteLike.scala:186)

[info] at org.apache.spark.SparkFunSuite.withFixture(SparkFunSuite.scala:105)

[info] at org.scalatest.FunSuiteLike.invokeWithFixture$1(FunSuiteLike.scala:184)

[info] at org.scalatest.FunSuiteLike.$anonfun$runTest$1(FunSuiteLike.scala:196)

[info] at org.scalatest.SuperEngine.runTestImpl(Engine.scala:289)

[info] at org.scalatest.FunSuiteLike.runTest(FunSuiteLike.scala:196)

[info] at org.scalatest.FunSuiteLike.runTest$(FunSuiteLike.scala:178)

[info] at org.scalatest.FunSuite.runTest(FunSuite.scala:1560)

[info] at org.scalatest.FunSuiteLike.$anonfun$runTests$1(FunSuiteLike.scala:229)

[info] at org.scalatest.SuperEngine.$anonfun$runTestsInBranch$1(Engine.scala:396)

[info] at scala.collection.immutable.List.foreach(List.scala:392)

[info] at org.scalatest.SuperEngine.traverseSubNodes$1(Engine.scala:384)

[info] at org.scalatest.SuperEngine.runTestsInBranch(Engine.scala:379)

[info] at org.scalatest.SuperEngine.runTestsImpl(Engine.scala:461)

[info] at org.scalatest.FunSuiteLike.runTests(FunSuiteLike.scala:229)

[info] at org.scalatest.FunSuiteLike.runTests$(FunSuiteLike.scala:228)

[info] at org.scalatest.FunSuite.runTests(FunSuite.scala:1560)

[info] at org.scalatest.Suite.run(Suite.scala:1147)

[info] at org.scalatest.Suite.run$(Suite.scala:1129)

[info] at org.scalatest.FunSuite.org$scalatest$FunSuiteLike$$super$run(FunSuite.scala:1560)

[info] at org.scalatest.FunSuiteLike.$anonfun$run$1(FunSuiteLike.scala:233)

[info] at org.scalatest.SuperEngine.runImpl(Engine.scala:521)

[info] at org.scalatest.FunSuiteLike.run(FunSuiteLike.scala:233)

[info] at org.scalatest.FunSuiteLike.run$(FunSuiteLike.scala:232)

[info] at org.apache.spark.SparkFunSuite.org$scalatest$BeforeAndAfterAll$$super$run(SparkFunSuite.scala:54)

[info] at org.scalatest.BeforeAndAfterAll.liftedTree1$1(BeforeAndAfterAll.scala:213)

[info] at org.scalatest.BeforeAndAfterAll.run(BeforeAndAfterAll.scala:210)

[info] at org.scalatest.BeforeAndAfterAll.run$(BeforeAndAfterAll.scala:208)

[info] at org.apache.spark.SparkFunSuite.run(SparkFunSuite.scala:54)

[info] at org.scalatest.tools.Framework.org$scalatest$tools$Framework$$runSuite(Framework.scala:314)

[info] at org.scalatest.tools.Framework$ScalaTestTask.execute(Framework.scala:507)

[info] at sbt.ForkMain$Run$2.call(ForkMain.java:296)

[info] at sbt.ForkMain$Run$2.call(ForkMain.java:286)

[info] at java.util.concurrent.FutureTask.run(FutureTask.java:266)

[info] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

[info] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

[info] at java.lang.Thread.run(Thread.java:748)

[info] Cause: java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.RuntimeException: java.lang.IllegalArgumentException: Unrecognized Hadoop major version number: 3.2.0

[info] at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:286)

[info] at org.apache.spark.sql.hive.client.HiveClientImpl.newState(HiveClientImpl.scala:187)

[info] at org.apache.spark.sql.hive.client.HiveClientImpl.<init>(HiveClientImpl.scala:119) |

||

| barrierPrefixes.exists(name.startsWith) | ||

|

|

||

| protected def classToPath(name: String): String = | ||

|

|

||
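The log above suggests a Hadoop version check that rejects 3.x, and the diff routes `ShimLoader` through the same prefix test as the other barrier classes. The sketch below illustrates both ideas in Python. It is an illustrative simplification, not Hive's or Spark's actual code: `major_version_shim` and its shim names are hypothetical, and only the error message text is taken from the log.

```python
def major_version_shim(hadoop_version):
    """Map a Hadoop version string to a shim name (hypothetical names)."""
    major = int(hadoop_version.split(".")[0])
    known = {1: "shim-for-hadoop-1", 2: "shim-for-hadoop-2"}
    if major not in known:
        # A check written before Hadoop 3 existed fails here.
        raise ValueError(
            "Unrecognized Hadoop major version number: " + hadoop_version)
    return known[major]


def is_barrier_class(name, barrier_prefixes):
    """Return True if the class name starts with any barrier prefix."""
    return any(name.startswith(prefix) for prefix in barrier_prefixes)
```

For example, `is_barrier_class("org.apache.hadoop.hive.shims.ShimLoader", ["org.apache.hadoop.hive.shims.ShimLoader"])` is true, while a class outside the listed prefixes is not treated as a barrier class.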

| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -100,8 +100,14 @@ class StatisticsSuite extends StatisticsCollectionTestBase with TestHiveSingleto | |

| .asInstanceOf[HiveTableRelation] | ||

|

|

||

| val properties = relation.tableMeta.ignoredProperties | ||

| assert(properties("totalSize").toLong <= 0, "external table totalSize must be <= 0") | ||

| assert(properties("rawDataSize").toLong <= 0, "external table rawDataSize must be <= 0") | ||

| if (HiveUtils.isHive23) { | ||

| // Since HIVE-6727, Hive fixes table-level stats for external tables are incorrect. | ||

| assert(properties("totalSize").toLong == 6) | ||

| assert(properties.get("rawDataSize").isEmpty) | ||

| } else { | ||

| assert(properties("totalSize").toLong <= 0, "external table totalSize must be <= 0") | ||

| assert(properties("rawDataSize").toLong <= 0, "external table rawDataSize must be <= 0") | ||

| } | ||

|

|

||

| val sizeInBytes = relation.stats.sizeInBytes | ||

| assert(sizeInBytes === BigInt(file1.length() + file2.length())) | ||

|

|

@@ -865,17 +871,25 @@ class StatisticsSuite extends StatisticsCollectionTestBase with TestHiveSingleto | |

| val totalSize = extractStatsPropValues(describeResult, "totalSize") | ||

| assert(totalSize.isDefined && totalSize.get > 0, "totalSize is lost") | ||

|

|

||

| // ALTER TABLE SET/UNSET TBLPROPERTIES invalidates some Hive specific statistics, but not | ||

| // Spark specific statistics. This is triggered by the Hive alterTable API. | ||

| val numRows = extractStatsPropValues(describeResult, "numRows") | ||

| assert(numRows.isDefined && numRows.get == -1, "numRows is lost") | ||

| val rawDataSize = extractStatsPropValues(describeResult, "rawDataSize") | ||

| assert(rawDataSize.isDefined && rawDataSize.get == -1, "rawDataSize is lost") | ||

|

|

||

| if (analyzedBySpark) { | ||

| if (HiveUtils.isHive23) { | ||

| // Since HIVE-15653(Hive 2.3.0), Hive fixs some ALTER TABLE commands drop table stats. | ||

| assert(numRows.isDefined && numRows.get == 500) | ||

|

Member

Finally, Hive fixed this annoying bug. The statistics are very important for us too. Please add this to the migration guide and document the behavior difference when users build with a different Hadoop profile. |

||

| val rawDataSize = extractStatsPropValues(describeResult, "rawDataSize") | ||

| assert(rawDataSize.isDefined && rawDataSize.get == 5312) | ||

| checkTableStats(tabName, hasSizeInBytes = true, expectedRowCounts = Some(500)) | ||

| } else { | ||

| checkTableStats(tabName, hasSizeInBytes = true, expectedRowCounts = None) | ||

| // ALTER TABLE SET/UNSET TBLPROPERTIES invalidates some Hive specific statistics, but not | ||

| // Spark specific statistics. This is triggered by the Hive alterTable API. | ||

| assert(numRows.isDefined && numRows.get == -1, "numRows is lost") | ||

| val rawDataSize = extractStatsPropValues(describeResult, "rawDataSize") | ||

| assert(rawDataSize.isDefined && rawDataSize.get == -1, "rawDataSize is lost") | ||

|

|

||

| if (analyzedBySpark) { | ||

| checkTableStats(tabName, hasSizeInBytes = true, expectedRowCounts = Some(500)) | ||

| } else { | ||

| checkTableStats(tabName, hasSizeInBytes = true, expectedRowCounts = None) | ||

| } | ||

| } | ||

| } | ||

| } | ||

|

|

||
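The expectations encoded in the second StatisticsSuite hunk can be summarized as a small decision function. This is a reading of the diff, not code from the PR: `expected_alter_table_stats` is a hypothetical helper, and the literal values (500, 5312, -1) are the ones asserted in the test above.

```python
def expected_alter_table_stats(is_hive23, analyzed_by_spark):
    """Return (numRows, rawDataSize, expected row count) after ALTER TABLE.

    The third element is None when no row count is expected.
    """
    if is_hive23:
        # With Hive 2.3 the table stats survive ALTER TABLE (HIVE-15653).
        return 500, 5312, 500
    # Older Hive resets its own stats to -1; only Spark's stats survive.
    return -1, -1, (500 if analyzed_by_spark else None)
```

Note that in the Hive 2.3 branch the expected row count does not depend on whether the table was analyzed by Spark.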


@wangyum, this will show the info every time this module is imported. Why did we do this here?


Okay. It's a temporary fix, so I'm fine with it. I will make a follow-up to handle this: https://github.com/apache/spark/pull/24639/files


Skip the hive-thriftserver module when running the hadoop-3.2 test. I will remove it in another PR: https://github.com/apache/spark/pull/24628/files


Thank you @HyukjinKwon