
[SPARK-35780][SQL] Support DATE/TIMESTAMP literals across the full range #32959



    @@ -253,7 +253,7 @@ object DateTimeUtils {
           // A Long is able to represent a timestamp within [+-]200 thousand years
           val maxDigitsYear = 6
           // For the nanosecond part, more than 6 digits is allowed, but will be truncated.
    -      segment == 6 || (segment == 0 && digits > 0 && digits <= maxDigitsYear) ||
    +      segment == 6 || (segment == 0 && digits >= 4 && digits <= maxDigitsYear) ||
             (segment != 0 && segment != 6 && digits <= 2)
         }
         if (s == null || s.trimAll().numBytes() == 0) {

    @@ -527,7 +527,7 @@ object DateTimeUtils {
         def isValidDigits(segment: Int, digits: Int): Boolean = {
           // An integer is able to represent a date within [+-]5 million years.
           var maxDigitsYear = 7

Contributor

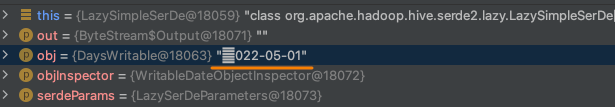

There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. Can I implement a configuration item that configures the range of digits allowed for the year? I found that it was writing to tables in different formats and the results would behave differently. create table t(c1 date) stored as textfile;

insert overwrite table t select cast( '22022-05-01' as date);

select * from t1; -- output nullcreate table t(c1 date) stored as orcfile;

insert overwrite table t select cast( '22022-05-01' as date);

select * from t1; -- output +22022-05-01Because orc/parquet date stores integers, but textfile and sequencefile store text. But if you use hive jdbc, the query will fail, because

Contributor

It's expected that not all the data sources and BI clients support datetime values larger than It looks to me that the Hive table should fail to write

Contributor

BTW, I don't think it's possible to add a Spark config to forbid large datetime values. The literal is just one place; many other datetime operations can also produce large datetime values, and those existed before this PR.

Contributor

Thanks for the explanation, that makes sense. There may be dates that users treated as abnormal in previous Spark versions but that Spark 3.2 handles normally, since they are in fact valid dates.

Contributor

Yea the impact on BI clients was missed, though strictly speaking BI clients are not part of Spark.
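The difference between integer-backed and text-backed storage described in this thread can be illustrated with a small sketch. It uses `java.time.LocalDate` as a stand-in, which is an assumption made for illustration: it is not the Hive or Spark reader code, and the object name `DateStorageSketch` is hypothetical. It only shows that a day count round-trips for a five-digit year, while a text form depends on what the parser accepts.

```scala
import java.time.LocalDate
import scala.util.Try

// Hypothetical illustration, not Spark or Hive code.
object DateStorageSketch {
  val date: LocalDate = LocalDate.of(22022, 5, 1)

  // Integer-style storage: days since 1970-01-01. Reading it back needs no parsing.
  val epochDay: Long = date.toEpochDay
  val roundTripped: LocalDate = LocalDate.ofEpochDay(epochDay)

  // Text-style storage: ISO-8601 output prefixes '+' for years above 9999.
  val text: String = date.toString

  // The strict ISO parser requires that sign when the year has more than 4 digits,
  // so the unsigned literal is rejected while the signed one is accepted.
  val parsedUnsigned: Try[LocalDate] = Try(LocalDate.parse("22022-05-01"))
  val parsedSigned: Try[LocalDate] = Try(LocalDate.parse("+22022-05-01"))
}
```

A reader that is stricter than the writer will therefore lose the value in a text format, whereas the integer form is unaffected by formatting rules.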

    -      (segment == 0 && digits > 0 && digits <= maxDigitsYear) || (segment != 0 && digits <= 2)
    +      (segment == 0 && digits >= 4 && digits <= maxDigitsYear) || (segment != 0 && digits <= 2)
         }
         if (s == null || s.trimAll().numBytes() == 0) {
           return None


Segments other than the year were already allowed to have 0 digits before this PR, so I didn't add zero checks for those segments.

For example, both before and after this PR, the query below is valid:


Good catch, we should fail it. Let's do it in another PR.
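To make the behaviour discussed here concrete, the following is a minimal standalone sketch of the date digit check before and after the change. The object and method names (`DigitRules`, `oldRule`, `newRule`) are hypothetical; only the boolean expressions and the limit of 7 year digits are taken from the diff, and in the PR the check is a local `isValidDigits` function rather than a public API.

```scala
// Hypothetical standalone sketch of the date digit check shown in the diff.
object DigitRules {
  // Year-digit cap for dates, copied from the diff (maxDigitsYear = 7).
  val MaxDigitsYearForDate = 7

  // Before the change: a year segment (segment 0) with 1 to 7 digits is accepted.
  def oldRule(segment: Int, digits: Int): Boolean =
    (segment == 0 && digits > 0 && digits <= MaxDigitsYearForDate) ||
      (segment != 0 && digits <= 2)

  // After the change: the year segment needs 4 to 7 digits.
  // Non-year segments are untouched, so 0 digits still passes for them.
  def newRule(segment: Int, digits: Int): Boolean =
    (segment == 0 && digits >= 4 && digits <= MaxDigitsYearForDate) ||
      (segment != 0 && digits <= 2)
}
```

Under this sketch, `DigitRules.oldRule(0, 2)` is true while `DigitRules.newRule(0, 2)` is false, so a two-digit year is no longer accepted. `DigitRules.newRule(1, 0)` is still true, which is the zero-digit month or day case that the reviewers agree should be rejected in a follow-up PR.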