-

Notifications

You must be signed in to change notification settings - Fork 29k

[SPARK-36039][K8S] Fix executor pod hadoop conf mount #33257

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

Can one of the admins verify this patch? |

|

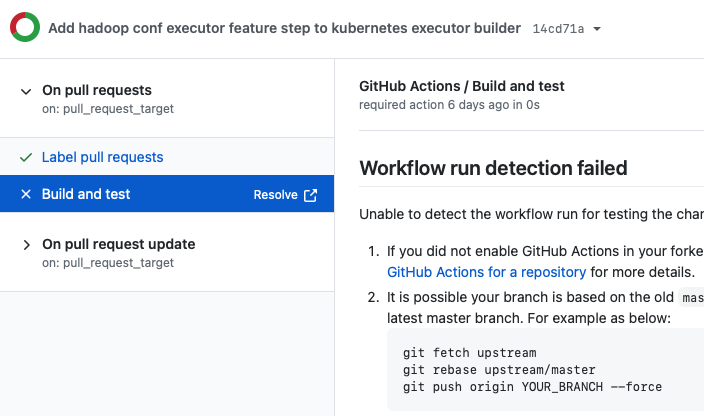

Hi, @cutiechi . Thank you for making a PR. Apache Spark community is using the contributor's GitHub Action resource. Please allow GitHub Action on your fork and make it up-to-date. The following failure is happening on your fork. |

dongjoon-hyun

left a comment

dongjoon-hyun

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Also, please add a test case.

cc @ScrapCodes

Hello, I have already done this, but I don’t know why I can’t pass the detection of this action |

Ok |

|

Hi @cutiechi, Thank you for the PR! There is a way to mount arbitrary hadoop configuration on executors, i.e. by spark conf propagate implemented in [SPARK-30985]. Place all hadoop configuration files in the SPARK_HOME/conf dir and it will be loaded on the executors and driver as well. This happens internally by creating a configMap, one for driver and executor each. At the moment these configMaps are not fully user configurable. IMO, If we make these configMap user configurable, then that solution will apply to all the frameworks not specific to hadoop. [SPARK-32223] In the mean time, we can have this. But we would need a k8s integration test. |

|

Or may be the integration test is not absolutely necessary, just the Unit test is enough. e.g. this test |

|

Please help me review again, thx |

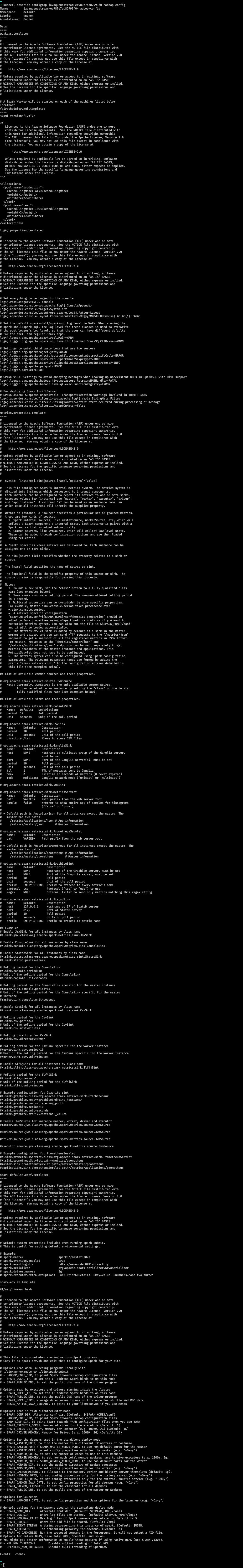

...core/src/main/scala/org/apache/spark/deploy/k8s/features/HadoopConfExecutorFeatureStep.scala

Outdated

Show resolved

Hide resolved

|

Thanks for the fix, I am also facing this issue. FYI, I think the same issue is present for the kerberos config. When specifying the prop |

|

Please help me review again, thx |

|

While testing your PR, I am getting the same error again. Executors are crash looping with: This happens because, configmap does not get created in the executor step. And the current code is designed that way. It will work, if we use a user provided configmap. |

|

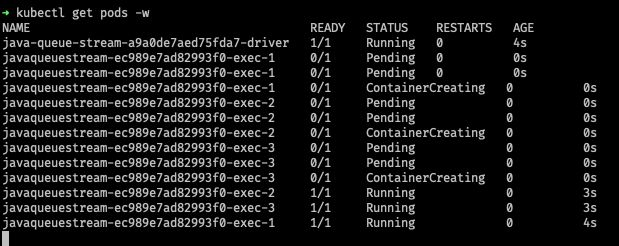

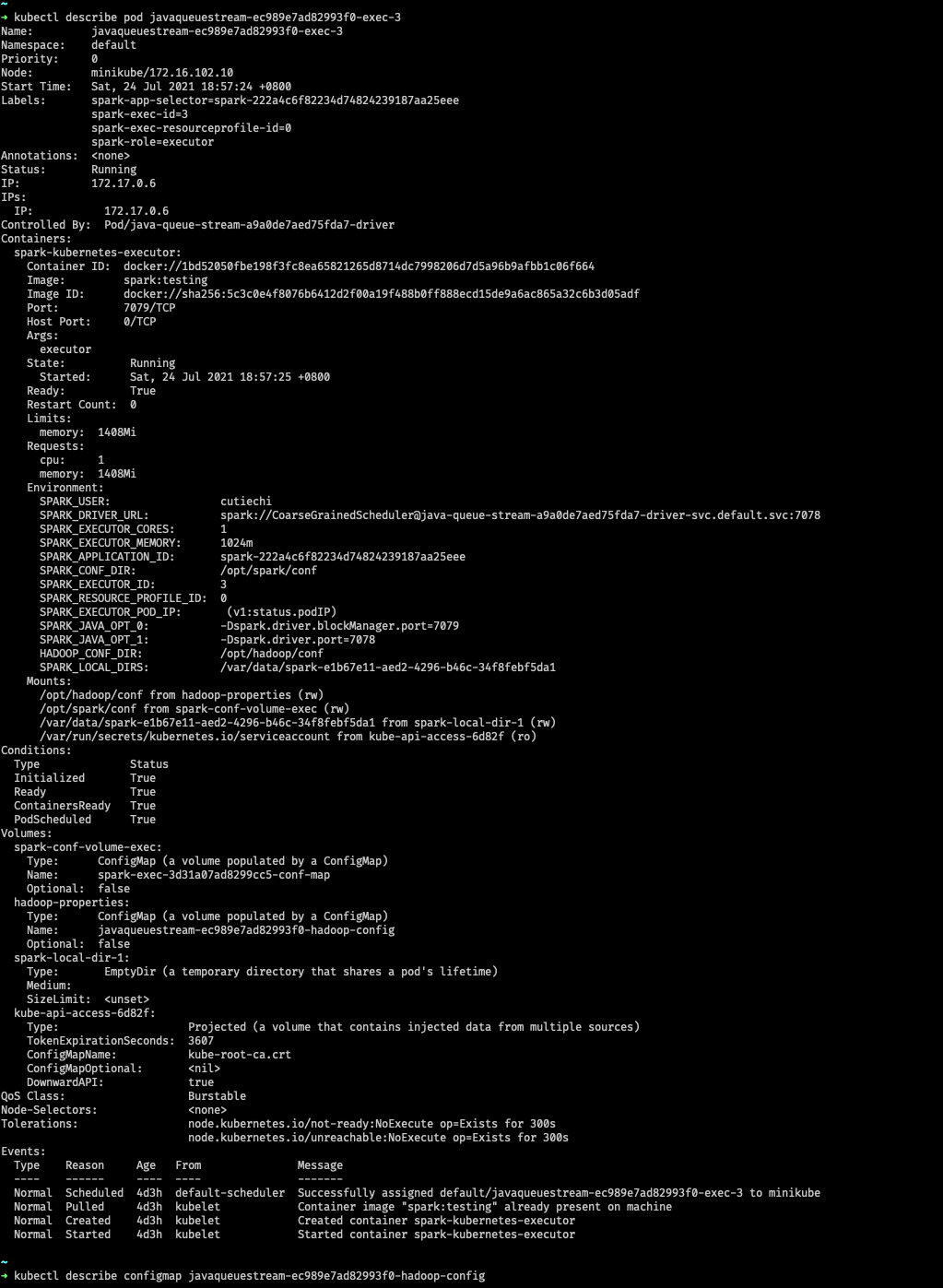

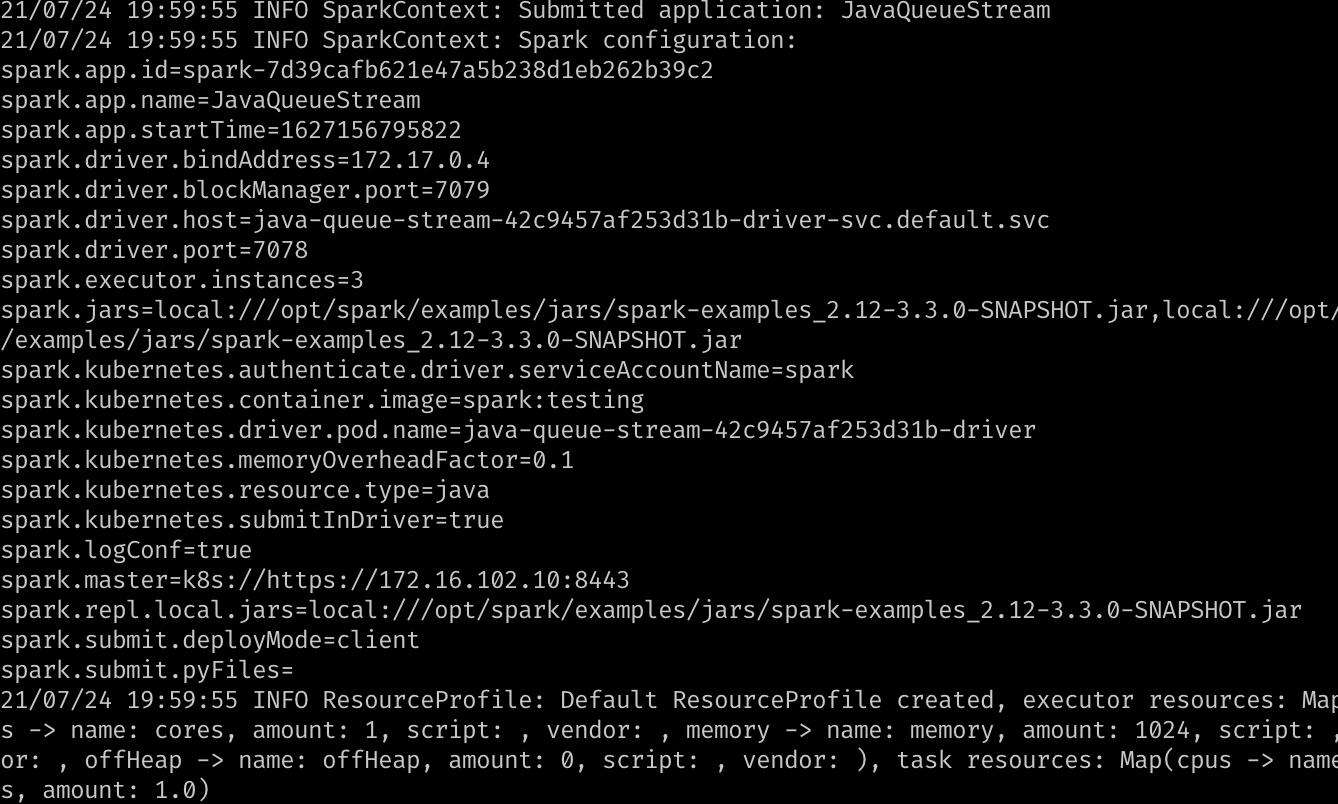

I recompiled the code of the current branch and re-tested according to the following command: But the result is different from yours, all pods are running normally Pods: One of the executor pod: Hadoop properties ConfigMap of this Pod: Did you pull the latest code and recompile and test it? Please help me review again, thx |

|

This video was recorded during my test: CleanShot.2021-07-28.at.22.12.29.mp4 |

|

Interesting! Can you run with EDIT: for this to work.

Another question: What happens when you run in deploy mode as client? |

Ok |

|

@ScrapCodes Log in driver pod, there is no such log in executor pod : |

|

@ScrapCodes Client Mode: CleanShot.2021-07-29.at.21.05.56.mp4 |

|

Please help me review again, thx |

|

@ScrapCodes @dongjoon-hyun |

|

Ping @ScrapCodes / @dongjoon-hyun , I can also take a look if y'all are busy. |

|

Hi @holdenk , with resources(i.e. a k8s cluster on IBM Cloud) I have, I was unable to get this patch to work. So, I could not make progress. Feel free to take a look ! (your and @dongjoon-hyun's approval will be final) |

|

I recompiled and tested it again, I'm sure there is no problem @ScrapCodes @holdenk @dongjoon-hyun |

|

@ScrapCodes Did you rebuild the image? |

|

@ScrapCodes ping |

|

We're closing this PR because it hasn't been updated in a while. This isn't a judgement on the merit of the PR in any way. It's just a way of keeping the PR queue manageable. |

What changes were proposed in this pull request?

Fix executor pod hadoop conf mount.

Why are the changes needed?

Arg --conf spark.kubernetes.hadoop.configMapName for executor pod not working.

Does this PR introduce any user-facing change?

No.

How was this patch tested?

UT.