[SPARK-40486][PS] Implement spearman and kendall in DataFrame.corrwith

#37929

What changes were proposed in this pull request?

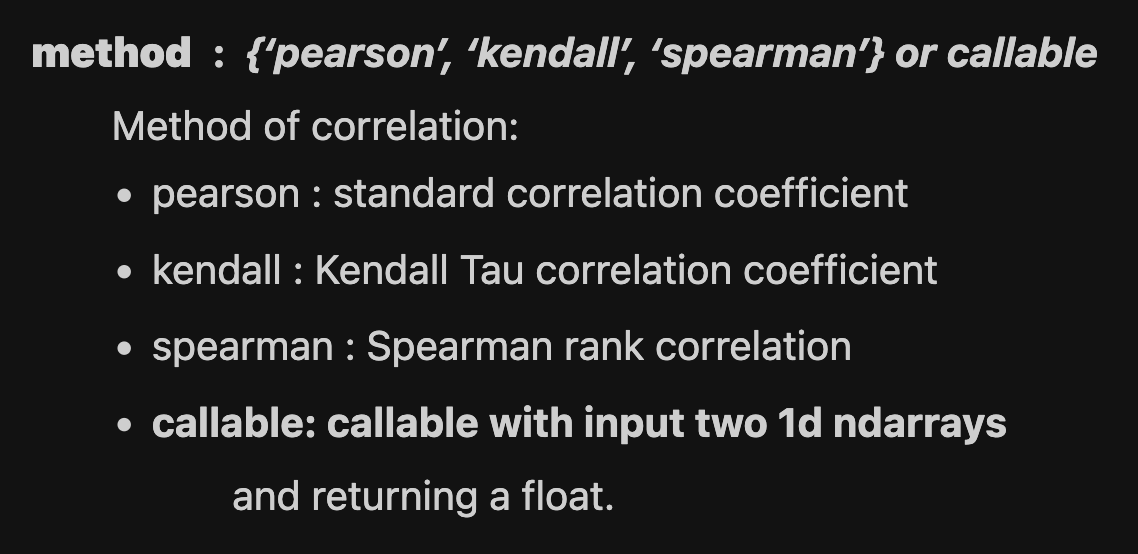

1. Move the `DataFrame.corr` computation into `correlation.py`, so that it can be reused in `DataFrame.corrwith`, `DataFrameGroupBy.corrwith`, `DataFrameGroupBy.corr`, etc.
2. Implement `spearman` and `kendall` in `DataFrame.corrwith`.
3. Support `axis` in `DataFrame.corrwith`.

Why are the changes needed?

For API coverage

Does this PR introduce any user-facing change?

Yes, `DataFrame.corrwith` now supports the `spearman` and `kendall` correlation methods.
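
For readers unfamiliar with the two new methods, here is a minimal, standard-library-only sketch of what `spearman` and `kendall` compute for a pair of columns, plus a toy column-wise `corrwith` over plain dicts. This is not the implementation in this PR (which runs on Spark); all function names below are hypothetical, inputs are assumed to be equal-length numeric lists without missing values, and `kendall` is assumed to mean the tau-b variant.

```python
from itertools import combinations
from math import sqrt


def average_ranks(values):
    """Rank values 1..n, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        avg = (start + end) / 2 + 1  # mean of 1-based positions start+1..end+1
        for k in range(start, end + 1):
            ranks[order[k]] = avg
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length numeric lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / sqrt(vx * vy)


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def kendall(x, y):
    """Kendall tau-b: pair concordance, adjusted for ties in x and in y."""
    concordant = discordant = ties_x = ties_y = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        dx, dy = x1 - x2, y1 - y2
        if dx == 0 and dy == 0:
            continue  # tied in both columns: contributes to neither count
        if dx == 0:
            ties_x += 1
        elif dy == 0:
            ties_y += 1
        elif dx * dy > 0:
            concordant += 1
        else:
            discordant += 1
    pairs = concordant + discordant
    return (concordant - discordant) / sqrt((pairs + ties_x) * (pairs + ties_y))


def corrwith(left, right, method="spearman"):
    """Toy column-wise correlation over the columns shared by two dicts of lists."""
    funcs = {"pearson": pearson, "spearman": spearman, "kendall": kendall}
    corr = funcs[method]
    return {name: corr(left[name], right[name]) for name in left if name in right}


left = {"a": [1, 2, 3, 4], "b": [1, 2, 3, 4]}
right = {"a": [10, 20, 30, 40], "b": [4, 3, 1, 2]}
spearman_result = corrwith(left, right, method="spearman")
kendall_result = corrwith(left, right, method="kendall")
```

The sketch only mirrors the pandas-style `method` argument; it ignores `axis`, index alignment, and missing values, all of which a real `corrwith` has to handle.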

How was this patch tested?

Added unit tests.