[SPARK-40739][SPARK-40738] Fix problems that affect windows shell environments (cygwin/msys2/mingw) #38167


Can one of the admins verify this patch?

Thanks for the contribution. Would you mind checking https://github.com/apache/spark/pull/38167/checks?check_run_id=8783733198 and https://spark.apache.org/contributing.html? For example, let's file a JIRA and link it to the PR title.

…umentation

### What changes were proposed in this pull request?

This PR aims to supplement undocumented ORC configurations in the documentation.

### Why are the changes needed?

Help users to confirm configurations through documentation instead of code.

### Does this PR introduce _any_ user-facing change?

Yes, more configurations appear in the documentation.

### How was this patch tested?

Pass the GA.

Closes #38188 from dcoliversun/SPARK-40726.

Authored-by: Qian.Sun <[email protected]>

Signed-off-by: Sean Owen <[email protected]>

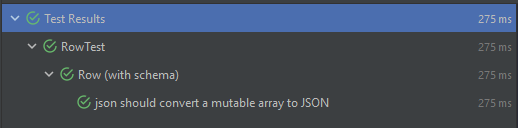

… Row to JSON for Scala 2.13

### What changes were proposed in this pull request?

I encountered an issue using Spark while reading JSON files based on a schema: every time, it throws an exception related to type conversion.

>Note: This issue can be reproduced only with Scala `2.13`; I'm not having this issue with `2.12`.

````
Failed to convert value ArraySeq(1, 2, 3) (class of class scala.collection.mutable.ArraySeq$ofRef}) with the type of ArrayType(StringType,true) to JSON.
java.lang.IllegalArgumentException: Failed to convert value ArraySeq(1, 2, 3) (class of class scala.collection.mutable.ArraySeq$ofRef}) with the type of ArrayType(StringType,true) to JSON.
````

If I add ArraySeq to the matching cases, the test that I added passes successfully. With the current source code, the test fails with an error.

### Why are the changes needed?

If a person is using Scala 2.13, they can't parse an array, which means they need to fall back to 2.12 to keep the project functioning.

### How was this patch tested?

I added a sample unit test for the case, but I can add more if you want to.

Closes #38154 from Amraneze/fix/spark_40705.

Authored-by: Ait Zeouay Amrane <[email protected]>

Signed-off-by: Sean Owen <[email protected]>

Two suggestions are provided.

The first is to enable GitHub Actions:

- Actions permissions
- Workflow permissions
- Allow GitHub Actions to create and approve pull requests

The second suggestion is this:

git fetch upstream

git rebase upstream/master

git push origin YOUR_BRANCH --force

I just did so, although it didn't fix the problem.

UPDATE: I found the screen for enabling workflows, so we should be okay to re-run the failed check now.

I'm looking into it now on the JIRA website.

### What changes were proposed in this pull request?

In the PR, I propose to remove `PartitionAlreadyExistsException` and use `PartitionsAlreadyExistException` instead.

### Why are the changes needed?

1. To simplify user apps. After the changes, users don't need to catch both `PartitionsAlreadyExistException` and `PartitionAlreadyExistsException`.
2. To improve code maintenance, since we no longer need to support two almost identical pieces of code.
3. To avoid errors like the one in PR #38152, which fixed `PartitionsAlreadyExistException` but not `PartitionAlreadyExistsException`.

### Does this PR introduce _any_ user-facing change?

Yes.

### How was this patch tested?

By running the affected test suites:

```
$ build/sbt -Phive-2.3 -Phive-thriftserver "test:testOnly *SupportsPartitionManagementSuite"
$ build/sbt -Phive-2.3 -Phive-thriftserver "test:testOnly *.AlterTableAddPartitionSuite"
```

Closes #38161 from MaxGekk/remove-PartitionAlreadyExistsException.

Authored-by: Max Gekk <[email protected]>

Signed-off-by: Max Gekk <[email protected]>

The following 2 JIRA issues were created. Both are fixed by this PR, and both are linked to it.

…n types

### What changes were proposed in this pull request?

1. Extend the support for Join with different join types. Before this PR, all joins were hardcoded to the `inner` type, so this PR supports other join types.
2. Add join to the connect DSL.
3. Update a few Join proto fields to better reflect the semantics.

### Why are the changes needed?

Extend the support for Join in connect.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

UT

Closes #38157 from amaliujia/SPARK-40534.

Authored-by: Rui Wang <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>

…ndency for Spark Connect

### What changes were proposed in this pull request?

`mypy-protobuf` is only needed when the connect proto is changed and [generate_protos.sh](https://github.com/apache/spark/blob/master/connector/connect/dev/generate_protos.sh) is then used to update the generated proto files on the Python side. We should mark this dependency as optional for people who do not care.

### Why are the changes needed?

`mypy-protobuf` can be an optional dependency for people who do not touch connect proto files.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

N/A

Closes #38195 from amaliujia/dev_requirements.

Authored-by: Rui Wang <[email protected]>

Signed-off-by: Ruifeng Zheng <[email protected]>

…ecutorDecommissionInfo

### What changes were proposed in this pull request?

This change populates `ExecutorDecommission` with messages in `ExecutorDecommissionInfo`.

### Why are the changes needed?

Currently the message in `ExecutorDecommission` is a fixed value ("Executor decommission."), so it is the same for all cases, e.g. spot instance interruptions and auto-scaling down. With this change we can better differentiate those cases.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Added a unit test.

Closes #38030 from bozhang2820/spark-40596.

Authored-by: Bo Zhang <[email protected]>

Signed-off-by: Yi Wu <[email protected]>

…ules

### What changes were proposed in this pull request?

The main change of this PR is to refactor the shade relocation/rename rules, with reference to the result of `mvn dependency:tree -pl connector/connect`, to ensure that maven and sbt produce the assembly jar according to the same rules.

The main parts of the `mvn dependency:tree -pl connector/connect` result are as follows:

```
[INFO] +- com.google.guava:guava:jar:31.0.1-jre:compile
[INFO] |  +- com.google.guava:listenablefuture:jar:9999.0-empty-to-avoid-conflict-with-guava:compile
[INFO] |  +- org.checkerframework:checker-qual:jar:3.12.0:compile
[INFO] |  +- com.google.errorprone:error_prone_annotations:jar:2.7.1:compile
[INFO] |  \- com.google.j2objc:j2objc-annotations:jar:1.3:compile
[INFO] +- com.google.guava:failureaccess:jar:1.0.1:compile
[INFO] +- com.google.protobuf:protobuf-java:jar:3.21.1:compile
[INFO] +- io.grpc:grpc-netty:jar:1.47.0:compile
[INFO] |  +- io.grpc:grpc-core:jar:1.47.0:compile
[INFO] |  |  +- com.google.code.gson:gson:jar:2.9.0:runtime
[INFO] |  |  +- com.google.android:annotations:jar:4.1.1.4:runtime
[INFO] |  |  \- org.codehaus.mojo:animal-sniffer-annotations:jar:1.19:runtime
[INFO] |  +- io.netty:netty-codec-http2:jar:4.1.72.Final:compile
[INFO] |  |  \- io.netty:netty-codec-http:jar:4.1.72.Final:compile
[INFO] |  +- io.netty:netty-handler-proxy:jar:4.1.72.Final:runtime
[INFO] |  |  \- io.netty:netty-codec-socks:jar:4.1.72.Final:runtime
[INFO] |  +- io.perfmark:perfmark-api:jar:0.25.0:runtime
[INFO] |  \- io.netty:netty-transport-native-unix-common:jar:4.1.72.Final:runtime
[INFO] +- io.grpc:grpc-protobuf:jar:1.47.0:compile
[INFO] |  +- io.grpc:grpc-api:jar:1.47.0:compile
[INFO] |  |  \- io.grpc:grpc-context:jar:1.47.0:compile
[INFO] |  +- com.google.api.grpc:proto-google-common-protos:jar:2.0.1:compile
[INFO] |  \- io.grpc:grpc-protobuf-lite:jar:1.47.0:compile
[INFO] +- io.grpc:grpc-services:jar:1.47.0:compile
[INFO] |  \- com.google.protobuf:protobuf-java-util:jar:3.19.2:runtime
[INFO] +- io.grpc:grpc-stub:jar:1.47.0:compile
[INFO] +- org.spark-project.spark:unused:jar:1.0.0:compile
```

The new shade rule excludes the following jar packages:

- scala related jars
- netty related jars
- jars that only sbt included before: pmml-model-*.jar, findbugs jsr305-*.jar, spark unused-1.0.0.jar

So after this PR, maven shade will include the following jars:

```
[INFO] --- maven-shade-plugin:3.2.4:shade (default) spark-connect_2.12 ---
[INFO] Including com.google.guava:guava:jar:31.0.1-jre in the shaded jar.
[INFO] Including com.google.guava:listenablefuture:jar:9999.0-empty-to-avoid-conflict-with-guava in the shaded jar.
[INFO] Including org.checkerframework:checker-qual:jar:3.12.0 in the shaded jar.
[INFO] Including com.google.errorprone:error_prone_annotations:jar:2.7.1 in the shaded jar.
[INFO] Including com.google.j2objc:j2objc-annotations:jar:1.3 in the shaded jar.
[INFO] Including com.google.guava:failureaccess:jar:1.0.1 in the shaded jar.
[INFO] Including com.google.protobuf:protobuf-java:jar:3.21.1 in the shaded jar.
[INFO] Including io.grpc:grpc-netty:jar:1.47.0 in the shaded jar.
[INFO] Including io.grpc:grpc-core:jar:1.47.0 in the shaded jar.
[INFO] Including com.google.code.gson:gson:jar:2.9.0 in the shaded jar.
[INFO] Including com.google.android:annotations:jar:4.1.1.4 in the shaded jar.
[INFO] Including org.codehaus.mojo:animal-sniffer-annotations:jar:1.19 in the shaded jar.
[INFO] Including io.perfmark:perfmark-api:jar:0.25.0 in the shaded jar.
[INFO] Including io.grpc:grpc-protobuf:jar:1.47.0 in the shaded jar.
[INFO] Including io.grpc:grpc-api:jar:1.47.0 in the shaded jar.
[INFO] Including io.grpc:grpc-context:jar:1.47.0 in the shaded jar.
[INFO] Including com.google.api.grpc:proto-google-common-protos:jar:2.0.1 in the shaded jar.
[INFO] Including io.grpc:grpc-protobuf-lite:jar:1.47.0 in the shaded jar.
[INFO] Including io.grpc:grpc-services:jar:1.47.0 in the shaded jar.
[INFO] Including com.google.protobuf:protobuf-java-util:jar:3.19.2 in the shaded jar.
[INFO] Including io.grpc:grpc-stub:jar:1.47.0 in the shaded jar.
```

sbt assembly will include the following jars:

```
[debug] Including from cache: j2objc-annotations-1.3.jar
[debug] Including from cache: guava-31.0.1-jre.jar
[debug] Including from cache: protobuf-java-3.21.1.jar
[debug] Including from cache: grpc-services-1.47.0.jar
[debug] Including from cache: failureaccess-1.0.1.jar
[debug] Including from cache: grpc-stub-1.47.0.jar
[debug] Including from cache: perfmark-api-0.25.0.jar
[debug] Including from cache: annotations-4.1.1.4.jar
[debug] Including from cache: listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar
[debug] Including from cache: animal-sniffer-annotations-1.19.jar
[debug] Including from cache: checker-qual-3.12.0.jar
[debug] Including from cache: grpc-netty-1.47.0.jar
[debug] Including from cache: grpc-api-1.47.0.jar
[debug] Including from cache: grpc-protobuf-lite-1.47.0.jar
[debug] Including from cache: grpc-protobuf-1.47.0.jar
[debug] Including from cache: grpc-context-1.47.0.jar
[debug] Including from cache: grpc-core-1.47.0.jar
[debug] Including from cache: protobuf-java-util-3.19.2.jar
[debug] Including from cache: error_prone_annotations-2.10.0.jar
[debug] Including from cache: gson-2.9.0.jar
[debug] Including from cache: proto-google-common-protos-2.0.1.jar
```

All the dependencies mentioned above are relocated to the `org.sparkproject.connect` package according to the new rules, to avoid conflicts with other third-party dependencies.

### Why are the changes needed?

Refactor the shade relocation/rename rules to ensure that maven and sbt produce the assembly jar according to the same rules.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Pass GitHub Actions

Closes #38162 from LuciferYang/SPARK-40677-FOLLOWUP.

Lead-authored-by: yangjie01 <[email protected]>

Co-authored-by: YangJie <[email protected]>

Signed-off-by: Hyukjin Kwon <[email protected]>

…l inputs

### What changes were proposed in this pull request?

Add a dedicated expression for `product`:

1. for integral inputs, directly use `LongType` to avoid the rounding error;

2. when `ignoreNA` is true, skip the following values once a `zero` is met;

3. when `ignoreNA` is false, skip the following values once a `zero` or `null` is met;
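
The three rules above can be sketched in plain Python. This is only an illustration of the described semantics, not the Spark expression itself: the function name `product_sketch` and its `ignore_na` parameter are hypothetical, `None` stands in for `null`, and the choice that a `null` wins over a `zero` when `ignore_na` is false is an assumption borrowed from pandas' `skipna=False` behavior rather than something the PR text states. Python integers also never overflow, unlike a 64-bit `LongType`.

```python
from typing import Iterable, Optional


def product_sketch(values: Iterable[Optional[int]], ignore_na: bool = True) -> Optional[int]:
    """Multiply integers exactly, following the rules listed in the PR text."""
    result = 1          # integer arithmetic only, so no floating-point rounding
    seen_zero = False
    for value in values:
        if value is None:
            if ignore_na:
                continue        # nulls are simply ignored
            return None         # assumption: a null makes the whole result null
        if value == 0:
            seen_zero = True    # the answer is now 0 unless a null still follows
        elif not seen_zero:
            result *= value     # keep multiplying only while it can still matter
    return 0 if seen_zero else result
```

For instance, `product_sketch([2, None, 4])` ignores the null, while `product_sketch([2, None, 4], ignore_na=False)` returns `None`.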

### Why are the changes needed?

1. The existing computation logic on the PySpark side is too complex; with a dedicated expression, we can simplify the PySpark side and apply it in more cases.

2. The existing computation of `product` is likely to introduce rounding errors for integral inputs, for example `55108 x 55108 x 55108 x 55108` in the following case:

before:

```

In [14]: df = pd.DataFrame({"a": [55108, 55108, 55108, 55108], "b": [55108.0, 55108.0, 55108.0, 55108.0], "c": [1, 2, 3, 4]})

In [15]: df.a.prod()

Out[15]: 9222710978872688896

In [16]: type(df.a.prod())

Out[16]: numpy.int64

In [17]: df.b.prod()

Out[17]: 9.222710978872689e+18

In [18]: type(df.b.prod())

Out[18]: numpy.float64

In [19]:

In [19]: psdf = ps.from_pandas(df)

In [20]: psdf.a.prod()

Out[20]: 9222710978872658944

In [21]: type(psdf.a.prod())

Out[21]: int

In [22]: psdf.b.prod()

Out[22]: 9.222710978872659e+18

In [23]: type(psdf.b.prod())

Out[23]: float

In [24]: df.a.prod() - psdf.a.prod()

Out[24]: 29952

```

after:

```

In [1]: import pyspark.pandas as ps

In [2]: import pandas as pd

In [3]: df = pd.DataFrame({"a": [55108, 55108, 55108, 55108], "b": [55108.0, 55108.0, 55108.0, 55108.0], "c": [1, 2, 3, 4]})

In [4]: df.a.prod()

Out[4]: 9222710978872688896

In [5]: psdf = ps.from_pandas(df)

In [6]: psdf.a.prod()

Out[6]: 9222710978872688896

In [7]: df.a.prod() - psdf.a.prod()

Out[7]: 0

```
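
The kind of discrepancy shown in the `before` session can be reproduced without Spark. The sketch below is an assumption about where such an error can come from, not a description of the old PySpark code: it compares exact integer multiplication with two floating-point routes, plain float multiplication and a product expressed as `exp(sum(log(x)))`, which is one common way to build a product out of sum-style aggregates.

```python
import math

values = [55108, 55108, 55108, 55108]

# Exact integer arithmetic: matches the pandas int64 result quoted above.
exact = math.prod(values)

# Plain float multiplication is inexact once the result exceeds 2**53,
# because a float64 carries only 53 bits of precision.
via_float = math.prod(float(v) for v in values)

# Going through logarithms rounds at every step, so the error can grow further.
via_logs = math.exp(sum(math.log(v) for v in values))

print(exact)                   # 9222710978872688896
print(int(via_float) - exact)  # non-zero: the exact value is not representable
print(int(via_logs) - exact)   # non-zero as well
```

The exact product is divisible by 256 but not by 1024, while float64 values of this magnitude are spaced 1024 apart, so no floating-point route can return it exactly.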

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

existing UT & added UT

Closes #38148 from zhengruifeng/ps_new_prod.

Authored-by: Ruifeng Zheng <[email protected]>

Signed-off-by: Hyukjin Kwon <[email protected]>

(@philwalk rebasing it would retrigger the GitHub Actions jobs)

…one grouping expressions

### What changes were proposed in this pull request?

1. Add `groupby` to the connect DSL and test more than one grouping expression.
2. Pass limited data types through the connect proto for LocalRelation's attributes.
3. Clean up unused `Trait` in the testing code.

### Why are the changes needed?

Enhance connect's support for GROUP BY.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

UT

Closes #38155 from amaliujia/support_more_than_one_grouping_set.

Authored-by: Rui Wang <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>

…classes

### What changes were proposed in this pull request?

In the PR, I propose to use error classes in the case of type check failure in collection expressions.

### Why are the changes needed?

Migration onto error classes unifies Spark SQL error messages.

### Does this PR introduce _any_ user-facing change?

Yes. The PR changes user-facing error messages.

### How was this patch tested?

```
build/sbt "sql/testOnly *SQLQueryTestSuite"
build/sbt "test:testOnly org.apache.spark.SparkThrowableSuite"
build/sbt "test:testOnly *ExpressionTypeCheckingSuite"
build/sbt "test:testOnly *DataFrameFunctionsSuite"
build/sbt "test:testOnly *DataFrameAggregateSuite"
build/sbt "test:testOnly *AnalysisErrorSuite"
build/sbt "test:testOnly *CollectionExpressionsSuite"
build/sbt "test:testOnly *ComplexTypeSuite"
build/sbt "test:testOnly *HigherOrderFunctionsSuite"
build/sbt "test:testOnly *PredicateSuite"
build/sbt "test:testOnly *TypeUtilsSuite"
```

Closes #38197 from lvshaokang/SPARK-40358.

Authored-by: lvshaokang <[email protected]>

Signed-off-by: Max Gekk <[email protected]>

### What changes were proposed in this pull request?

This PR cleans up the logic of `listFunctions`. Currently `listFunctions` gets all external functions and registered functions (built-in, temporary, and persistent functions with a specific database name). It is not necessary to get persistent functions that match a specific database name again since `externalCatalog.listFunctions` already fetched them. We only need to list all built-in and temporary functions from the function registries.

### Why are the changes needed?

Code clean up.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing unit tests.

Closes #38194 from allisonwang-db/spark-40740-list-functions.

Authored-by: allisonwang-db <[email protected]>

Signed-off-by: Hyukjin Kwon <[email protected]>

### What changes were proposed in this pull request?

Code refactor on all File data source options:

- `TextOptions`
- `CSVOptions`
- `JSONOptions`
- `AvroOptions`
- `ParquetOptions`
- `OrcOptions`
- `FileIndex` related options

Change semantics:

- First, we introduce a new trait `DataSourceOptions`, which defines the following functions:
  - `newOption(name)`: Register a new option
  - `newOption(name, alternative)`: Register a new option with alternative
  - `getAllValidOptions`: retrieve all valid options
  - `isValidOption(name)`: validate a given option name
  - `getAlternativeOption(name)`: get alternative option name if any
- Then, for each class above
  - Create/update its companion object to extend from the trait above and register all valid options within it.
  - Update places where name strings are used directly to fetch option values to use those option constants instead.
  - Add a unit test for each file data source's options

### Why are the changes needed?

Currently, for each file data source, all options are scattered through the options class and there is no clear list of all options supported. As more and more options are added, the readability gets worse. Thus, we want to refactor the code so that

- we can easily get a list of supported options for each data source
- we enforce better practice for adding new options going forward.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Closes #38113 from xiaonanyang-db/SPARK-40667.

Authored-by: xiaonanyang-db <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>
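
To make the shape of the `DataSourceOptions` trait described in the commit message above easier to picture, here is a minimal Python sketch of an option registry with the same five operations. The real trait is Scala code inside Spark; the class name `OptionRegistry`, the snake_case method names, and the example option names are hypothetical stand-ins, and details such as making an alternative point back to its primary name are assumptions.

```python
from typing import Dict, Optional, Set


class OptionRegistry:
    """Toy registry mirroring the operations listed for `DataSourceOptions`."""

    def __init__(self) -> None:
        # Maps each registered name to its alternative name, if it has one.
        self._options: Dict[str, Optional[str]] = {}

    def new_option(self, name: str, alternative: Optional[str] = None) -> str:
        """Register `name`, optionally paired with an alternative spelling."""
        self._options[name] = alternative
        if alternative is not None:
            # Assumption: the alternative is itself valid and points back at `name`.
            self._options[alternative] = name
        return name

    def get_all_valid_options(self) -> Set[str]:
        return set(self._options)

    def is_valid_option(self, name: str) -> bool:
        return name in self._options

    def get_alternative_option(self, name: str) -> Optional[str]:
        return self._options.get(name)


# Hypothetical option names, used only for illustration.
registry = OptionRegistry()
ENCODING = registry.new_option("encoding", "charset")
LINE_SEP = registry.new_option("lineSep")
```

Registering every option through one call site is what gives a single, queryable list of supported names; callers then refer to constants such as `ENCODING` instead of repeating string literals.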

### What changes were proposed in this pull request?

Support Column Alias in the Connect DSL (thus in Connect proto).

### Why are the changes needed?

Column alias is part of the DataFrame API; we also need column alias to support APIs such as `withColumn`.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

UT

Closes #38174 from amaliujia/alias.

Authored-by: Rui Wang <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>

…t `min_count`

### What changes were proposed in this pull request?

Make `_reduce_for_stat_function` in `groupby` accept `min_count`.

### Why are the changes needed?

To simplify the implementations.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing UTs

Closes #38201 from zhengruifeng/ps_groupby_mc.

Authored-by: Ruifeng Zheng <[email protected]>

Signed-off-by: Hyukjin Kwon <[email protected]>

…eric type

### What changes were proposed in this pull request?

This PR aims to fix the following Java compilation warnings related to generic types:

```

2022-10-08T01:43:33.6487078Z /home/runner/work/spark/spark/core/src/main/java/org/apache/spark/SparkThrowable.java:54: warning: [rawtypes] found raw type: HashMap

2022-10-08T01:43:33.6487456Z return new HashMap();

2022-10-08T01:43:33.6487682Z ^

2022-10-08T01:43:33.6487957Z missing type arguments for generic class HashMap<K,V>

2022-10-08T01:43:33.6488617Z where K,V are type-variables:

2022-10-08T01:43:33.6488911Z K extends Object declared in class HashMap

2022-10-08T01:43:33.6489211Z V extends Object declared in class HashMap

2022-10-08T01:50:21.5951932Z /home/runner/work/spark/spark/sql/catalyst/src/main/java/org/apache/spark/sql/connector/catalog/SupportsAtomicPartitionManagement.java:55: warning: [rawtypes] found raw type: Map

2022-10-08T01:50:21.5999993Z createPartitions(new InternalRow[]{ident}, new Map[]{properties});

2022-10-08T01:50:21.6000343Z ^

2022-10-08T01:50:21.6000642Z missing type arguments for generic class Map<K,V>

2022-10-08T01:50:21.6001272Z where K,V are type-variables:

2022-10-08T01:50:21.6001569Z K extends Object declared in interface Map

2022-10-08T01:50:21.6002109Z V extends Object declared in interface Map

2022-10-08T01:50:21.6006655Z /home/runner/work/spark/spark/sql/catalyst/src/main/java/org/apache/spark/sql/connector/util/V2ExpressionSQLBuilder.java:216: warning: [rawtypes] found raw type: Literal

2022-10-08T01:50:21.6007121Z protected String visitLiteral(Literal literal) {

2022-10-08T01:50:21.6007395Z ^

2022-10-08T01:50:21.6007673Z missing type arguments for generic class Literal<T>

2022-10-08T01:50:21.6008032Z where T is a type-variable:

2022-10-08T01:50:21.6008324Z T extends Object declared in interface Literal

2022-10-08T01:50:21.6008785Z /home/runner/work/spark/spark/sql/catalyst/src/main/java/org/apache/spark/sql/util/NumericHistogram.java:56: warning: [rawtypes] found raw type: Comparable

2022-10-08T01:50:21.6009223Z public static class Coord implements Comparable {

2022-10-08T01:50:21.6009503Z ^

2022-10-08T01:50:21.6009791Z missing type arguments for generic class Comparable<T>

2022-10-08T01:50:21.6010137Z where T is a type-variable:

2022-10-08T01:50:21.6010433Z T extends Object declared in interface Comparable

2022-10-08T01:50:21.6010976Z /home/runner/work/spark/spark/sql/catalyst/src/main/java/org/apache/spark/sql/util/NumericHistogram.java:191: warning: [unchecked] unchecked method invocation: method sort in class Collections is applied to given types

2022-10-08T01:50:21.6011474Z Collections.sort(tmp_bins);

2022-10-08T01:50:21.6011714Z ^

2022-10-08T01:50:21.6012050Z required: List<T>

2022-10-08T01:50:21.6012296Z found: ArrayList<Coord>

2022-10-08T01:50:21.6012604Z where T is a type-variable:

2022-10-08T01:50:21.6012926Z T extends Comparable<? super T> declared in method <T>sort(List<T>)

2022-10-08T02:13:38.0769617Z /home/runner/work/spark/spark/sql/hive-thriftserver/src/main/java/org/apache/hive/service/cli/operation/OperationManager.java:85: warning: [rawtypes] found raw type: AbstractWriterAppender

2022-10-08T02:13:38.0770287Z AbstractWriterAppender ap = new LogDivertAppender(this, OperationLog.getLoggingLevel(loggingMode));

2022-10-08T02:13:38.0770645Z ^

2022-10-08T02:13:38.0770947Z missing type arguments for generic class AbstractWriterAppender<M>

2022-10-08T02:13:38.0771330Z where M is a type-variable:

2022-10-08T02:13:38.0771665Z M extends WriterManager declared in class AbstractWriterAppender

2022-10-08T02:13:38.0774487Z /home/runner/work/spark/spark/sql/hive-thriftserver/src/main/java/org/apache/hive/service/cli/operation/LogDivertAppender.java:268: warning: [rawtypes] found raw type: Layout

2022-10-08T02:13:38.0774940Z Layout l = ap.getLayout();

2022-10-08T02:13:38.0775173Z ^

2022-10-08T02:13:38.0775441Z missing type arguments for generic class Layout<T>

2022-10-08T02:13:38.0775849Z where T is a type-variable:

2022-10-08T02:13:38.0776359Z T extends Serializable declared in interface Layout

2022-10-08T02:19:55.0035795Z [WARNING] /home/runner/work/spark/spark/connector/avro/src/main/java/org/apache/spark/sql/avro/SparkAvroKeyOutputFormat.java:56:17: [rawtypes] found raw type: SparkAvroKeyRecordWriter

2022-10-08T02:19:55.0037287Z [WARNING] /home/runner/work/spark/spark/connector/avro/src/main/java/org/apache/spark/sql/avro/SparkAvroKeyOutputFormat.java:56:13: [unchecked] unchecked call to SparkAvroKeyRecordWriter(Schema,GenericData,CodecFactory,OutputStream,int,Map<String,String>) as a member of the raw type SparkAvroKeyRecordWriter

2022-10-08T02:19:55.0038442Z [WARNING] /home/runner/work/spark/spark/connector/avro/src/main/java/org/apache/spark/sql/avro/SparkAvroKeyOutputFormat.java:75:31: [rawtypes] found raw type: DataFileWriter

2022-10-08T02:19:55.0039370Z [WARNING] /home/runner/work/spark/spark/connector/avro/src/main/java/org/apache/spark/sql/avro/SparkAvroKeyOutputFormat.java:75:27: [unchecked] unchecked call to DataFileWriter(DatumWriter<D>) as a member of the raw type DataFileWriter

```

### Why are the changes needed?

Fix Java compilation warnings.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Pass GitHub Actions.

Closes #38198 from LuciferYang/fix-java-warn.

Lead-authored-by: yangjie01 <[email protected]>

Co-authored-by: YangJie <[email protected]>

Signed-off-by: Hyukjin Kwon <[email protected]>

…pts to make code more portable

### What changes were proposed in this pull request?

Consistently invoke bash with `/usr/bin/env bash` in scripts to make code more portable.

### Why are the changes needed?

Some bash scripts still use `#!/bin/bash`.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

No tests needed.

Closes #38191 from huangxiaopingRD/script.

Authored-by: huangxiaoping <[email protected]>

Signed-off-by: Sean Owen <[email protected]>

…CY_ERROR_TEMP_2076-2100

### What changes were proposed in this pull request?

This PR proposes to migrate 25 execution errors onto temporary error classes named `_LEGACY_ERROR_TEMP_2076` to `_LEGACY_ERROR_TEMP_2100`.

The `_LEGACY_ERROR_TEMP_` prefix indicates dev-facing error messages that won't be exposed to end users.

### Why are the changes needed?

To speed up the error class migration.

The migration onto temporary error classes allows us to analyze the errors, so we can detect the most popular error classes.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

```
$ build/sbt "sql/testOnly org.apache.spark.sql.SQLQueryTestSuite"
$ build/sbt "test:testOnly *SQLQuerySuite"
```

Closes #38122 from itholic/SPARK-40540-2076-2100.

Lead-authored-by: itholic <[email protected]>

Co-authored-by: Haejoon Lee <[email protected]>

Signed-off-by: Max Gekk <[email protected]>

I did the following, hope it was correct:

git fetch upstream

git rebase upstream/master

git pull

git commit -m 'rebase to trigger build'

git push

I think this is messed up now; I'm not sure how, as your approach seems OK (though you would have had to force push).

I will delete the fork and recreate the changes; it seems the simplest fix to me.

This PR is superseded by #38228

This fixes two problems that affect development in a Windows shell environment, such as `cygwin` or `msys2`.

Running `./build/sbt packageBin` from a Windows cygwin `bash` session fails.

This occurs if `WSL` is installed, because `project\SparkBuild.scala` creates a `bash` process, but `WSL bash` is called, even though `cygwin bash` appears earlier in the `PATH`. In addition, file path arguments to `bash` contain backslashes. The fix is to ensure that the correct `bash` is called, and that arguments passed to `bash` use forward slashes rather than backslashes.

The other problem fixed by the PR is that the `bash` scripts (`spark-shell`, `spark-submit`, etc.) cannot be used in Windows `SHELL` environments. The bash version of `spark-class` fails in a Windows shell environment because `launcher/src/main/java/org/apache/spark/launcher/Main.java` does not follow the convention expected by `spark-class`, and also appends CR to line endings. The resulting error message is not helpful.

There are two parts to this fix:

- … `Main.java` to treat a `SHELL` session on Windows as a `bash` session
- … `Main.java`

### Does this PR introduce _any_ user-facing change?

These changes should NOT affect anyone who is not trying to build or run bash scripts from a Windows SHELL environment.

It might make sense to actively unset the `SHELL` variable inside of `spark-class.cmd`, to avoid this corner case.

### How was this patch tested?

Manual tests were performed to verify both changes.
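
Both fixes described in this PR come down to small pieces of path and string handling, which can be sketched in a few lines of Python. The sketch is illustrative only and is not the code in `SparkBuild.scala` or `Main.java`: the helper names `to_forward_slashes`, `first_on_path`, and `strip_cr` are hypothetical, the example path is made up, and resolving `bash` by walking the `PATH` entries in order is an assumed way of avoiding the operating system's own lookup rules.

```python
import os
from typing import List, Optional


def to_forward_slashes(path: str) -> str:
    """Rewrite a Windows-style path so bash does not see backslashes as escapes."""
    return path.replace("\\", "/")


def first_on_path(names: List[str], path_dirs: List[str]) -> Optional[str]:
    """Return the first file named one of `names`, searching `path_dirs` in order."""
    for directory in path_dirs:
        for name in names:
            candidate = os.path.join(directory, name)
            if os.path.isfile(candidate):
                return candidate
    return None


def strip_cr(line: str) -> str:
    """Drop a trailing carriage return left over from a CRLF line ending."""
    return line[:-1] if line.endswith("\r") else line


print(to_forward_slashes(r"C:\work\spark\build"))  # C:/work/spark/build
print(repr(strip_cr("-cp\r")))                     # '-cp'
```

A caller could pass `os.environ["PATH"].split(os.pathsep)` as `path_dirs` and `["bash.exe", "bash"]` as `names`, then launch the returned absolute path instead of the bare name `bash`.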