-

Notifications

You must be signed in to change notification settings - Fork 85

fix fluence for mua -> 0 #164

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

thanks, I am supportive of this patch, but the implementation is not not exactly robust. see my comment to the code. |

src/mcx_core.cu

Outdated

| weight = w0 - p.w; | ||

| } else if (gcfg->outputtype == otFluence || gcfg->outputtype == otFlux) { | ||

| weight = (prop.mua == 0.f) ? 0.f : ((w0 - p.w) / (prop.mua)); | ||

| weight = (prop.mua/prop.mus < 0.001) ? (w0 * len * (1 - prop.mua * len / 2)) : ((w0 - p.w) / (prop.mua)); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

currently, mcx simulation kernel could accept domains with either mua or mus to be exactly 0. with your new formula

weight = (prop.mua/prop.mus < 0.001) ? (w0 * len * (1 - prop.mua * len / 2)) : ((w0 - p.w) / (prop.mua));

I see it can raise an exception if mus is 0, or both mua/mus is exactly 0.

please consider a formula that can also accommodate the case when mus=0.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

several minor comments:

- for numerical constants, please add

fat the end so that it won't be treated as "double constant" which will trigger double-precision hardware/computation, which is slow on consumer GPUs. - same for integer constants, convert those to 1.f or 2.f could avoid unnecessary type conversions

- avoid using division "/" in a CUDA code. it is one of the most expensive operators in the GPU kernel, even more expensive than

sin/cos. try to convert to multiplication whenever possible, for example, instead of/2, change it to*0.5f

|

Thank you for your quick and tutorial reply. I've just started learning to code and your suggestions were very useful. I came up with a slightly different solution that hopefully solves some of the previous shortcomings. Rather using a condition based on I still cannot explain the origin of this small residual gap for vanishing absorption (which however is only present in the scattering case). Do you have any suggestion about what could be causing it? |

sorry for the delay. I am trying to figure out the math of your patch. my understanding is that your are trying to calculate the limit why you had if you replace this term by |

|

No, the small residual does not disappear when using either fewer or more terms in the expansion. As I mentioned when I first created the pull request, what is particularly weird to me is that if we use just |

|

@epini, would you be able to share your test script that I can reproduce the "small residual"? I want to take a deeper look. |

|

Sure, here is the code. Of course the results depend on which modification have been implemented before compiling the executable as previously specified. %% Validation of new fluence rate method proposed by F. Martelli

% Biomedical Optics Express Vol. 14, Issue 1, pp. 148-162 (2023)

% https://doi.org/10.1364/BOE.477339

clear

close all

%% material input settings

N=100; % number of layers

N_mua=100; % number of mua values

data=zeros(2,N,N_mua); % initialize matrix for all simulations data

cfg.unitinmm=0.1; % cubic voxel size in mm

sf=cfg.unitinmm; % scaling factor

L=N*sf; % sample thickness in mm

sz=2*sf; % sample dimensions along xy plane

g=0;

mus=0.1; % mm^-1

n=1.4;

v=3e11/n; % speed of light inside the medium (mm/s)

vol_matrix=ones(uint8(sz/sf),uint8(sz/sf),N);

for j=1:N

vol_matrix(:,:,j)=j; % define a medium per layer

end

cfg.vol=vol_matrix;

index = 1;

for mua=logspace(-15,6,N_mua) % set values for mua

cfg.prop=[0 0 1 1;

repmat([mua mus g n], [N,1])];

cfg.bc='cc_cc_000001'; % cyclic boundary condition to mimic horizontally infinite slabs

% source input settings

cfg.nphoton=1e6;

cfg.srcpos=[sz/(2*sf)+1 sz/(2*sf)+1 0];

cfg.srcdir=[0 0 1];

cfg.srctype='pencil';

% time intervals steps

cfg.tstart=0; % s

cfg.tend=1e-7; % s

cfg.tstep=cfg.tend; % s

% other settings

cfg.gpuid=1;

cfg.autopilot=1;

cfg.issaveexit=1;

cfg.debuglevel='p';

% output settings

cfg.issaveref=0;

cfg.issave2pt=1; % if = 0 disables volumetric data output to speed up simulation

cfg.isreflect=1;

cfg.outputtype='fluence';

cfg.detpos=[sz/(2*sf)+0.5 sz/(2*sf)+0.5 N/2+0.5 N*2];

cfg.maxdetphoton=cfg.nphoton; % over 1e7 runs out of memory on my laptop

cfg.savedetflag='dpw'; % detected photons recorded parameters

% detected photon analysis

fprintf("Simulation started mua = %g mus = %g\n", mua, mus);

[flux,detps]=mcxlab(cfg);

% detector

pos_x = sf*(detps.p(:,1) - sz/(2*sf)+0.5);

pos_y = sf*(detps.p(:,2)- sz/(2*sf)+0.5);

pos_z = (detps.p(:,3));

PBM_fluence = detps.ppath(:,:); % use ppath to retrieve fluence for mua=0 (Martelli's method)

path_times = sum(PBM_fluence, 2)./v;

% PBM fluence evaluation

layer_PBM = zeros(1,L/sf); % Martelli's method (Pathlength Based Method)

layer_ABM = zeros(1,L/sf); % mcx native method (Absorption Based Method)

for k=1:N

layer_PBM(k) = mean(PBM_fluence(:,k));

AMB_flux = flux.data(:,:,k,:)*(sf^2); % fluence per time bin in k_th layer

layer_ABM(k) = sum(AMB_flux, 'all'); % fluence integrated over all time bins in k_th layer

end

data(1,:,index)=layer_PBM;

data(2,:,index)=layer_ABM;

index = index + 1;

end

%% plot fluence in one layer

layer = 1;

figure(4), hold on

plot(logspace(-15,6,N_mua), squeeze(data(1,layer,:)), 'k--')

plot(logspace(-15,6,N_mua), squeeze(data(2,layer,:)), 'b-')

ylabel('$\displaystyle \langle\Phi\rangle$ [Wmm$^{-2}]$','interpreter','latex', 'Fontsize', 16)

xlabel('$\displaystyle \mu_{\textrm{a}}$ (mm$^{-1}$)','interpreter','latex', 'Fontsize', 16)

legend('zero absorption', 'MCX')

set(gca, 'xscale', 'log')

axis([1e-15 1e6 0 6])

title(strcat('Single precision with if condition, mus=', num2str(mus), ' mm^{-1}, n=1.4')) |

|

@epini, sorry for the delay. I finally have a chance to run your test script, and now I see what you meant by a small dependency. I merged your patch, and also simplified it further with only the 1st order Taylor expansion instead of the 2nd order because they show nearly no difference in my test. Regarding the dependency, I saw you used the accumulation of the partial paths to obtain the reference solution. if you look at the 1st order Taylor expansion, I think they are essentially the same formula, so I don't see a reason the two ways should yield different result. It is likely due to a book keep issue in the code somewhere. I used I've tested two possibilities 1) disabling specular reflection using anyhow, I appreciate your patch and excited that this simple patch allows mcx to get meaningful fluence in non-absorbing medium. |

#235 was reported by Thomas Rialan in https://groups.google.com/g/mcx-users/c/3zvP5W4OGnM revert #164 to fix high error reported by Jessica Lund in https://groups.google.com/g/mcx-users/c/hr6lqpfTYbg

|

hi @epini, I found the reason why the fluence does not convert to the expected value when mua->0. It was due to an incorrect variable - after replacing |

Dear Prof. Fang,

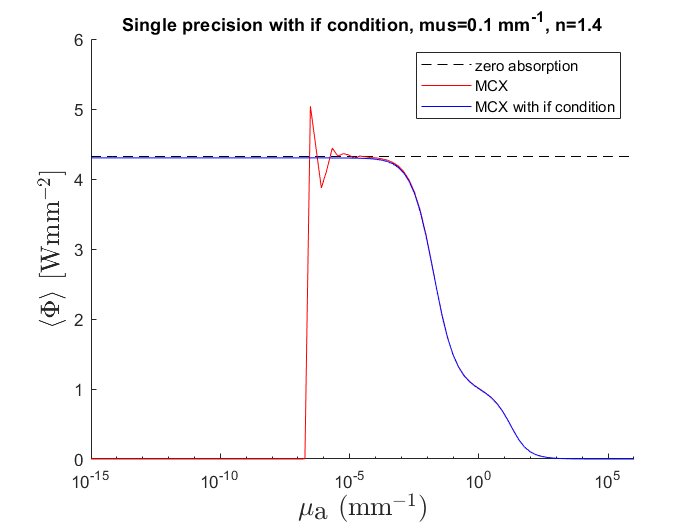

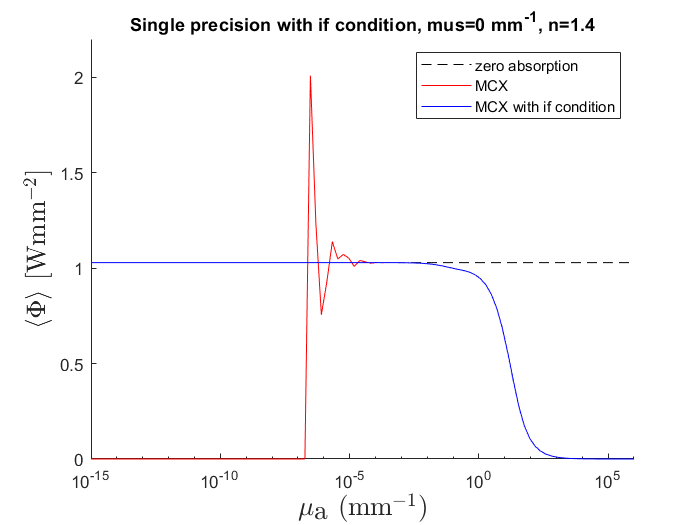

I was playing with MCXlab at low absorption values, but it seems that below a certain threshold the fluence fails numerically and becomes eventually zero. The threshold can be lowered by turning on the

DUSE_DOUBLEandDUSE_MORE_DOUBLEflags, but in principle the correct limit for low absorption is simply obtained by accumulating the appropriate Taylor expansion for the exponentialw0 * (1 - exp(-prop.mua * len)) / prop.mua.To take advantage of this one can introduce an

ifcondition depending on theprop.mua / prop.musratio instead of the currently implemented zero value forprop.mua == 0. See the picture below relative to a homogeneous medium.The dashed line value is calculated as the average pathlength in the medium (which is given by line 1894 of mcx_core.cu). Strangely enough, a small difference is visible between when the

ifcondition kicks in. A Kahan summation may be needed somewhere? Adding the sameifcondition and Taylor expansion at line 1894 returns instead a smooth convergence to the dashed value.Perhaps this is not the ideal solution, but I think it could still be preferable compared to the drastic loss of precision at low mua and the incorrect fluence value that is currently set for null absorption.