
[WIP][SPARK-35084][CORE] Spark 3: supporting "--packages" in k8s cluster mode #32397


Can I see the logs for the build and test failures?

Thank you for your contribution, @ocworld.

ok to test

Apache Spark GitHub Actions run in your own GitHub account. If you click the failure link, you can see the error log in your fork. The current failure occurs because your master branch is too outdated. Please follow the guideline to sync your master branch. In addition, I triggered Jenkins, which we keep as an additional test machine for such cases.

Kubernetes integration test starting

Kubernetes integration test status failure

@dongjoon-hyun Thank you for testing and replying.

|

Test build #138136 has finished for PR 32397 at commit

|

|

Kubernetes integration test starting |

|

Kubernetes integration test status failure |

|

Test build #138137 has finished for PR 32397 at commit

|

|

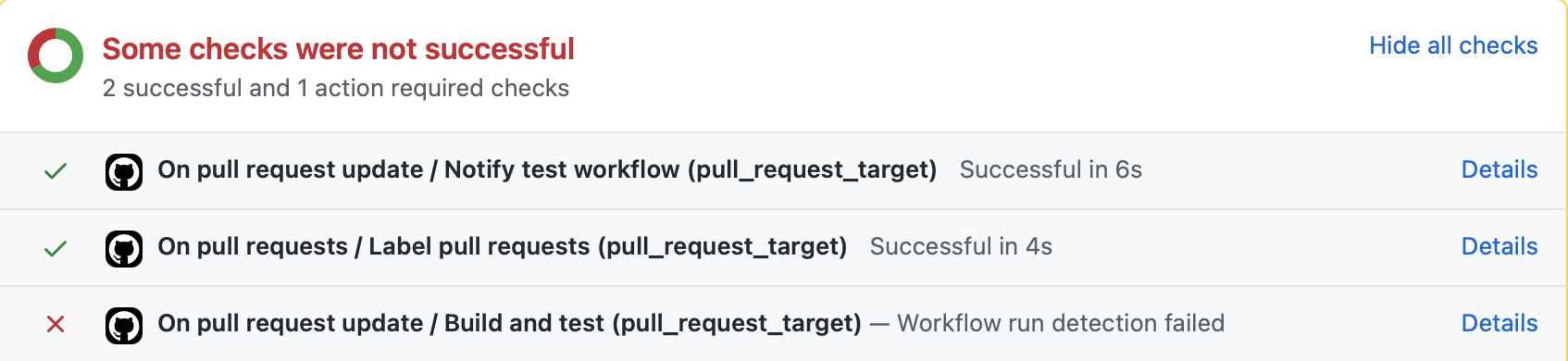

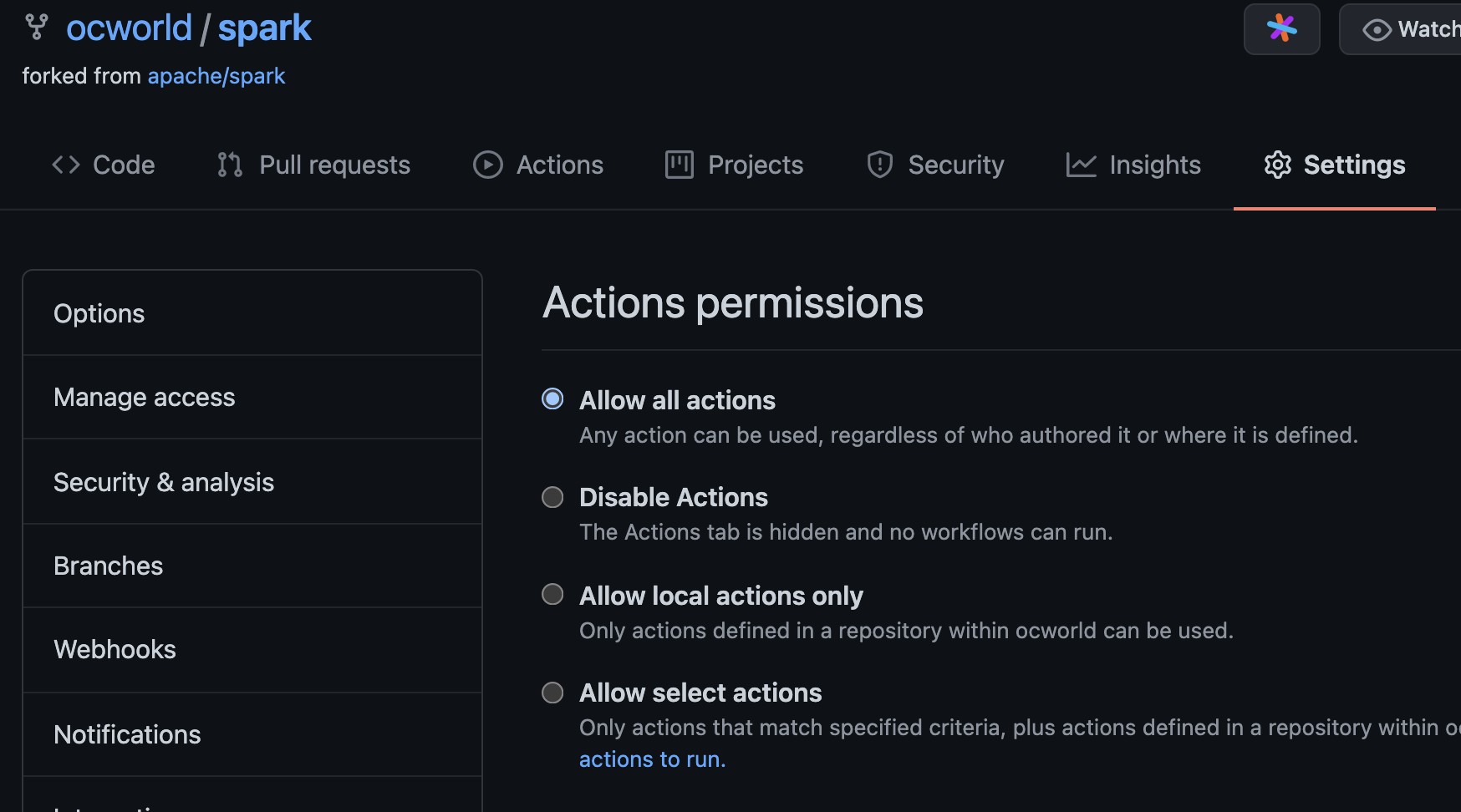

@ocworld, can you take a look at https://github.com/apache/spark/pull/32397/checks?check_run_id=2484554770 and enable GitHub Actions in your forked repository?

@HyukjinKwon The GitHub Actions setting in my forked repository is already set to allow all actions.

Can you rebase and force-push this PR to retrigger the build?

@HyukjinKwon I've already rebased and force-pushed, so everything is up to date now.

Did you do something like #32400 (comment)? GitHub Actions should run the build in your forked repository, but it seems it does not.

@HyukjinKwon Oh, you're right. The workflow in the Actions tab was not activated. It is enabled now. Thank you.

Kubernetes integration test starting

Kubernetes integration test status failure

Test build #138158 has finished for PR 32397 at commit

+CC @shardulm94, @xkrogen

dongjoon-hyun left a comment

Could you elaborate in the PR description on how you tested this? It currently says:

> How was this patch tested?
>
> In my k8s environment, I tested the code.

The best way to protect this feature is to have test coverage in BasicTestsSuite or DepsTestsSuite. Do you think you can add a new test case, @ocworld?

Kubernetes integration test unable to build dist. exiting with code: 1

@dongjoon-hyun I reviewed "BasicTestsSuite" and "DepsTestsSuite", and I think it is hard to add a test case there, because the test would need to read spark.jars from the running application's SparkContext on the driver pod. I don't have an idea of how to do that yet. Do you have any ideas for a test?

Test build #138380 has finished for PR 32397 at commit

Kubernetes integration test starting

Kubernetes integration test status failure

Test build #142659 has finished for PR 32397 at commit

Kubernetes integration test starting

Kubernetes integration test status failure

Any update on this?

Any news on when this is going to be merged?

It is not merged yet. I've been trying to add a test.

We're closing this PR because it hasn't been updated in a while. This isn't a judgement on the merit of the PR in any way. It's just a way of keeping the PR queue manageable.

Is there a solution for this?

In my case I just build the docker images (from the official repo) with the dependencies included by hand. ex: then

and at the end

Still waiting for the day this is included in a Spark release.

@dongjoon-hyun, maybe this PR could be reopened and the stale label removed, since this is still an issue? Thank you!

The repository that submitted the PR has been deleted, so we can't reopen it. But feel free to recreate it and go for it.

I'll reopen the PR soon.

@jbguerraz @holdenk The PR has been recreated as #38828.

Thank you so much for the workaround; it's a pity that this bug took two days of my life :)

@jbguerraz @GaruGaru The PR (#38828) is now merged.

Thank you @ocworld :-)

What changes were proposed in this pull request?

Support '--packages' in k8s cluster mode.

Why are the changes needed?

In Spark 3, '--packages' is not supported in k8s cluster mode. I expected to be able to manage dependencies with packages, as in Spark 2.

Spark 2.4.5

https://github.com/apache/spark/blob/v2.4.5/core/src/main/scala/org/apache/spark/deploy/SparkSubmit.scala

Spark 3.0.2

https://github.com/apache/spark/blob/v3.0.2/core/src/main/scala/org/apache/spark/deploy/SparkSubmit.scala

Unlike Spark 2, Spark 3 does not add the jars resolved from '--packages' anywhere.

Does this PR introduce any user-facing change?

Unlike Spark 2, the resolved jars are added in the driver, not by spark-submit in cluster mode.

This is because Spark 3 added a feature that uploads jars with the "file://" prefix to a remote location (S3 in my case).

So if the resolved jars were added in spark-submit, every jar from the packages would be uploaded to S3. When I tested that, it was a very bad experience.
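The placement described above can be sketched as a small, simplified model. This is a hypothetical illustration, not Spark's SparkSubmit code: the functions merge_file_lists, spark_jars_for and jars_to_upload, the in_driver flag, and the example paths are all assumptions. It only shows which side adds the resolved package jars to spark.jars in k8s cluster mode, and why keeping them off the submit side keeps them out of the file:// upload.

```python
# Hypothetical, simplified model of where jars resolved from --packages are
# added in k8s cluster mode under the approach described above.
# Not Spark's actual SparkSubmit code; all names and paths are illustrative.


def merge_file_lists(*lists: str) -> str:
    """Join comma-separated file lists, dropping empty entries."""
    parts = []
    for file_list in lists:
        parts.extend(p for p in file_list.split(",") if p)
    return ",".join(parts)


def spark_jars_for(user_jars: str, resolved_jars: str, in_driver: bool) -> str:
    """Return the spark.jars value at one stage of a k8s cluster-mode submit.

    in_driver=False models spark-submit on the client machine: the resolved
    package jars are left out, so they are not uploaded with the local jars.
    in_driver=True models the driver pod, where the resolved jars are added.
    """
    if not in_driver:
        return user_jars
    return merge_file_lists(user_jars, resolved_jars)


def jars_to_upload(spark_jars: str) -> list:
    """Local file:// jars that the submitter would upload (simplified)."""
    return [j for j in spark_jars.split(",") if j.startswith("file://")]


if __name__ == "__main__":
    # Hypothetical paths: one user jar plus one jar resolved from --packages.
    user = "file:///opt/app/app.jar"
    resolved = "file:///root/.ivy2/jars/org.example_lib-1.0.jar"

    submit_side = spark_jars_for(user, resolved, in_driver=False)
    driver_side = spark_jars_for(user, resolved, in_driver=True)

    print(jars_to_upload(submit_side))  # only the user jar is uploaded
    print(driver_side)  # the driver sees both jars
```

In this model, only the user's own jar is uploaded from the client machine, while the package jars appear in spark.jars only on the driver side.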

How was this patch tested?

I tested the code in my k8s environment.