-

Notifications

You must be signed in to change notification settings - Fork 29k

[SPARK-35664][SQL] Support java.time.LocalDateTime as an external type of TimestampWithoutTZ type #32814

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

Kubernetes integration test starting |

|

Kubernetes integration test status failure |

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/util/DateTimeUtilsSuite.scala

Outdated

Show resolved

Hide resolved

|

Test build #139447 has finished for PR 32814 at commit

|

1101f55 to

c2ebb06

Compare

|

Kubernetes integration test unable to build dist. exiting with code: 1 |

|

Test build #139469 has finished for PR 32814 at commit

|

|

Kubernetes integration test starting |

|

Kubernetes integration test status success |

|

@gengliangwang Have you done similar changes as in #31729, correct? Just want to avoid comparing of this PR to similar PRs for intervals. |

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeUtils.scala

Outdated

Show resolved

Hide resolved

| localDateTime.toEpochSecond(ZoneOffset.UTC) * MICROS_PER_SECOND + | ||

| localDateTime.getNano / NANOS_PER_MICROS |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Potentially, * and + can overflow. Could you use Math.multiplyExact

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Also, please, add tests to check long overflow.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

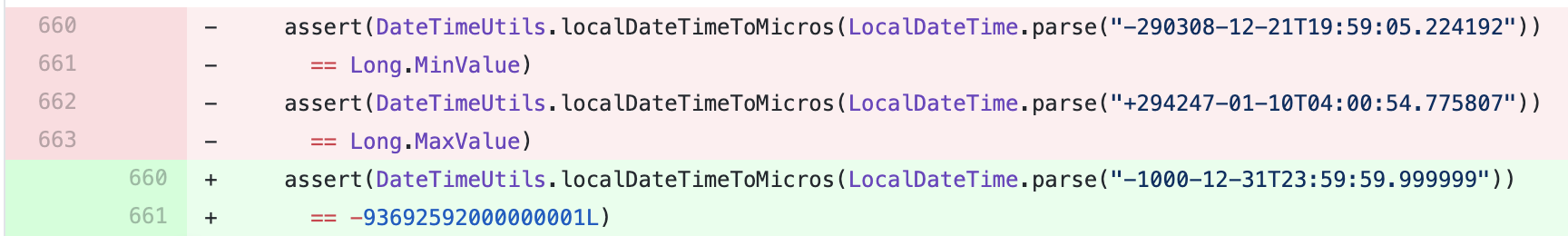

BTW, I replaced * by multiplyExact, and got:

java.lang.ArithmeticException: long overflow

at java.lang.Math.multiplyExact(Math.java:892)

at org.apache.spark.sql.catalyst.util.DateTimeUtils$.localDateTimeToMicros(DateTimeUtils.scala:79)

at org.apache.spark.sql.catalyst.util.DateTimeUtilsSuite.$anonfun$new$51(DateTimeUtilsSuite.scala:660)

on your new test:

assert(DateTimeUtils.localDateTimeToMicros(LocalDateTime.parse("-290308-12-21T19:59:05.224192"))

== Long.MinValue)…/DateTimeUtils.scala Co-authored-by: Maxim Gekk <[email protected]>

|

Test build #139487 has finished for PR 32814 at commit

|

|

@gengliangwang I wonder why did you remove the checks for Does |

@MaxGekk Yes. The Long.MinValue case contains negative seconds and positive nanoseconds. Thus it overflows when we try to convert it back. We can fix it but it is too corner. The fix makes performance worse, too. |

|

Kubernetes integration test starting |

|

Kubernetes integration test starting |

@gengliangwang Could you open an JIRA for the issue, so, we will fix this separately from this PR. |

|

Kubernetes integration test status success |

|

Kubernetes integration test status success |

…UserDefinedTypeSuite ### What changes were proposed in this pull request? Refactor LocalDateTimeUDT as YearUDT in UserDefinedTypeSuite ### Why are the changes needed? As we are going to support java.time.LocalDateTime as an external type of TimestampWithoutTZ type #32814, registering java.time.LocalDateTime as UDT will cause test failures: https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/139469/testReport/ This PR is to unblock #32814. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Unit test. Closes #32824 from gengliangwang/UDTFollowUp. Authored-by: Gengliang Wang <[email protected]> Signed-off-by: Gengliang Wang <[email protected]>

|

Kubernetes integration test starting |

|

Kubernetes integration test status failure |

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/util/DateTimeUtilsSuite.scala

Outdated

Show resolved

Hide resolved

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/util/DateTimeUtilsSuite.scala

Outdated

Show resolved

Hide resolved

|

Test build #139538 has finished for PR 32814 at commit

|

|

Kubernetes integration test starting |

|

Test build #139520 has finished for PR 32814 at commit

|

|

@cloud-fan @MaxGekk Thanks for the review. |

|

Kubernetes integration test status success |

What changes were proposed in this pull request?

In the PR, I propose to extend Spark SQL API to accept

java.time.LocalDateTimeas an external type of recently added new Catalyst type -TimestampWithoutTZ. The Java classjava.time.LocalDateTimehas a similar semantic to ANSI SQL timestamp without timezone type, and it is the most suitable to be an external type forTimestampWithoutTZType. In more details:TimestampWithoutTZConverterwhich converts java.time.LocalDateTime instances to/from internal representation of the Catalyst typeTimestampWithoutTZType(to Long type). TheTimestampWithoutTZConverterobject uses new methods of DateTimeUtils:TimestampWithoutTZTypein RowEncoder via the methods createDeserializerForLocalDateTime() and createSerializerForLocalDateTime().java.time.LocalDateTimeinstances.Why are the changes needed?

To allow users parallelization of

java.time.LocalDateTimecollections, and construct timestamp without time zone columns. Also to collect such columns back to the driver side.Does this PR introduce any user-facing change?

The PR extends existing functionality. So, users can parallelize instances of the java.time.LocalDateTime class and collect them back.

How was this patch tested?

New unit tests