-

Notifications

You must be signed in to change notification settings - Fork 213

OTA-844: pkg/cvo/metrics: Add 'reason' to cluster_operator_up #868

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

OTA-844: pkg/cvo/metrics: Add 'reason' to cluster_operator_up #868

Conversation

| g.Set(0) | ||

| isUp := 0 | ||

| var reason := "NoAvailableCondition" | ||

| if condition := resourcemerge.FindOperatorStatusCondition(op.Status.Conditions, configv1.OperatorAvailable); condition != nil { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Metric cluster_operator_up is equivalent to metric cluster_operator_conditions{condition="Available"}.

What is the point of keeping it?

Also semantic of cluster_operator_conditions metric is clear (I do not have to look at the code producing that metric to know what it does); semantic of metric cluster_operator_up is less clear.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Metric

cluster_operator_upis equivalent to metriccluster_operator_conditions{condition="Available"}.

cluster_operator_up also includes the operator version from the ClusterOperator. And it detects "ClusterOperator exists but Available is unset", where cluster_operator_conditions has no time-series.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Also semantic of

cluster_operator_conditionsmetric is clear (I do not have to look at the code producing that metric to know what it does)...

I'm completely onboard with improving help-text for cluster_operator_up.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

cluster_operator_up also includes the operator version from the ClusterOperator.

Couldn't the cluster_operator_conditions metric ship the version as well?

And it detects "ClusterOperator exists but Available is unset", where cluster_operator_conditions has no time-series.

Well, here cluster_operator_up will be set to 0 whether the Available condition is unset to set to 0. Only cluster_operator_conditions{condition="Available"} metric can be use to distinguish it from being unset or set to 0.

I'm completely onboard with improving help-text for cluster_operator_up.

Thanks for that, but as said, just with its name, I know what cluster_operator_conditions does. I have to dig further to know what cluster_operator_up does.

Last, please note that in some other request (OSD-8320), we have been asked to use the reason of the Degraded condition in conjunction with cluster_operator_up to inhibit ClusterOperatorDown alert.

Maybe this is a misstake as it would be strange to pick up the value from one metric (cluster_operator_conditions{condition="Available"}) and the reason from an other (cluster_operator_conditions{condition="Degraded"}); but hits is puzzling to me and need to be clarified.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Couldn't the

cluster_operator_conditionsmetric ship the version as well?

It could, but the version is ClusterOperator-scoped, and not condition-scoped. cluster_operator_up is ClusterOperator-scoped, so it's a good fit there. cluster_operator_conditions is condition-scoped, so it's not a good fit there (would you copy the same version on to time-series for every one of the operator's conditions?). You could obviously also add a new ClusterOperator-scoped metric whose sole purpose was exposing the version information, but 🤷, that seems like a lot of churn, and I'm not clear on the benefit.

Only

cluster_operator_conditions{condition="Available"}metric can be use to distinguish it from being unset or set to 0.

With this pull request, you can make that distinction by seeing if the cluster_operator_up == 0 reason is NoAvailableCondition or otherwise. But as far as "when to page an admin?" goes, I don't think that distinction is all that important. Both are bad enough that we want to be paging admins.

I know what

cluster_operator_conditionsdoes. I have to dig further to know whatcluster_operator_updoes.

I don't mind if other cluster-version-operator approvers want to override me, but personally "many metrics consumers are able to intuit the semantics without having to read docs" is a useful property, and worthy of aspiring to, but not something that must be delivered in order for a metric to exist.

Last, please note that in some other request (OSD-8320), we have been asked to use the reason of the Degraded condition in conjunction with

cluster_operator_upto inhibitClusterOperatorDownalert.

That seems like an anti-pattern to me, since Degraded=True is theoretically orthogonal to Available=False. And it's Available=False (or absent) that are driving ClusterOperatorDown. We've had it decoupled from Degraded since #550. That said, my push-back is just that I don't want to re-mix Degradedness into ClusterOperatorDown; I have no problem at all if consumers like OSD decide to join ClusterOperatorDown with Degraded information when deciding if/how to respond.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for the feedback Trevor.

As my remark is more a matter of preference, I am ok to close this discussion.

My worries were linked to OSD-8320 which is asking to use a different condition for the reason. From what I understand using the reason coming from the Available condition is perfectly fine with the silences defined there:

openshift/configure-alertmanager-operator@db85a6d

(although I wonder if it would be better to not silence the alert and let CAD process it by sending an automated SL to the customer)

70f969d to

917170d

Compare

|

Feeding e2e-agnostic into PromeCIeus, However, sharding by |

| description: The {{ "{{ $labels.name }}" }} operator may be down or disabled because {{ "${{ $labels.reason }}" }}, and the components it manages may be unavailable or degraded. Cluster upgrades may not complete. For more information refer to 'oc get -o yaml clusteroperator {{ "{{ $labels.name }}" }}'{{ "{{ with $console_url := \"console_url\" | query }}{{ if ne (len (label \"url\" (first $console_url ) ) ) 0}} or {{ label \"url\" (first $console_url ) }}/settings/cluster/{{ end }}{{ end }}" }}. | ||

| expr: | | ||

| max by (namespace, name) (cluster_operator_up{job="cluster-version-operator"} == 0) | ||

| max by (namespace, name, reason) (cluster_operator_up{job="cluster-version-operator"} == 0) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm still avoiding the cardinality hit of including the full message in the metric, because we don't want to load Prometheus down with that many time-series. For message-level granularity, users still have to follow the oc ... or web-console links from the alert description.

I am not clear how much cardinality increase we are looking at by adding reason. I am still not clear about the motivation.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I am not clear how much cardinality increase we are looking at by adding reason.

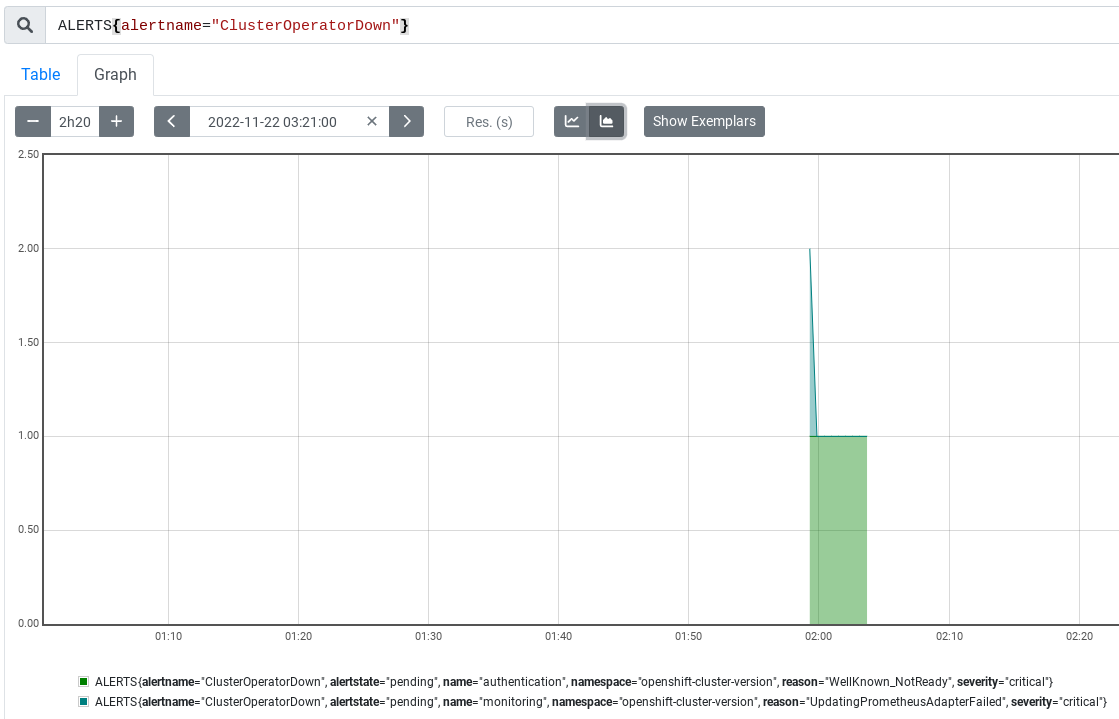

We already have reason cardinality on cluster_operator_conditions, and that seems ok. Checking 4.11 Telemetry:

sort_desc(

count by (name) (

count by (name, reason) (

(cluster_operator_conditions{condition="Available"} == 0)

+ on (_id) group_left ()

group by (_id) (cluster_version{type="current",version=~"4[.]11[.][0-9]*"})

)

)

)

gives:

{name="authentication"} 16

{name="monitoring"} 10

{name="storage"} 6

{name="console"} 5

{name="image-registry"} 3

...

so the cardinality increase would be mostly limited to those particular operators.

message cardinality will be higher, potentially including things like request port numbers, API response IDs, and that sort of thing.

I am still not clear about the motivation.

Cluster admins like SRE-P get a ClusterOperatorDown alert. They wonder if it's a true-positive (actual cluster pain; needs quick manual intervention) or a false-positive (operator is over-reacting, cluster is actually ok, or will soon self-heal to become ok). Splitting ClusterOperatorDown by name gives you some granularity (e.g. you can decide whether a particular component like etcd is often a true-positive or often a false-positive. But splitting by (name, reason) tuples gives more granularity, so you can more precisely separate likely-to-be-a-false-positive from likely-to-be-a-true-positive.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

{name="authentication"} 16

Digging into this one out of curiousity:

group by (reason) (

(cluster_operator_conditions{name="authentication",condition="Available"} == 0)

+ on (_id) group_left ()

group by (_id) (cluster_version{type="current",version=~"4[.]11[.][0-9]*"})

)

gives:

{reason="OAuthServerDeployment_NoPod::OAuthServerRouteEndpointAccessibleController_EndpointUnavailable::OAuthServerServiceEndpointAccessibleController_EndpointUnavailable"} 1

{reason="OAuthServerRouteEndpointAccessibleController_EndpointUnavailable"} 1

{reason="WellKnown_NotReady"} 1

{reason="OAuthServerDeployment_NoPod"} 1

{reason="APIServerDeployment_NoPod::APIServices_PreconditionNotReady"} 1

{reason="APIServices_PreconditionNotReady"} 1

{reason="OAuthServerRouteEndpointAccessibleController_EndpointUnavailable::WellKnown_NotReady"} 1

{reason="APIServerDeployment_NoPod"} 1

{reason="APIServerDeployment_NoPod::APIServices_PreconditionNotReady::WellKnown_NotReady"} 1

{reason="APIServerDeployment_NoPod::APIServices_PreconditionNotReady::OAuthServerServiceEndpointAccessibleController_EndpointUnavailable::WellKnown_NotReady"} 1

{reason="APIServices_Error"} 1

{reason="OAuthServerRouteEndpointAccessibleController_EndpointUnavailable::OAuthServerServiceEndpointAccessibleController_EndpointUnavailable::WellKnown_NotReady"} 1

So if reason cardinality is a concern, we can reduce it somewhat (at the cost of reduced granularity) by collecting multiple-reason conditions under MultipleReasons (like the cluster-version operator does for some of its conditions) instead of concatenating on ::.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Cluster admins like SRE-P get a ClusterOperatorDown alert. They wonder if it's a true-positive (actual cluster pain; needs quick manual intervention) or a false-positive (operator is over-reacting, cluster is actually ok, or will soon self-heal to become ok). Splitting ClusterOperatorDown by name gives you some granularity (e.g. you can decide whether a particular component like etcd is often a true-positive or often a false-positive. But splitting by (name, reason) tuples gives more granularity, so you can more precisely separate likely-to-be-a-false-positive from likely-to-be-a-true-positive.

I'm not entirely convinced about this. Do we want to optimize for what is basically an OpenShift bug (operator down should never be a false-positive thing to be ignored, right?). I feel that we'd just add some tech jargon to admin-facing messages, after admin-ing the CI clusters myself for some time I'm not sure if I found that too useful tbh.

That said, it does not seem the cost of adding the labels is too high, and I can imagine they can be useful when troubleshooting, diagnosis or maybe conditional risks.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm not entirely convinced about this. Do we want to optimize for what is basically an OpenShift bug (operator down should never be a false-positive thing to be ignored, right?). I feel that we'd just add some tech jargon to admin-facing messages, after admin-ing the CI clusters myself for some time I'm not sure if I found that too useful tbh.

It is true that the aim of adding the reason is to silence the alert for some reasons as done here:

openshift/configure-alertmanager-operator@db85a6d

It would (maybe) be better to not silence the alert and let CAD (Cluster Anomaly Detection) deal with the alert and send the proper SL to the customer.

@wking & @petr-muller : what do you think about that?

Maybe we should open a card on our side to track that suggestion.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It would (maybe) be better to not silence the alert and let CAD (Cluster Anomaly Detection) deal with the alert and send the proper SL to the customer.

An issues with automatically service-logging on these alerts is that, while sometimes they are things the customer can fix (hooray). sometimes they are things that SRE-P should fix for the customer (that's why customers pay us to manage clusters). For example:

- Sometimes the alert is really a false-positive. The appropriate action is to file an OCPBUGS ticket, which then gets fixed, and the fixes ship, and then clusters get updated to pick up the fix. That can still be a long turnaround time, and because some managed offerings like OSD defer control-plane patch updates to the customer, the turnaround time can be very slow. Silences give service delivery something to de-noise in the meantime. Customers don't want to be bothered by this loop.

- Sometimes the alert is a true-positive, but it's something SRE-P is supposed to fix without bothering the customer. E.g.

monitoring'sUpdatingPrometheusOperatorFailedis probably not something that the customer wants to be bothered about.

To give users sub-component granularity about why they're getting a critical alert. I'm still avoiding the cardinality hit of including the full message in the metric, because we don't want to load Prometheus down with *that* many time-series. For message-level granularity, users still have to follow the 'oc ...' or web-console links from the alert description.

917170d to

bbcc33d

Compare

| annotations: | ||

| summary: Cluster operator has not been available for 10 minutes. | ||

| description: The {{ "{{ $labels.name }}" }} operator may be down or disabled, and the components it manages may be unavailable or degraded. Cluster upgrades may not complete. For more information refer to 'oc get -o yaml clusteroperator {{ "{{ $labels.name }}" }}'{{ "{{ with $console_url := \"console_url\" | query }}{{ if ne (len (label \"url\" (first $console_url ) ) ) 0}} or {{ label \"url\" (first $console_url ) }}/settings/cluster/{{ end }}{{ end }}" }}. | ||

| description: The {{ "{{ $labels.name }}" }} operator may be down or disabled because {{ "${{ $labels.reason }}" }}, and the components it manages may be unavailable or degraded. Cluster upgrades may not complete. For more information refer to 'oc get -o yaml clusteroperator {{ "{{ $labels.name }}" }}'{{ "{{ with $console_url := \"console_url\" | query }}{{ if ne (len (label \"url\" (first $console_url ) ) ) 0}} or {{ label \"url\" (first $console_url ) }}/settings/cluster/{{ end }}{{ end }}" }}. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

looking at your authentication examples below, will we produce UI messages like "...down or disable because OAuthServerRouteEndpointAccessibleController_EndpointUnavailable::WellKnown_NotReady...".

If yes, is that desirable?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

err... I guess? I could see truncating on 50 characters or something if we felt like ClusterOperator writers couldn't be trusted. I could also see asking the auth and other folks to consolidate to MultipleReasons or similar, as a less open-ended slug.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Length is one thing but this also feels like techy clutter in what is otherwise a natural language sequence

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I have also concern about putting words which can only OpenShift engineers can understand. However it is not better than not putting the reasons e.g. https://issues.redhat.com/browse/OSD-8320. If this turn out to be a bad idea we can revert it back.

| description: The {{ "{{ $labels.name }}" }} operator may be down or disabled because {{ "${{ $labels.reason }}" }}, and the components it manages may be unavailable or degraded. Cluster upgrades may not complete. For more information refer to 'oc get -o yaml clusteroperator {{ "{{ $labels.name }}" }}'{{ "{{ with $console_url := \"console_url\" | query }}{{ if ne (len (label \"url\" (first $console_url ) ) ) 0}} or {{ label \"url\" (first $console_url ) }}/settings/cluster/{{ end }}{{ end }}" }}. | ||

| expr: | | ||

| max by (namespace, name) (cluster_operator_up{job="cluster-version-operator"} == 0) | ||

| max by (namespace, name, reason) (cluster_operator_up{job="cluster-version-operator"} == 0) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Cluster admins like SRE-P get a ClusterOperatorDown alert. They wonder if it's a true-positive (actual cluster pain; needs quick manual intervention) or a false-positive (operator is over-reacting, cluster is actually ok, or will soon self-heal to become ok). Splitting ClusterOperatorDown by name gives you some granularity (e.g. you can decide whether a particular component like etcd is often a true-positive or often a false-positive. But splitting by (name, reason) tuples gives more granularity, so you can more precisely separate likely-to-be-a-false-positive from likely-to-be-a-true-positive.

I'm not entirely convinced about this. Do we want to optimize for what is basically an OpenShift bug (operator down should never be a false-positive thing to be ignored, right?). I feel that we'd just add some tech jargon to admin-facing messages, after admin-ing the CI clusters myself for some time I'm not sure if I found that too useful tbh.

That said, it does not seem the cost of adding the labels is too high, and I can imagine they can be useful when troubleshooting, diagnosis or maybe conditional risks.

LalatenduMohanty

left a comment

LalatenduMohanty

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

/lgtm

|

[APPROVALNOTIFIER] This PR is APPROVED This pull-request has been approved by: LalatenduMohanty, wking The full list of commands accepted by this bot can be found here. The pull request process is described here DetailsNeeds approval from an approver in each of these files:

Approvers can indicate their approval by writing |

|

/retest |

|

/override ci/prow/e2e-agnostic-upgrade-into-change |

|

@wking: Overrode contexts on behalf of wking: ci/prow/e2e-agnostic-upgrade-into-change DetailsIn response to this:

Instructions for interacting with me using PR comments are available here. If you have questions or suggestions related to my behavior, please file an issue against the kubernetes/test-infra repository. |

|

@wking: all tests passed! Full PR test history. Your PR dashboard. DetailsInstructions for interacting with me using PR comments are available here. If you have questions or suggestions related to my behavior, please file an issue against the kubernetes/test-infra repository. I understand the commands that are listed here. |

…om ${{

Fixing a ClusterOperatorDown typo from bbcc33d (pkg/cvo/metrics:

Add 'reason' to cluster_operator_up, 2022-11-21, openshift#868) that I'd

carried forward into ClusterReleaseNotAccepted in 734b9c5

(install/0000_90_cluster-version-operator_02_servicemonitor: Add

ClusterReleaseNotAccepted, 2023-02-23, openshift#906) [1].

[1]: https://issues.redhat.com/browse/OCPBUGS-8079

…om ${{

Fixing a ClusterOperatorDown typo from bbcc33d (pkg/cvo/metrics:

Add 'reason' to cluster_operator_up, 2022-11-21, openshift#868) that I'd

carried forward into ClusterReleaseNotAccepted in 734b9c5

(install/0000_90_cluster-version-operator_02_servicemonitor: Add

ClusterReleaseNotAccepted, 2023-02-23, openshift#906) [1].

[1]: https://issues.redhat.com/browse/OCPBUGS-8079

To give users sub-component granularity about why they're getting a critical alert.

I'm still avoiding the cardinality hit of including the full message in the metric, because we don't want to load Prometheus down with that many time-series. For message-level granularity, users still have to follow the

oc ...or web-console links from the alertdescription.