
[SPARK-30490][SQL] Eliminate compiler warnings in Avro datasource #27174


Test build #116528 has finished for PR 27174 at commit


```scala
   * If the option is not set, the Hadoop's config `avro.mapred.ignore.inputs.without.extension`
   * is taken into account. If the former one is not set too, file extensions are ignored.
   */
  @deprecated("Use the general data source option pathGlobFilter for filtering file names", "3.0")
```


Why remove this if it's really deprecated? I get that it will remove some compiler warnings, but that's not super important, or it can be worked around as you do elsewhere, by deprecating the test methods too?


Sean, deprecating the value doesn't make any sense because it is not used by users.


OK, the class appears to be public though; is it definitely not meant to be accessed for other reasons?

…On Sat, Jan 11, 2020 at 10:05 AM Maxim Gekk ***@***.***> wrote:

> Sean, deprecating of the value doesn't make any sense because it is not used by users.
>
> On Sat, Jan 11, 2020 at 18:32, Sean Owen ***@***.***> wrote:
>
> > ***@***.**** commented on this pull request.
> >
> > In external/avro/src/main/scala/org/apache/spark/sql/avro/AvroOptions.scala:
> >
> > @@ -68,8 +68,10 @@ class AvroOptions(
> >   * If the option is not set, the Hadoop's config `avro.mapred.ignore.inputs.without.extension`
> >   * is taken into account. If the former one is not set too, file extensions are ignored.
> >   */
> > - @deprecated("Use the general data source option pathGlobFilter for filtering file names", "3.0")
> >
> > Why remove this if it's really deprecated? I get that it will remove some compiler warnings, but, that's not super important, or can be worked around as you do elsewhere by deprecating the test methods too?


`AvroOptions` (like other option classes such as `CSVOptions`) shouldn't be accessible to users. Deprecating values inside `AvroOptions` is similar to deprecating config entries inside `SQLConf`: the values are not visible to users, so users never see the compiler warnings.
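To illustrate the argument: Scala's `@deprecated` only produces a compile-time warning where the annotated member is referenced in source code. Users set the option through a string key, so they never reference the member and never see the warning; only internal code that reads it does. A minimal sketch, in which `ToyAvroOptions`, `userOptions` and `internalRead` are hypothetical stand-ins, not Spark's classes:

```scala
// Hypothetical stand-in for an internal options class such as AvroOptions.
class ToyAvroOptions(parameters: Map[String, String]) {
  // Referencing this member in source code elsewhere triggers a compile-time
  // deprecation warning; looking up the string key below does not.
  @deprecated("Use the general data source option pathGlobFilter", "3.0")
  val ignoreExtension: Boolean =
    parameters.get("ignoreExtension").map(_.toBoolean).getOrElse(true)
}

// A user only ever supplies a key/value pair of strings (as with
// spark.read.option("ignoreExtension", "false")), so no warning appears here.
val userOptions = Map("ignoreExtension" -> "false")

// Internal code that reads the member is the only place the compiler warns.
def internalRead(opts: ToyAvroOptions): Boolean = opts.ignoreExtension
```

So the annotation mostly adds warnings to Spark's own build, which is the motivation for removing it and warning at runtime instead.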


@gengliangwang @HyukjinKwon Could you take a look at the PR?

```scala
   */
  @deprecated("Use the general data source option pathGlobFilter for filtering file names", "3.0")
  val ignoreExtension: Boolean = {
    def warn(s: String): Unit = logWarning(
```


Why do we define a separate method?


Hmm, to reuse the same code in two places.


I don't feel strongly, but I think it's fine not to do it...

```scala
      .getOrElse(!ignoreFilesWithoutExtension)
      .map { ignoreExtensionOption =>
        if (ignoreExtensionOption != !ignoreFilesWithoutExtensionByDefault) {
          warn(s"The Avro option '${AvroOptions.ignoreExtensionKey}'")
```
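The option resolution in the diff fragment above, including the local `warn` helper discussed in this thread, can be sketched as a standalone function. This is a hypothetical sketch, not Spark's code: the Hadoop configuration is modeled as a plain `Map`, warnings are collected in a buffer instead of going through `logWarning`, the warning text is made up, and `ignoreFilesWithoutExtensionByDefault` is assumed to be `false`.

```scala
import scala.collection.mutable.ArrayBuffer

// Assumed names and default; they mirror the diff but are illustrative only.
val ignoreExtensionKey = "ignoreExtension"
val hadoopKey = "avro.mapred.ignore.inputs.without.extension"
val ignoreFilesWithoutExtensionByDefault = false

// Stands in for logWarning so the emitted warnings can be inspected.
val warnings = ArrayBuffer.empty[String]

def resolveIgnoreExtension(
    parameters: Map[String, String],
    hadoopConf: Map[String, String]): Boolean = {
  // Local helper shared by both checks, so the message template is written once.
  def warn(s: String): Unit =
    warnings += s"$s is deprecated; use pathGlobFilter instead."

  val ignoreFilesWithoutExtension = hadoopConf
    .get(hadoopKey)
    .map(_.toBoolean)
    .getOrElse(ignoreFilesWithoutExtensionByDefault)
  // Warn only when the Hadoop config was set to its non-default value.
  if (ignoreFilesWithoutExtension != ignoreFilesWithoutExtensionByDefault) {
    warn(s"The Hadoop's config '$hadoopKey'")
  }

  parameters
    .get(ignoreExtensionKey)
    .map(_.toBoolean)
    .map { ignoreExtensionOption =>
      // Warn only when the Avro option was set to its non-default value.
      if (ignoreExtensionOption != !ignoreFilesWithoutExtensionByDefault) {
        warn(s"The Avro option '$ignoreExtensionKey'")
      }
      ignoreExtensionOption
    }
    .getOrElse(!ignoreFilesWithoutExtension)
}
```

Because both checks call the same local `warn`, inlining it would only duplicate the message template, so either choice is reasonable here.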


@MaxGekk, from a cursory look, this warning can be shown for every file, which I think is noisy:

Lines 24 to 30 in 053dd85

```scala
abstract class FilePartitionReaderFactory extends PartitionReaderFactory {
  override def createReader(partition: InputPartition): PartitionReader[InternalRow] = {
    assert(partition.isInstanceOf[FilePartition])
    val filePartition = partition.asInstanceOf[FilePartition]
    val iter = filePartition.files.toIterator.map { file =>
      PartitionedFileReader(file, buildReader(file))
    }
```

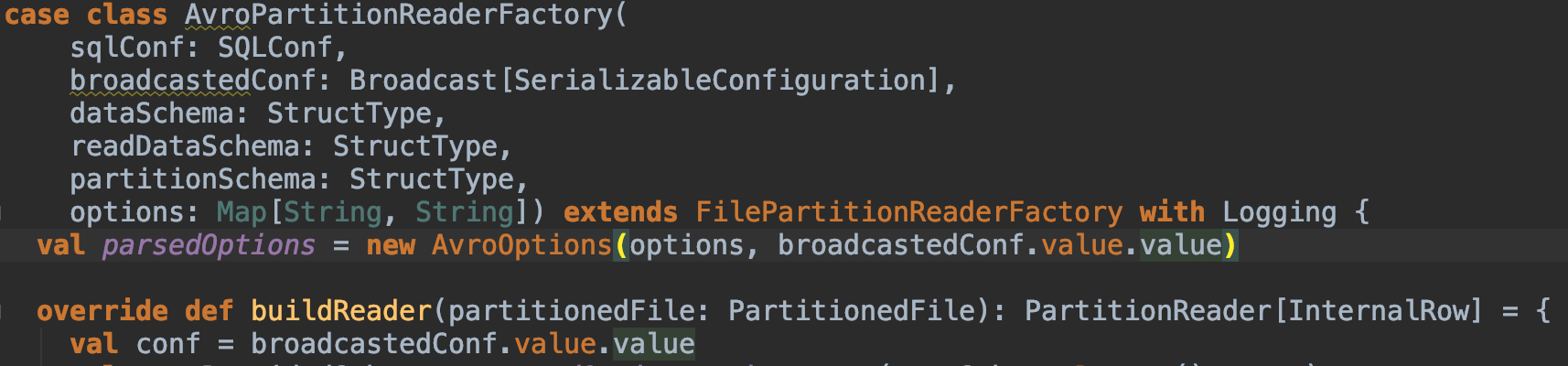

spark/external/avro/src/main/scala/org/apache/spark/sql/v2/avro/AvroPartitionReaderFactory.scala

Line 61 in 053dd85

```scala
val parsedOptions = new AvroOptions(options, conf)
```

Would you mind double-checking this?


@HyukjinKwon I will check that, but my general thoughts are:

- The warning is printed only if a user sets non-default config values.
- I don't think `AvroOptions` should be created (initialized from scratch) for each file, if that is what the current implementation does. It is not necessary to initialize `AvroOptions` again and again; after all, `AvroOptions` should be the same for all files/partitions.
- And the noise in the logs will push people to stop using the deprecated options ;-)
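The difference between building the options per file and building them once per reader factory can be shown with a simple counter. This sketch uses hypothetical classes (`ToyOptions`, `PerFileFactory`, `SharedFactory`, `OptionsCounter`) rather than Spark's `AvroOptions` and `AvroPartitionReaderFactory`; the counter stands in for the log warning emitted while the options are constructed.

```scala
// Hypothetical counter standing in for "a deprecation warning was logged".
object OptionsCounter { var created = 0 }

final class ToyOptions(parameters: Map[String, String]) {
  OptionsCounter.created += 1 // in the PR, warnings are logged during construction
  val ignoreExtension: Boolean =
    parameters.get("ignoreExtension").map(_.toBoolean).getOrElse(true)
}

// Parses the options inside the per-file method: one instance per file.
final class PerFileFactory(options: Map[String, String]) {
  def buildReader(file: String): Boolean = new ToyOptions(options).ignoreExtension
}

// Parses the options once, when the factory is constructed, and reuses them.
final class SharedFactory(options: Map[String, String]) {
  private val parsedOptions = new ToyOptions(options)
  def buildReader(file: String): Boolean = parsedOptions.ignoreExtension
}
```

With three files, the per-file variant constructs the options three times, while the shared variant constructs them once.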


@HyukjinKwon you are right, it prints a warning for each partition. I confirmed that with this test:

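```scala
// Imports needed by the test (log4j 1.x API, as used by Spark 3.0).
import scala.collection.mutable.ArrayBuffer
import org.apache.log4j.AppenderSkeleton
import org.apache.log4j.spi.LoggingEvent

// Runs inside AvroSuite: withTempPath, withLogAppender, spark and toDF come
// from Spark's SQL test helpers.
test("count deprecation log events") {
  val partitionNum = 3
  // Collects every log event emitted while the appender is attached.
  val logAppender = new AppenderSkeleton {
    val loggingEvents = new ArrayBuffer[LoggingEvent]()
    override def append(loggingEvent: LoggingEvent): Unit = loggingEvents.append(loggingEvent)
    override def close(): Unit = {}
    override def requiresLayout(): Boolean = false
  }
  withTempPath { dir =>
    // Write a small dataset split into `partitionNum` Avro files.
    Seq(("a", 1, 2), ("b", 1, 2), ("c", 2, 1), ("d", 2, 1))
      .toDF("value", "p1", "p2")
      .repartition(partitionNum)
      .write
      .format("avro")
      .option("header", true)
      .save(dir.getCanonicalPath)
    withLogAppender(logAppender) {
      // Read it back with the deprecated option set to its non-default value.
      val df = spark
        .read
        .format("avro")
        .schema("value STRING, p1 INTEGER, p2 INTEGER")
        .option(AvroOptions.ignoreExtensionKey, false)
        .option("header", true)
        .load(dir.getCanonicalPath)
      df.count()
    }
    // Keep only the warnings that mention the deprecated option.
    val deprecatedEvents = logAppender.loggingEvents
      .map(_.getRenderedMessage)
      .filter(_.contains(AvroOptions.ignoreExtensionKey))
    // One warning per partition: AvroOptions is created for every partition.
    assert(deprecatedEvents.size === partitionNum)
  }
}
```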


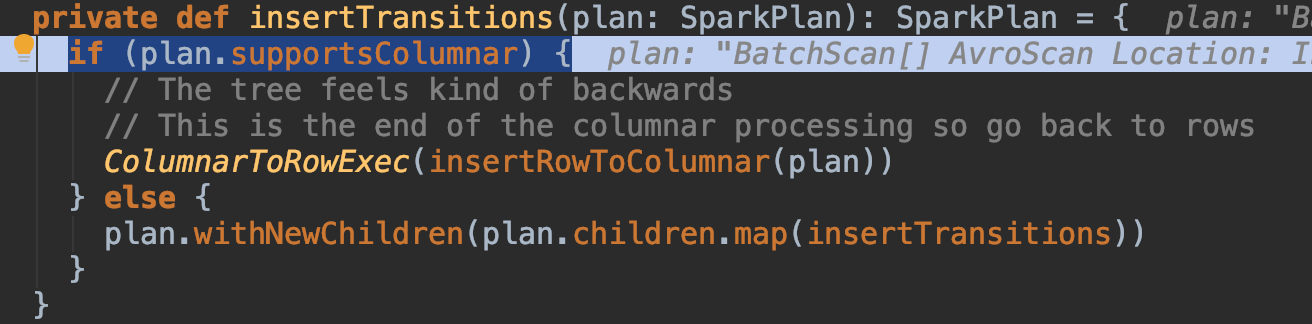

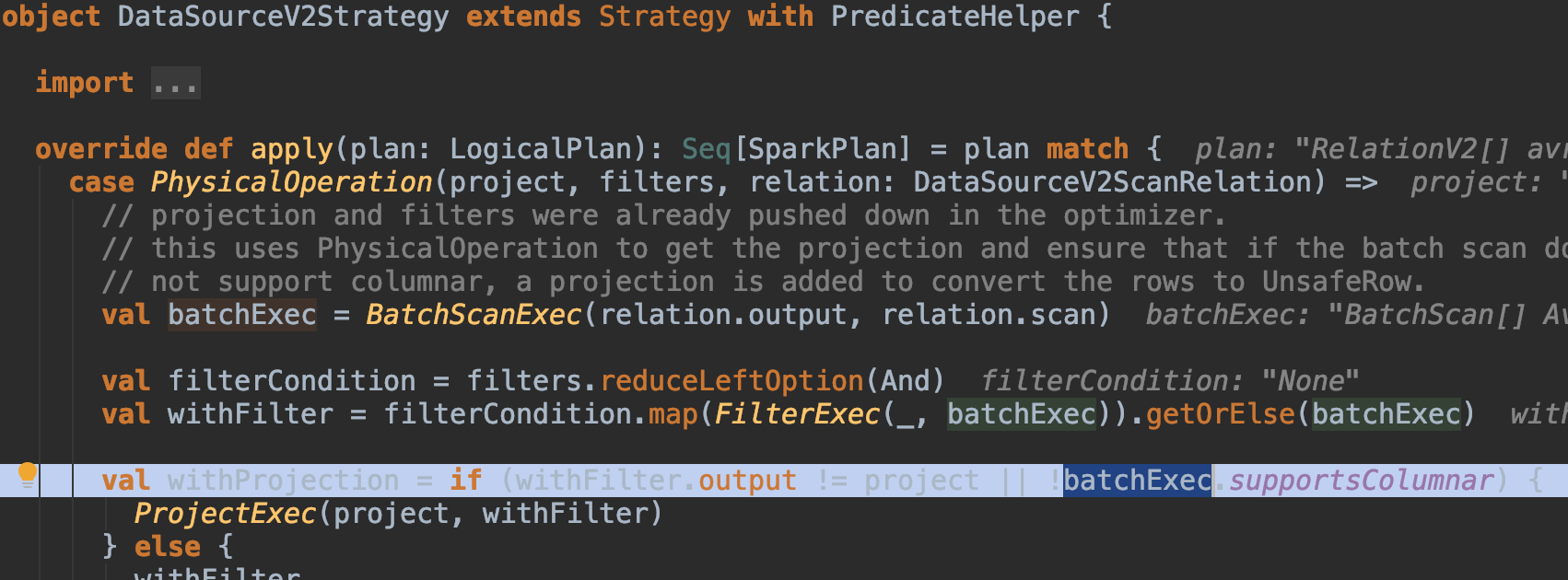

When I moved the instantiation of `AvroOptions` out of `buildReader()`, the warning was always printed twice. That means `AvroPartitionReaderFactory` is constructed twice, both times from:

Lines 60 to 66 in 053dd85

```scala
override def supportsColumnar: Boolean = {
  require(partitions.forall(readerFactory.supportColumnarReads) ||
    !partitions.exists(readerFactory.supportColumnarReads),
    "Cannot mix row-based and columnar input partitions.")
  partitions.exists(readerFactory.supportColumnarReads)
}
```

The first time from:

The second time from:


Interestingly, rewriting `supportsColumnar` as:

```scala
override val supportsColumnar: Boolean = {
  val factory = readerFactory
  require(partitions.forall(factory.supportColumnarReads) ||
    !partitions.exists(factory.supportColumnarReads),
    "Cannot mix row-based and columnar input partitions.")
  partitions.exists(factory.supportColumnarReads)
}
```

does not help either, because `DataSourceV2ScanExecBase` is initialized twice, from:

First time:

Second time in TreeNode.makeCopy:

Making `supportsColumnar` a `lazy val` doesn't help either, because `supportsColumnar` is invoked on two different objects.
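Why a `lazy val` cannot prevent the second construction: it caches its value per instance, and `TreeNode.makeCopy` produces a new instance, so the cached value does not carry over. A hypothetical sketch in which a case class `copy()` stands in for `makeCopy` and `ToyScanExec` stands in for `DataSourceV2ScanExecBase`:

```scala
// Hypothetical counter standing in for "a reader factory was constructed".
object FactoryCounter { var created = 0 }

final case class ToyScanExec(partitions: Seq[Int]) {
  // Evaluated at most once per instance, on first access.
  lazy val readerFactory: String = {
    FactoryCounter.created += 1
    "factory"
  }
  lazy val supportsColumnar: Boolean = readerFactory.nonEmpty && partitions.nonEmpty
}
```

Repeated calls on one instance build the factory once, but calling `supportsColumnar` on a copy builds it again.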


I don't think it's good that we construct some classes twice when it isn't necessary. WDYT? /cc @cloud-fan @dongjoon-hyun


Yeah, we shouldn't instantiate it twice, but that's not a big problem. I'm more worried that we instantiate it for every partition.


@MaxGekk, even if we fix this, it will still show the warning at least twice: once during schema inference and once on the read path. That's okay as long as we show the warning and document it. Let's keep this PR simple. This warning will be removed very soon anyway.

```scala
      options: Map[String, String],
      files: Seq[FileStatus]): Option[StructType] = {
    val conf = spark.sessionState.newHadoopConf()
    if (options.contains("ignoreExtension")) {
```


@MaxGekk, let's just remove this option after branch-3.0 is cut.


Shouldn't it be explicitly deprecated for users before it is removed? It should at least be mentioned in the docs if we don't want to output a warning like the one in this PR.


I think it still shows the warning properly, although only during schema inference. Can you simply update docs/sql-data-sources-avro.md to say it's deprecated?


+1 to @HyukjinKwon.

Let's remove the option and document it in the future, instead of making changes like this. If we merge this one, there might be other options we have to do the same thing for.


Here is PR #27194 for the docs.

What changes were proposed in this pull request?

- Removed the `@deprecated` annotation for `AvroOptions.ignoreExtension`.
- Print a log warning if the Hadoop config `avro.mapred.ignore.inputs.without.extension` is set to its non-default value, `true`.
- Print a log warning if the Avro option `ignoreExtension` is set to its non-default value, `false`.

Why are the changes needed?

`AvroOptions.ignoreExtension` is not used by users directly, so users are not aware that the Hadoop config and the Avro option are deprecated.

Does this PR introduce any user-facing change?

Yes

How was this patch tested?

By `AvroSuite`.