
[SPARK-30490][SQL] Eliminate compiler warnings in Avro datasource #27174


Changes from 1 commit


`@@ -70,6 +70,9 @@ class AvroOptions(`

```scala
   */
  @deprecated("Use the general data source option pathGlobFilter for filtering file names", "3.0")
  val ignoreExtension: Boolean = {
    def warn(s: String): Unit = logWarning(
```

Member

Why do we define a separate method?

Member

Author

hmm, to reuse the same code in 2 places.

Member

I don't feel strongly, but I think it's fine not to do it ...

```scala
      s"$s is deprecated, and it will be not use by Avro datasource in the future releases. " +
      "Use the general data source option pathGlobFilter for filtering file names.")
    val ignoreFilesWithoutExtensionByDefault = false
    val ignoreFilesWithoutExtension = conf.getBoolean(
      AvroFileFormat.IgnoreFilesWithoutExtensionProperty,
```

`@@ -78,7 +81,17 @@ class AvroOptions(`

```scala
    parameters
      .get(AvroOptions.ignoreExtensionKey)
      .map(_.toBoolean)
      .getOrElse(!ignoreFilesWithoutExtension)
      .map { ignoreExtensionOption =>
        if (ignoreExtensionOption != !ignoreFilesWithoutExtensionByDefault) {
          warn(s"The Avro option '${AvroOptions.ignoreExtensionKey}'")
```

Member

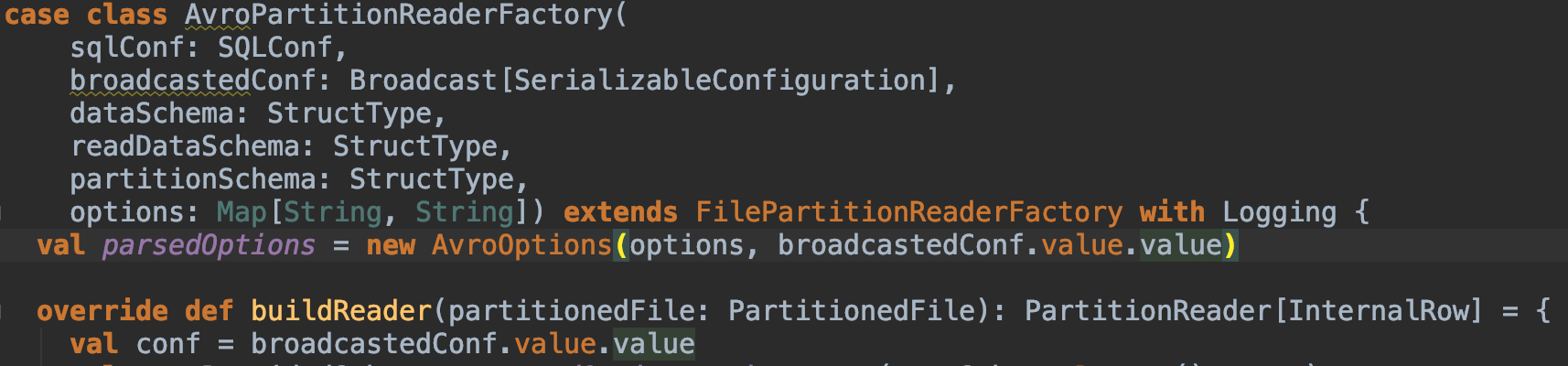

@MaxGekk, from a cursory look, this warning can be shown for every file, which I think is noisy: Lines 24 to 30 in 053dd85

spark/external/avro/src/main/scala/org/apache/spark/sql/v2/avro/AvroPartitionReaderFactory.scala Line 61 in 053dd85

Would you mind double-checking this?
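One common way to keep a deprecation message from repeating for every file or partition is a once-guard. The sketch below is a minimal illustration under stated assumptions: `IgnoreExtensionDeprecation` and `WarnOnceDemo` are hypothetical names that do not exist in Spark, and the logger is passed in as a plain function so the example has no log4j dependency. Because the flag is a JVM-wide singleton, on a cluster this would still log once per executor JVM rather than once per query.

```scala
import java.util.concurrent.atomic.AtomicBoolean
import scala.collection.mutable.ArrayBuffer

// Hypothetical helper, not part of Spark: logs the ignoreExtension
// deprecation warning at most once per JVM.
object IgnoreExtensionDeprecation {
  private val warned = new AtomicBoolean(false)

  // compareAndSet succeeds only for the first caller, so every later call
  // (for example, one per partition) skips the log statement.
  // Returns true only for the call that actually logged.
  def warnOnce(log: String => Unit, message: String): Boolean = {
    val isFirst = warned.compareAndSet(false, true)
    if (isFirst) log(message)
    isFirst
  }
}

object WarnOnceDemo {
  // Stands in for a real logger: collects every message that was logged.
  val logged = ArrayBuffer.empty[String]

  // Simulates building the options once per partition and returns the
  // total number of warnings recorded so far.
  def simulate(partitions: Int): Int = {
    (1 to partitions).foreach { _ =>
      IgnoreExtensionDeprecation.warnOnce(
        msg => logged += msg,
        "The Avro option 'ignoreExtension' is deprecated")
    }
    logged.size
  }
}
```

Simulating three partitions records a single warning, and later simulations add none because the flag is already set.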

Member

Author

@HyukjinKwon I will check that, but my general thoughts are:

Member

Author

@HyukjinKwon you are right, it prints the warning for each partition. I have confirmed that with this test:

```scala
// Assumes this lives in a Spark test suite (such as AvroSuite) that provides
// withTempPath, withLogAppender, spark and testImplicits (for toDF).
import scala.collection.mutable.ArrayBuffer

import org.apache.log4j.AppenderSkeleton
import org.apache.log4j.spi.LoggingEvent

test("count deprecation log events") {
  val partitionNum = 3
  // Collects every log event emitted while the appender is attached.
  val logAppender = new AppenderSkeleton {
    val loggingEvents = new ArrayBuffer[LoggingEvent]()
    override def append(loggingEvent: LoggingEvent): Unit = loggingEvents.append(loggingEvent)
    override def close(): Unit = {}
    override def requiresLayout(): Boolean = false
  }
  withTempPath { dir =>
    // Write the rows as `partitionNum` Avro files.
    // ("header" is a CSV option; the Avro source does not use it.)
    Seq(("a", 1, 2), ("b", 1, 2), ("c", 2, 1), ("d", 2, 1))
      .toDF("value", "p1", "p2")
      .repartition(partitionNum)
      .write
      .format("avro")
      .option("header", true)
      .save(dir.getCanonicalPath)
    withLogAppender(logAppender) {
      // Setting the deprecated option to a non-default value triggers the warning.
      val df = spark
        .read
        .format("avro")
        .schema("value STRING, p1 INTEGER, p2 INTEGER")
        .option(AvroOptions.ignoreExtensionKey, false)
        .option("header", true)
        .load(dir.getCanonicalPath)
      df.count()
    }
    // Keep only the deprecation messages that mention the option key.
    val deprecatedEvents = logAppender.loggingEvents
      .map(_.getRenderedMessage)
      .filter(_.contains(AvroOptions.ignoreExtensionKey))
    // One warning per partition.
    assert(deprecatedEvents.size === partitionNum)
  }
}
```

Member

Author

When I moved the instantiation of `AvroOptions` out of `buildReader()`: Lines 60 to 66 in 053dd85

The first time from:

The second time from:

Member

Author

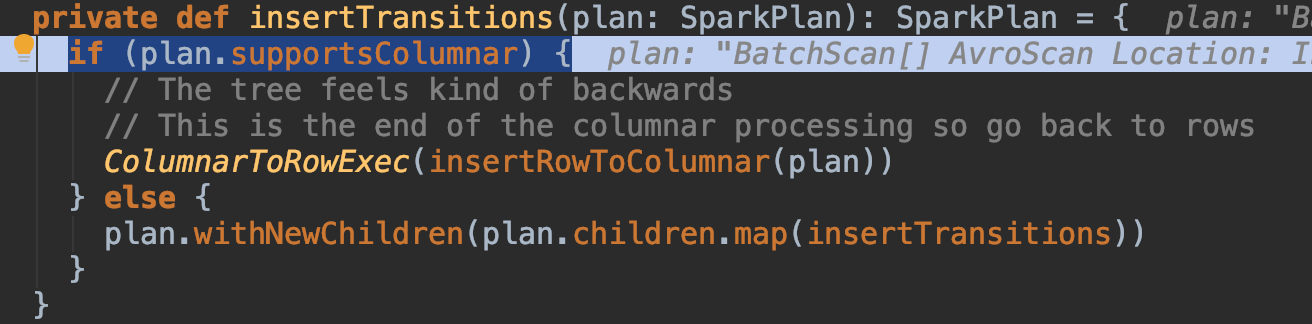

It is interesting that rewriting `supportsColumnar` as

```scala
override val supportsColumnar: Boolean = {
  val factory = readerFactory
  require(partitions.forall(factory.supportColumnarReads) ||
    !partitions.exists(factory.supportColumnarReads),
    "Cannot mix row-based and columnar input partitions.")
  partitions.exists(factory.supportColumnarReads)
}
```

does not help either, because

Member

Author

I think it is not nice that we construct some classes twice when it is not necessary. WDYT? /cc @cloud-fan @dongjoon-hyun

Contributor

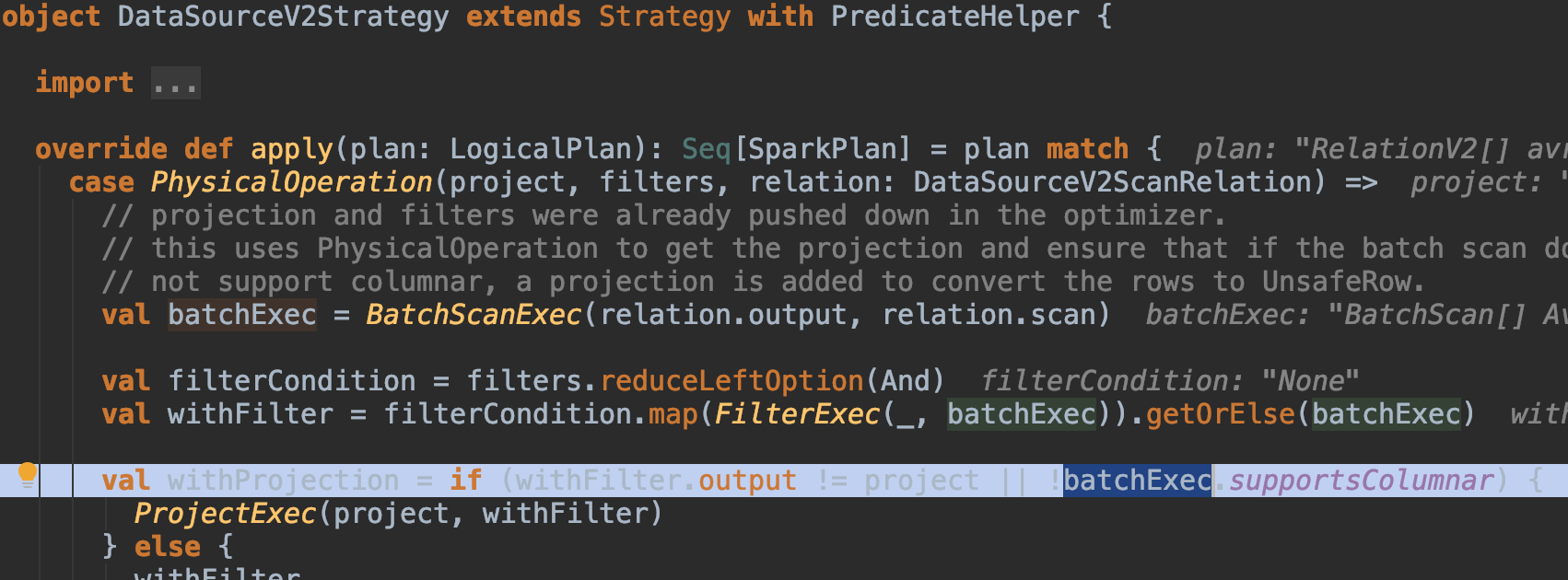

Yeah, we shouldn't instantiate it twice, but that's not a big problem. I'm more worried that we instantiate it for every partition.
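The per-partition concern can be illustrated by counting constructions. This is a minimal sketch, not Spark code: `ParsedOptions`, `PerPartitionFactory`, `SharedOptionsFactory` and `OptionsCounter` are hypothetical stand-ins for `AvroOptions` and the reader factory. It only shows the difference in construction count; in Spark the factory is serialized to executors and `AvroOptions` holds a Hadoop `Configuration`, so hoisting the options is not a drop-in fix.

```scala
import java.util.concurrent.atomic.AtomicInteger

// Hypothetical counter: how many times the options object was built.
object OptionsCounter {
  val constructions = new AtomicInteger(0)
}

// Hypothetical stand-in for AvroOptions: parses the options map and counts itself.
final class ParsedOptions(parameters: Map[String, String]) {
  OptionsCounter.constructions.incrementAndGet()
  // Defaults to true when the key is absent, like the default in the diff.
  val ignoreExtension: Boolean =
    parameters.get("ignoreExtension").map(_.toBoolean).getOrElse(true)
}

// Parses the options inside the per-partition method, similar to building
// AvroOptions in buildReader(): one construction (and one warning) per partition.
final class PerPartitionFactory(parameters: Map[String, String]) {
  def buildReader(partition: Int): Boolean =
    new ParsedOptions(parameters).ignoreExtension
}

// Parses the options once, when the factory is created, and reuses the result.
final class SharedOptionsFactory(parameters: Map[String, String]) {
  private val options = new ParsedOptions(parameters)
  def buildReader(partition: Int): Boolean = options.ignoreExtension
}
```

Reading three partitions through `PerPartitionFactory` builds the options three times, while `SharedOptionsFactory` builds them once.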

Member

@MaxGekk, even if we fix this, it will still show the warning at least twice: once for schema inference and once for the read path. That's okay as long as we show the warning and document it. Let's keep it simple in this PR. This warning will be removed very soon anyway.

```scala
        }
        ignoreExtensionOption
      }.getOrElse {
        if (ignoreFilesWithoutExtension != ignoreFilesWithoutExtensionByDefault) {
          warn(s"The Hadoop's config '${AvroFileFormat.IgnoreFilesWithoutExtensionProperty}'")
        }
        !ignoreFilesWithoutExtension
      }
  }

  /**
```
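Both branches above warn only when a non-default value is actually in effect. A minimal sketch of that decision as a pure function, so each case can be checked without a logger; `IgnoreExtensionResolution` and `resolve` are hypothetical names, and the warning strings are shortened placeholders rather than Spark's actual messages.

```scala
// Hypothetical pure-function version of the ignoreExtension resolution above:
// it returns the effective value plus the warning subject (if any) instead of logging.
object IgnoreExtensionResolution {
  // Same default as ignoreFilesWithoutExtensionByDefault in the diff.
  val IgnoreFilesWithoutExtensionByDefault = false

  /**
   * @param avroOption parsed value of the Avro option 'ignoreExtension', if set
   * @param hadoopIgnoreFilesWithoutExtension value read from the Hadoop config
   * @return (effective ignoreExtension, optional deprecation warning subject)
   */
  def resolve(
      avroOption: Option[Boolean],
      hadoopIgnoreFilesWithoutExtension: Boolean): (Boolean, Option[String]) =
    avroOption match {
      case Some(ignoreExtensionOption) =>
        // The Avro option wins; warn only if it differs from its default (true).
        val warning =
          if (ignoreExtensionOption != !IgnoreFilesWithoutExtensionByDefault) {
            Some("The Avro option 'ignoreExtension'")
          } else None
        (ignoreExtensionOption, warning)
      case None =>
        // Fall back to the Hadoop config; warn only if it was changed from its default.
        val warning =
          if (hadoopIgnoreFilesWithoutExtension != IgnoreFilesWithoutExtensionByDefault) {
            Some("The Hadoop config for ignoring files without extension")
          } else None
        (!hadoopIgnoreFilesWithoutExtension, warning)
    }
}
```

With nothing configured, `resolve(None, false)` yields `(true, None)`: extensions are ignored and nothing is logged. Setting the Avro option to `true` is also silent because it matches the default; only `false`, or the Hadoop config set to `true`, produces a warning.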


Why remove this if it's really deprecated? I get that it removes some compiler warnings, but that's not super important. Or can it be worked around, as you do elsewhere, by deprecating the test methods too?
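The workaround relies on a Scala compiler rule: a use of a deprecated member inside a definition that is itself `@deprecated` does not produce a deprecation warning. A minimal sketch with hypothetical names (`LegacyAvroOptions`, `LegacyAvroOptionsChecks`) standing in for the deprecated option and a test helper:

```scala
// Hypothetical stand-in for a deprecated option such as AvroOptions.ignoreExtension.
object LegacyAvroOptions {
  @deprecated("Use the general data source option pathGlobFilter for filtering file names", "3.0")
  val ignoreExtension: Boolean = true
}

object LegacyAvroOptionsChecks {
  // This method is itself @deprecated, so the compiler does not report a
  // deprecation warning for the use of LegacyAvroOptions.ignoreExtension below.
  // Any non-deprecated caller of checkDefault() gets the warning instead.
  @deprecated("Exercises the deprecated ignoreExtension option", "3.0")
  def checkDefault(): Boolean = LegacyAvroOptions.ignoreExtension
}
```

The warning does not disappear entirely; it moves to wherever `checkDefault()` is called, unless that caller is deprecated as well.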