-

Notifications

You must be signed in to change notification settings - Fork 29k

[SPARK-32696][SQL][test-hive1.2][test-hadoop2.7]Get columns operation should handle interval column properly #29539

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Changes from all commits

File filter

Filter by extension

Conversations

Jump to

Diff view

Diff view

There are no files selected for viewing

| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -19,7 +19,7 @@ package org.apache.spark.sql.hive.thriftserver | |

|

|

||

| import java.sql.{DatabaseMetaData, ResultSet} | ||

|

|

||

| import org.apache.spark.sql.types.{ArrayType, BinaryType, BooleanType, DecimalType, DoubleType, FloatType, IntegerType, MapType, NumericType, StringType, StructType, TimestampType} | ||

| import org.apache.spark.sql.types.{ArrayType, BinaryType, BooleanType, CalendarIntervalType, DecimalType, DoubleType, FloatType, IntegerType, MapType, NumericType, StringType, StructType, TimestampType} | ||

|

|

||

| class SparkMetadataOperationSuite extends HiveThriftJdbcTest { | ||

|

|

||

|

|

@@ -333,4 +333,31 @@ class SparkMetadataOperationSuite extends HiveThriftJdbcTest { | |

| assert(pos === 17, "all columns should have been verified") | ||

| } | ||

| } | ||

|

|

||

| test("get columns operation should handle interval column properly") { | ||

|

Contributor

There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. Nit: Can we also test an extract query of the table with calendar types?

Member

Author

There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. This PR fixes SparkGetColumnsOperation. As a meta operation, it is not related to extract-queries that executed by SparkExecuteStatementOperation. BTW, I notice that test cases for extracting interval values in VIEWS through the thrift server might be missing in the current codebase. Maybe we can make another PR to improve these, WDYT, @cloud-fan Or I can just add some UTs here https://github.com/yaooqinn/spark/blob/d24d27f1bc39e915df23d65f8fda0d83e716b308/sql/hive-thriftserver/src/test/scala/org/apache/spark/sql/hive/thriftserver/HiveThriftServer2Suites.scala#L674

Contributor

There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. That's why I think we should add a small check here to make sure they actually work.

Contributor

There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. I'm OK to add the missing test in this PR.

Member

Author

There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. done, thank you guys for the check~ |

||

| val viewName = "view_interval" | ||

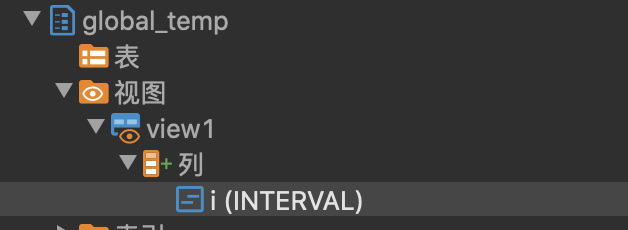

| val ddl = s"CREATE GLOBAL TEMP VIEW $viewName as select interval 1 day as i" | ||

|

|

||

| withJdbcStatement(viewName) { statement => | ||

| statement.execute(ddl) | ||

| val data = statement.getConnection.getMetaData | ||

| val rowSet = data.getColumns("", "global_temp", viewName, null) | ||

| while (rowSet.next()) { | ||

| assert(rowSet.getString("TABLE_CAT") === null) | ||

| assert(rowSet.getString("TABLE_SCHEM") === "global_temp") | ||

| assert(rowSet.getString("TABLE_NAME") === viewName) | ||

| assert(rowSet.getString("COLUMN_NAME") === "i") | ||

| assert(rowSet.getInt("DATA_TYPE") === java.sql.Types.OTHER) | ||

| assert(rowSet.getString("TYPE_NAME").equalsIgnoreCase(CalendarIntervalType.sql)) | ||

|

Contributor

There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. if we treat it as string type, can we still report the

Member

Author

There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. I guess that all the meta operations should do nothing else but specifically describe how all the objects(database/table /columns etc) stored in the Spark system. If we change the column meta here from INTERVAL to STRING, so the column here becomes orderable and comparable?(JDBC users may guess), they may have no idea about what they are dealing with and get a completely different awareness of the meta-information comparing to those users who use spark-sql self-contained applications. |

||

| assert(rowSet.getInt("COLUMN_SIZE") === CalendarIntervalType.defaultSize) | ||

| assert(rowSet.getInt("DECIMAL_DIGITS") === 0) | ||

| assert(rowSet.getInt("NUM_PREC_RADIX") === 0) | ||

| assert(rowSet.getInt("NULLABLE") === 0) | ||

| assert(rowSet.getString("REMARKS") === "") | ||

| assert(rowSet.getInt("ORDINAL_POSITION") === 0) | ||

| assert(rowSet.getString("IS_NULLABLE") === "YES") | ||

| assert(rowSet.getString("IS_AUTO_INCREMENT") === "NO") | ||

| } | ||

| } | ||

| } | ||

| } | ||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

What will be returned by rs.getMetadata.getColumnType for the interval column?

I believe that will be a string, because of how we just make it a string in the output schema in https://github.com/apache/spark/blob/master/sql/hive-thriftserver/src/main/scala/org/apache/spark/sql/hive/thriftserver/SparkExecuteStatementOperation.scala#L362

There was a discussion last year in #25694 (comment), that because the interval is just returned as string in the query output schema, and not compliant to standard SQL interval types, it should not be listed by SparkGetTypeInfoOperation, and be treated just as strings.

If we want to make it be returned as a java.sql.Types.OTHER, to make this complete, it should be returned as such in result set metadata as well, and listed in SparkGetTypeInfoOperation.

Or we could map it to string.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

the ResultSetMetadata describes the return types of the result set, the GetColumnOperation retrieves the metadata of the original table or view. IMHO, they do not have to be the same.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I will make a followup to add it to SparkGetTypeInfoOperation

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It would be the most consistent if the table columns metadata, and metadata of the result of

select * from tablehad consistent types. I don't really have a preference whether it should be string or interval. Last year the discussion leaned towards string, because of the intervals not really being standard compliant, but indeed there might be value in having it marked as interval in the schema, so the application might try to parse it as such... Though, e.g. between Spark 2.4 and Spark 3.0 the format of the returned interval changed, see https://issues.apache.org/jira/browse/SPARK-32132, which might have broken how anyone would have parsed it, so Spark is not really giving any guarantees as to the stability of the format of this type...Ultimately, I think this is very rarely used, as intervals didn't even work at all until #25277 and it was not raised earlier...

Uh oh!

There was an error while loading. Please reload this page.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The ResultSetMetadata is used by the client-side user to do the right work on the result set what they get,when the interval values become strings, they need to follow the rules of String operations in their future activities.

The GetColumnOperation tells the client-side users about how the data is stored and formed at the server-side, then if the JDBC-users know column A is an interval type, then they should notice that they can not perform string functions, comparators, etc on column A.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

In ResultSetMetadata would the client-side user not learn that they should follow string rules by using getColumnClassName, which should return String, while it would be still valuable to them to know that that column represents an interval?